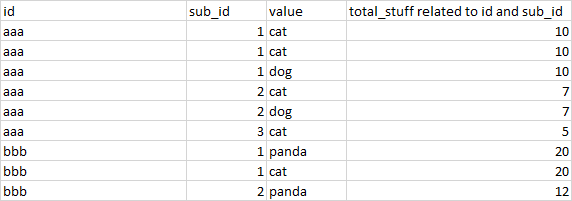

I have a pandas dataframe like this:

as a plain text:

{'id;sub_id;value;total_stuff related to id and sub_id': ['aaa;1;cat;10', 'aaa;1;cat;10', 'aaa;1;dog;10', 'aaa;2;cat;7', 'aaa;2;dog;7', 'aaa;3;cat;5', 'bbb;1;panda;20', 'bbb;1;cat;20', 'bbb;2;panda;12']}

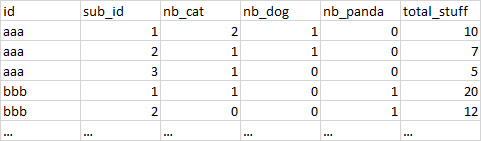

The desired output I want is this.

Note that there are many different "values" possible, so I would need to automate the creation of dummies variables (nb_animals). But these dummies variables must contain the number of occurences by id and sub_id. The total_stuff is always the same value for a given id/sub_id combination.

I've tried using get_dummies(df, columns = ['value']), which gave me this table.

as a plain text:

{'id;sub_id;value_cat;value_dog;value_panda;total_stuff related to id and sub_id': ['aaa;1;2;1;0;10', 'aaa;1;2;1;0;10', 'aaa;1;2;1;0;10', 'aaa;2;1;1;0;7', 'aaa;2;1;1;0;7', 'aaa;3;1;0;0;5', 'bbb;1;1;0;1;20', 'bbb;1;1;0;1;20', 'bbb;2;0;0;1;12']}

I'd love to use some kind of df.groupby(['id','sub_id']).agg({'value_cat':'sum', 'value_dog':'sum', ... , 'total_stuff':'mean'}), but writing all of the possible animal values would be too tedious.

So how to get a proper aggregated count/sum for values, and average for total_stuff (since total_stuff is unique per id/sub_id combination)

Thanks

EDIT : Thanks chikich for the neat answer. The agg_dict is what I needed

CodePudding user response:

Use pd.get_dummies to transform categorical data

df = pd.get_dummies(df, prefix='nb', columns='value')

Then group by id and subid

agg_dict = {key: 'sum' for key in df.columns if key[:3] == 'nb_'}

agg_dict['total_stuff'] = 'mean'

df = df.groupby(['id', 'subid']).agg(agg_dict).reset_index()