I have a problem. I want to investigate whether my assumption is correct that it can be said that receiver X frequently receives a delivery from consignor Y.

I have grouped receiver and consignor for this purpose.

However, what I would like to do now, I would like to output to myself all receivers where the most frequent consignor contains more than 80% of all consignors in receiver .

For example we have receiver c this has received from consignor 2, 3 what. From consignor 2 only 1 packet and from consignor 3 4 packets. Thus he has received a total of 5 packets. Thus consignor 3 accepts 80% of all consignor within receiver c.

Thus receiver c should be represented in a table. This should be done below for all others.

In simple terms, I want all receivers that have more than 80% of a given consignor.

How can I do this?

What I want

receiver consignor count_with_most_consignor count_all

c 3 4 5

d 2 4 5

Dataframe

consignor receiver

0 1 a

1 2 a

2 1 a

3 2 c

4 3 c

5 1 b

6 3 c

7 3 c

8 3 c

9 2 d

10 2 d

11 2 d

12 1 d

13 2 d

Code

import pandas as pd

d = {'consignor': [1, 2, 1, 2, 3, 1, 3, 3, 3, 2, 2, 2, 1, 2],

'receiver': ['a', 'a', 'a', 'c', 'c', 'b', 'c', 'c', 'c', 'd', 'd', 'd', 'd', 'd']}

df = pd.DataFrame(data=d)

print(df)

df_ = df.groupby(['receiver','consignor']).size().reset_index(name='counts').sort_values(by=['counts'], ascending=False)

print(df_)

X = 'c'

print(df_[df_['receiver'] == X].shape[1])

print(df_[df_['receiver'] == X])

Out

[OUT]

3

receiver consignor counts

4 c 3 4

3 c 2 1

CodePudding user response:

crosstab

# frequency table

s = pd.crosstab(df['receiver'], df['consignor'])

# agg columns along axis=1

d = {

'consignor': s.idxmax(1),

'count_with_most_consignor': s.max(1),

'count_all': s.sum(1)

}

(

s.assign(**d) # assign the agg columns

.drop(s.columns, axis=1) # drop the unwanted columns

.query('count_with_most_consignor / count_all >= .8') # filter the rows

)

Result

consignor consignor count_with_most_consignor count_all

receiver

b 1 1 1

c 3 4 5

d 2 4 5

CodePudding user response:

You can simply get all shares with value_counts(normalize=True)

In [13]: df.groupby("receiver").value_counts(normalize=True)

Out[13]:

receiver consignor

a 1 0.666667

2 0.333333

b 1 1.000000

c 3 0.800000

2 0.200000

d 2 0.800000

1 0.200000

dtype: float64

And then filter for values above 0.8 e.g. like this:

In [15]: res = df.groupby("receiver").value_counts(normalize=True).where(lambda x: x > 0.8).dropna()

Out[15]:

receiver consignor

b 1 1.0

c 3 0.8

d 2 0.8

dtype: float64

The simplest way to get the rest of the info is calculating them seperately

In [40]: count_with_most_consignor = df.value_counts().rename("count_with_most_consignor")

Out[41]:

consignor receiver

2 d 4

3 c 4

1 a 2

b 1

d 1

2 a 1

c 1

Name: count_with_most_consignor, dtype: int64

In [42]: count_all = df.groupby("receiver").size().rename("count_all")

In [43]: count_all

Out[43]:

receiver

a 3

b 1

c 5

d 5

Name: count_all, dtype: int64

and join that up together.

In [39]: res.rename("share").to_frame().join(count_with_most_consignor).join(count_all).reset_index()

Out[39]:

receiver consignor share count_with_most_consignor count_all

0 b 1 1.0 1 1

1 c 3 0.8 4 5

2 d 2 0.8 4 5

CodePudding user response:

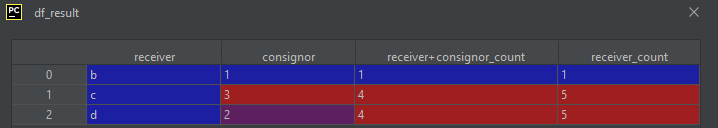

I could come up only with this kinda tricky way:)

# First, we create a group column

df["receiver consignor"] = df["receiver"] "-" df["consignor"].astype("str")

# Then we count "receiver consignor" values

df = df.merge(df["receiver consignor"].value_counts(), left_on="receiver consignor", right_index=True, suffixes=(None, "_count"))

# Now we count deliveries by receivers

df = df.merge(df["receiver"].value_counts(), left_on="receiver", right_index=True, suffixes=(None, "_count"))

# Now we can calculate the percentage

df["percentage"] = df["receiver consignor_count"] * 100 / df["receiver_count"]

# At last, we can filter out what we need now

df_result = df[df["percentage"] >= 80].groupby(["receiver", "consignor", "receiver consignor_count", "receiver_count"]).first().reset_index()[["receiver", "consignor", "receiver consignor_count", "receiver_count"]]

CodePudding user response:

A simple solution with dictionaries as follows:

import pandas as pd

d = {'consignor': [1, 2, 1, 2, 3, 1, 3, 3, 3, 2, 2, 2, 1, 2],

'receiver': ['a', 'a', 'a', 'c', 'c', 'b', 'c', 'c', 'c', 'd', 'd', 'd', 'd', 'd']}

df = pd.DataFrame(data=d)

df_ = df.groupby(['receiver','consignor']).size().reset_index(name='counts').sort_values(by=['counts'], ascending=False)

df__ = df_[['receiver','counts']]

df___ = df__.groupby(['receiver']).aggregate('sum')

dic = df___.to_dict()

for row in range(len(df_)):

if df_['counts'][row] / dic['counts'][df_['receiver'][row]] < 0.8:

df_ = df_.drop(row)

print(df_)

Outputs:

receiver consignor counts

4 c 3 4

6 d 2 4

2 b 1 1