I'm trying to use R to extract player photos from the PGA website. The following is my attempt at getting the image URLs, but the URLs it returns either do not display an image or display a blank one, as shown in the image below.

if (!require(pacman)) install.packages("pacman")
pacman::p_load('rvest', 'stringi', 'dplyr', 'tidyr', 'measurements', 'reshape2', 'foreach', 'doParallel', 'curl', 'httr', 'Iso', 'janitor')

PGA_url <- "https://www.pgatour.com"
pga_web <- read_html(paste0(PGA_url, '/players.html'))

plyers_photo <- pga_web %>%
  html_nodes("[class='player-card']") %>%
  html_nodes('div.player-image-wrapper') %>%
  html_nodes('img') %>%
  html_attr('src')

Could someone kindly tell me what I'm doing wrong?

CodePudding user response:

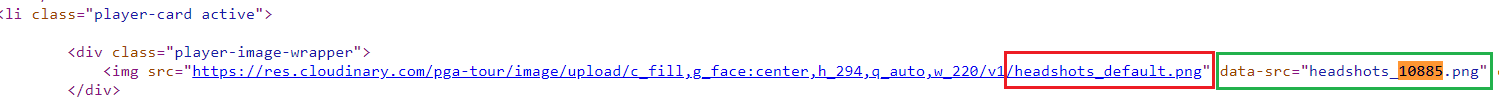

If you examine the page source, you will see that your code returns exactly what the static HTML contains: each img has a default placeholder value in its src attribute. Scan across the same tag and you will notice an adjacent data-src attribute, which holds an alternate ending for the png matching the regex headshots_\d{5}\.png.
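As a rough check on that pattern, the sketch below filters a vector of data-src values down to those that look like real headshots. The values are made up for illustration; real ones would come from html_attr("data-src").

```r
# Hypothetical data-src values; real ones come from html_attr("data-src").
data_src <- c("headshots_12345.png", "headshots_default.png", NA)

# TRUE only where the value ends in headshots_<five digits>.png.
# grepl() returns FALSE for NA input, so missing attributes are excluded too.
is_headshot <- grepl("headshots_\\d{5}\\.png$", data_src)

valid <- data_src[is_headshot]
print(valid)
```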

When JavaScript runs in the browser, those URLs are dynamically updated, with the default png endings replaced by the values in the data-src attributes. That does not happen with rvest, which makes a plain HTTP request and does not execute JavaScript, so you only see the URLs as served.

Either replace the endings of the URLs you are getting with the data-src value, which gives the fixed-size small image, or take the part of the URL up to and including upload as a base and append the extracted data-src values, which gives large images.
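The two options can be sketched with base R string functions. The src value below is a hypothetical placeholder URL (its transformation segment and default file name are assumptions, not copied from the site), and the data-src value is likewise invented.

```r
# Hypothetical attribute values for a single <img> element.
src      <- "https://pga-tour-res.cloudinary.com/image/upload/c_fill,w_80/headshots_default.png"
data_src <- "headshots_12345.png"

# Option 1: keep the sized URL and swap only the file name at the end.
small <- sub("[^/]+$", data_src, src)

# Option 2: keep everything up to and including "upload/", then append data-src.
base  <- sub("(.*/upload/).*", "\\1", src)
large <- paste0(base, data_src)

print(small)
print(large)
```

Option 1 preserves whatever sizing parameters sit between upload/ and the file name, while option 2 drops them.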

There is also no need for all those chained html_nodes() calls; a single call with an appropriate CSS selector will do. Also prefer html_elements() over html_nodes(), which rvest has superseded:

library(rvest)
library(magrittr)

PGA_url <- "https://www.pgatour.com"
pga_web <- read_html(paste0(PGA_url, "/players.html"))

# Base of the image URLs, up to and including "upload/"
placeholder_link <- 'https://pga-tour-res.cloudinary.com/image/upload/'

# One selector replaces the chained calls; read data-src rather than src
plyers_photo <- pga_web %>%
  html_elements(".player-card .player-image-wrapper img") %>%
  html_attr("data-src") %>%
  paste0(placeholder_link, .)
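One thing to watch for: html_attr() returns NA when an element lacks the requested attribute, and paste0() turns NA into the literal text "NA". Whether any img on this page is missing data-src is an assumption here, but a minimal sketch of guarding against it, using invented values, could look like this:

```r
placeholder_link <- "https://pga-tour-res.cloudinary.com/image/upload/"

# Hypothetical result of html_attr("data-src"); NA marks a missing attribute.
data_src <- c("headshots_12345.png", NA, "headshots_67890.png")

# Pasting directly yields a bogus URL ending in "NA" for the missing entry.
naive <- paste0(placeholder_link, data_src)

# Dropping missing values first keeps only usable URLs.
clean <- paste0(placeholder_link, data_src[!is.na(data_src)])

print(naive)
print(clean)
```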