I'm trying to isolate a background from multiple images that have something different between each other, that is overlapping the background.

the images I have are individually listed here:

I wanted to do it in a sequence of images, as of I'm reading some video feed, and by getting the last frames I'm processing them to isolate the background, like this:

import os

import cv2

first = True

bwand = None

for filename in os.listdir('images'):

curImage = cv2.imread('images/%s' % filename)

if(first):

first = False

bwand = curImage

continue

bwand = cv2.bitwise_and(bwand,curImage)

cv2.imwrite("and.png",bwand)

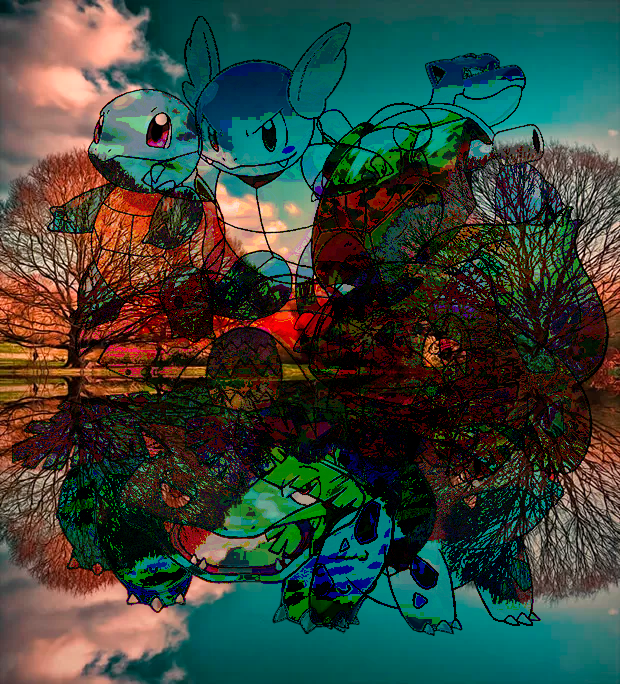

From this code, I'm always incrementing my buffer with bitwise operations, but the results I get is not what I'm looking for: Bitwise and:

the way of concurrent adding to a buffer its the best approach for me in terms of video filtering and performance, but if I treat it like a list, I can look for the median value like so:

import os

import cv2

import numpy as np

sequence = []

for filename in os.listdir('images'):

curImage = cv2.imread('images/%s' % filename)

sequence.append(curImage)

imgs = np.asarray(sequence)

median = np.median(imgs, axis=0)

cv2.imwrite("res.png",median)

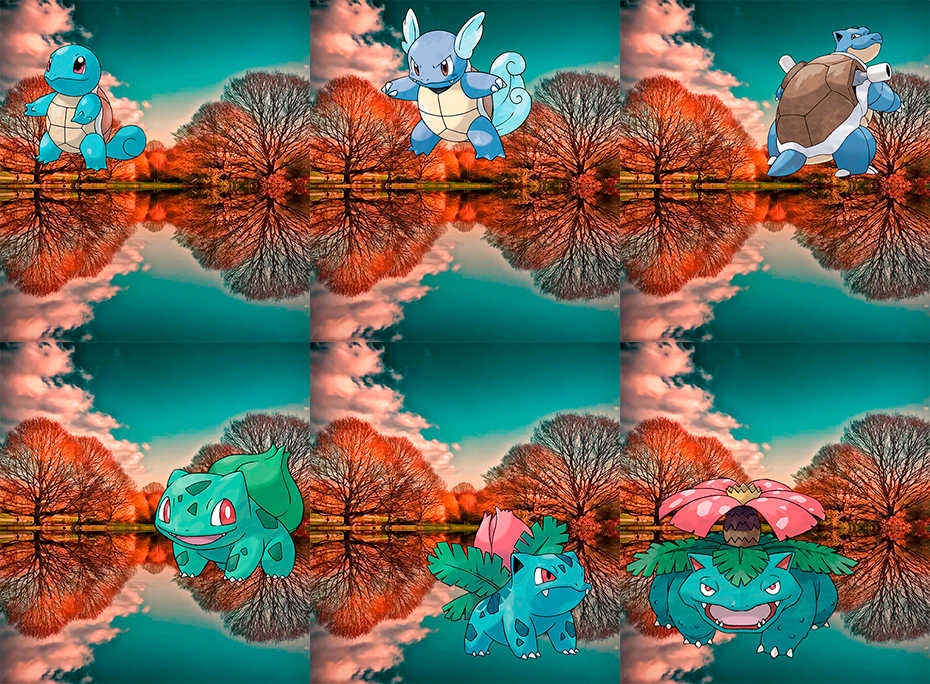

it results me:

Which is still not perfect, because I'm looking for the median value, if I would look for the mode value the performance would decrease significantly.

Is there an approach for obtaining the result that works as a buffer like the first alternative but outputs me the best result with good performance?

--Edit As suggested by @Christoph Rackwitz I used OpenCV background subtractor, it works as one of the requested features which is a buffer, but the result is not the most pleasant:

code:

import os

import cv2

mog = cv2.createBackgroundSubtractorMOG2()

for filename in os.listdir('images'):

curImage = cv2.imread('images/%s' % filename)

mog.apply(curImage)

x = mog.getBackgroundImage()

cv2.imwrite("res.png",x)

CodePudding user response:

Since scipy.stats.mode takes ages to do its thing, I did the same manually:

- calculate histogram (for every channel of every pixel of every row of every image)

argmaxgets mode- reshape and cast

Still not video speed but oh well. numba can probably speed this up.

filenames = ...

assert len(filenames) < 256, "need larger dtype for histogram"

stack = np.array([cv.imread(fname) for fname in filenames])

sheet = stack[0]

hist = np.zeros((sheet.size, 256), dtype=np.uint8)

index = np.arange(sheet.size)

for sheet in stack:

hist[index, sheet.flat] = 1

result = np.argmax(hist, axis=1).astype(np.uint8).reshape(sheet.shape)

del hist # because it's huge

cv.imshow("result", result); cv.waitKey()

And if I didn't use histograms and extensive amounts of memory, but a fixed number of sheets and data access that's cache-friendly, it could likely be even faster.