I'm working on a student project about recognizing the resolution of videos.

My job is to prepare a training dataset from videos (I'm downloading them from YouTube), and I do it in the following steps:

- Downloading pre-selected videos in every quality (2160p, 1440p, 1080p, 720p...)

- Extracting frames from every downloaded video (about 20-30 frames)

- Upscaling every frame to the same resolution (in my case I upscale all frames to 4K). Extracted frames have different dimensions, so I need to expand them all to the same resolution

- Splitting these upscaled frames into 100x100 blocks

After completing this process, he gets a great deal of sorted data

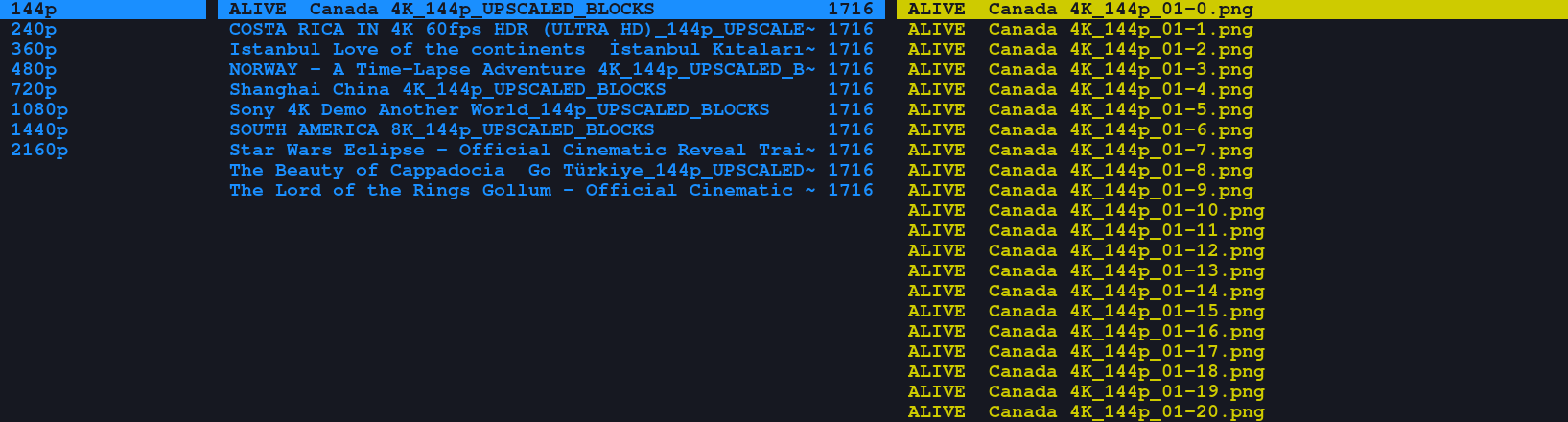

Below is a picture of what it looks like

On the left, you can see the directories sorted by resolution.

In the middle are the directories for the randomly downloaded videos.

On the right are the 100x100 blocks mentioned in the fourth step, from each video at each quality.

The result I would like to achieve is a model that, given input prepared in the same way as the training data, properly recognizes the quality (e.g. for a Full HD video the output would be 1080p).

Now I'm wondering which ready-made CNN model to choose.

My questions:

- What solution do you think I should use here?

- With the current set of data, how should I label it, or should I prepare a different set of data?

Thank you very much in advance for your answers

CodePudding user response:

It seems like you are actually trying to solve an easier problem than the discriminator of KernelGAN:

Sefi Bell-Kligler, Assaf Shocher, Michal Irani, "Blind Super-Resolution Kernel Estimation using an Internal-GAN" (NeurIPS 2019).

In their work, they tried to estimate an arbitrary downsampling kernel relating HR and LR images. Your work is much simpler: you only try to select between several known upsampling kernels. Since your upscaling method is known, you only need to recover the amount of upscaling.
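In other words, this is a plain classification problem: each source resolution corresponds to one upscaling factor, and the directory a frame came from already tells you its label. Here is a minimal sketch, assuming the directories are named after the source resolution (`720p`, `1080p`, ...) and everything is upscaled to a height of 2160. The `RESOLUTIONS` list, the example path, and the helper names are hypothetical, so adapt them to your layout:

```python
from pathlib import PurePosixPath

# Assumed set of source resolutions (frame heights); adjust to what you downloaded.
RESOLUTIONS = [144, 240, 360, 480, 720, 1080, 1440, 2160]
TARGET_HEIGHT = 2160  # every frame is upscaled to 4K


def source_height(path):
    """Read the source height from the first '<number>p' directory in the path."""
    for part in PurePosixPath(path).parts:
        if part.endswith("p") and part[:-1].isdigit():
            return int(part[:-1])
    raise ValueError(f"no resolution directory in {path!r}")


def class_index(path):
    """Integer label for a classifier: position of the source height in RESOLUTIONS."""
    return RESOLUTIONS.index(source_height(path))


def upscale_factor(path):
    """The amount of upscaling the network implicitly has to recover."""
    return TARGET_HEIGHT / source_height(path)


example = "dataset/720p/video_03/frame_0007.png"  # hypothetical path
print(class_index(example), upscale_factor(example))  # prints: 4 3.0
```

The class index is what you would feed to a cross-entropy loss; the upscale factor is only there to show what each class means.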

I suggest you start with a CNN that has an architecture similar to the discriminator of KernelGAN. However, I would consider significantly increasing the receptive field so that it can reason about upscaling from 144p to 4K.
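To get a feeling for how large the receptive field needs to be, here is a rough back-of-the-envelope calculation. A frame upscaled from 144p to 4K is stretched by 2160 / 144 = 15, so a single source pixel covers about 15 pixels per axis. The two layer stacks below are hypothetical examples chosen for illustration, not the actual KernelGAN configuration:

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of a stack of conv layers.

    `layers` is a list of (kernel_size, stride) pairs, first layer first.
    """
    rf, jump = 1, 1  # jump = distance in input pixels between adjacent outputs
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


# Hypothetical small stack: one 7x7 conv followed by 1x1 convs.
small = [(7, 1)] + [(1, 1)] * 5
# Hypothetical deeper stack with strided 3x3 convs to widen the view.
wide = [(7, 1), (3, 2), (3, 2), (3, 2), (3, 1)]

max_factor = 2160 / 144  # largest upscaling factor, 144p -> 4K
print(receptive_field(small), receptive_field(wide), max_factor)  # prints: 7 37 15.0
```

With a 7-pixel receptive field the network sees less than one source pixel of a 144p frame, while 37 pixels covers roughly two and a half source pixels. Keep in mind that the receptive field is also bounded by the crop size you train on.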

Side notes:

- Do not change the aspect ratio of the frames when you upscale them! This will make your problem much more difficult: you will need to estimate two upscaling parameters (horizontal/vertical) instead of only one.

- Do not crop 100x100 regions in advance - let your Dataset's transformations do it for you as random augmentations.
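Both side notes can be sketched in a few lines. This is only an illustration using the standard library: `upscaled_size` and `random_crop_box` are hypothetical helpers, and the returned box follows the `(left, upper, right, lower)` convention that Pillow's `Image.crop` accepts. In a PyTorch pipeline, a random-crop transform such as torchvision's `RandomCrop` inside your Dataset would play the same role.

```python
import random


def upscaled_size(width, height, target_height=2160):
    """Target size using ONE scale factor for both axes (aspect ratio preserved)."""
    scale = target_height / height
    return round(width * scale), target_height


def random_crop_box(width, height, size=100, rng=random):
    """Pick a random size x size window inside a width x height frame."""
    if width < size or height < size:
        raise ValueError("frame is smaller than the crop size")
    left = rng.randint(0, width - size)  # randint is inclusive on both ends
    top = rng.randint(0, height - size)
    return left, top, left + size, top + size


w, h = upscaled_size(1280, 720)  # a 720p frame upscaled to 4K -> (3840, 2160)
box = random_crop_box(w, h, rng=random.Random(0))  # seeded only for repeatability
print((w, h), box)
```

Drawing a fresh box every time a frame is loaded gives you a different 100x100 block each epoch, instead of a fixed set of blocks stored on disk.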