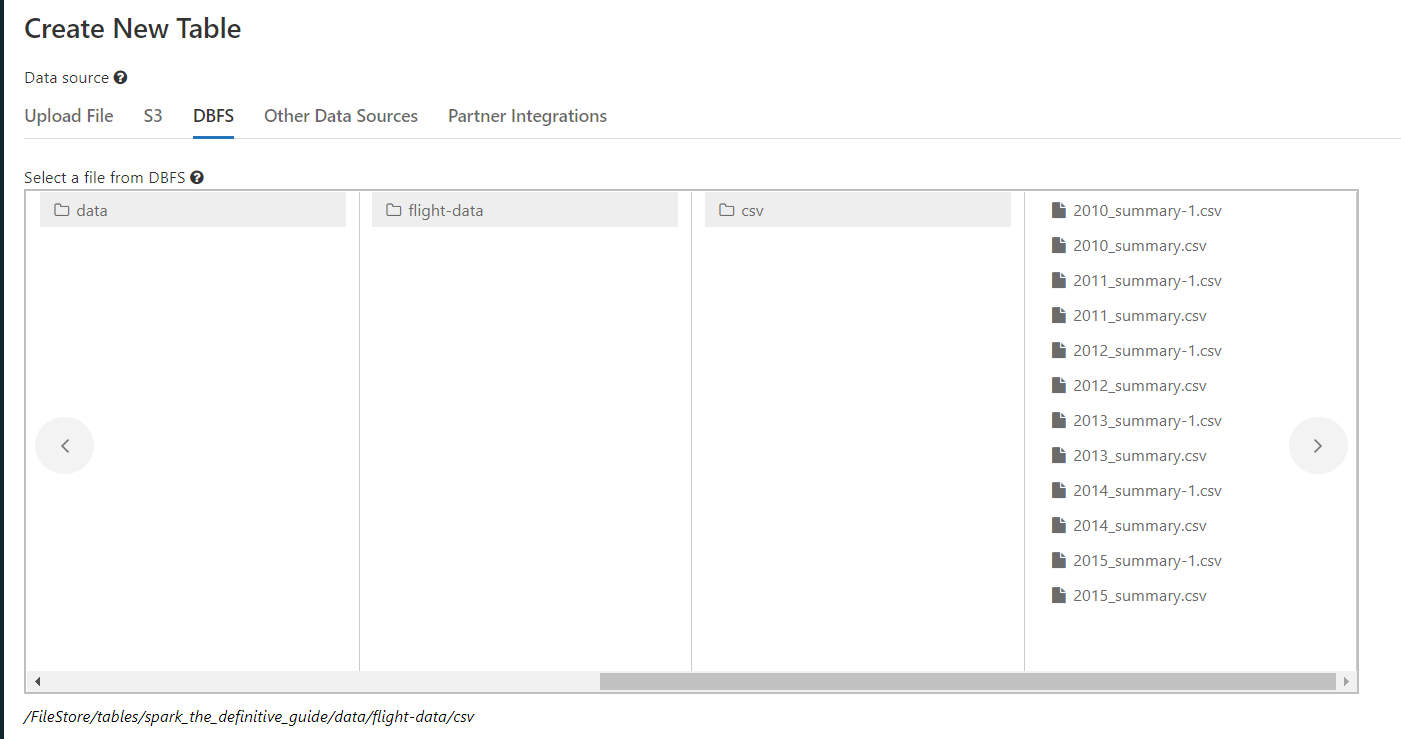

I'm a beginner to Spark and just picked up the highly recommended 'Spark: The Definitive Guide' textbook. While running the code examples, I came across the first example that needs the flight-data CSV files provided with the book. I've uploaded the files to the following location, as shown in the screenshot:

/FileStore/tables/spark_the_definitive_guide/data/flight-data/csv

In the past I've used Azure Databricks to upload files directly to DBFS and list them with the ls command without any issues. But in the Community Edition of Databricks (Runtime 9.1) I don't seem to be able to do so.

When I try to access the CSV files I just uploaded to DBFS using the command below:

%sh ls /dbfs/FileStore/tables/spark_the_definitive_guide/data/flight-data/csv

I keep getting the below error:

ls: cannot access '/dbfs/FileStore/tables/spark_the_definitive_guide/data/flight-data/csv': No such file or directory

I looked for a solution and came across the suggested workaround of using dbutils.fs.cp(), as below:

dbutils.fs.cp('C:/Users/myusername/Documents/Spark_the_definitive_guide/Spark-The-Definitive-Guide-master/data/flight-data/csv', 'dbfs:/FileStore/tables/spark_the_definitive_guide/data/flight-data/csv')

dbutils.fs.cp('dbfs:/FileStore/tables/spark_the_definitive_guide/data/flight-data/csv/', 'C:/Users/myusername/Documents/Spark_the_definitive_guide/Spark-The-Definitive-Guide-master/data/flight-data/csv/', recurse=True)

Neither of them worked. Both threw the error: java.io.IOException: No FileSystem for scheme: C

This is really blocking me from proceeding with my learning. It would be supercool if someone can help me solve this soon. Thanks in advance.

CodePudding user response:

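I believe you are using it the wrong way. dbutils.fs.cp() runs on the cluster, not on your computer, so it cannot see a Windows path such as C:/Users/..., and it reads the C: prefix as a file-system scheme. Use dbutils like this: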

To list the data:

display(dbutils.fs.ls("/FileStore/tables/spark_the_definitive_guide/data/flight-data/"))
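If you would rather start from the /dbfs/... path used in the %sh command, here is a minimal sketch of converting it to the dbfs:/ form that dbutils.fs.ls accepts. The helper name to_dbfs_uri is hypothetical (it is not part of dbutils), and the listing step only runs inside a Databricks notebook, where dbutils and display are predefined:

```python
def to_dbfs_uri(path: str) -> str:
    """Turn '/dbfs/FileStore/...' or '/FileStore/...' into 'dbfs:/FileStore/...'.

    Hypothetical helper for illustration only; not a Databricks API.
    """
    if path.startswith("dbfs:/"):
        return path  # already a DBFS URI
    if not path.startswith("/"):
        raise ValueError(f"expected an absolute path, got {path!r}")
    if path.startswith("/dbfs/"):
        path = path[len("/dbfs"):]  # drop the local mount prefix, keep the leading '/'
    return "dbfs:" + path


csv_dir = to_dbfs_uri(
    "/dbfs/FileStore/tables/spark_the_definitive_guide/data/flight-data/csv"
)

try:
    dbutils  # only defined inside a Databricks notebook
except NameError:
    print("Not running in a Databricks notebook; would list:", csv_dir)
else:
    display(dbutils.fs.ls(csv_dir))
```

Outside a notebook the snippet only prints the converted path, so you can check the conversion before running it on the cluster.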

To copy between Databricks directories:

dbutils.fs.cp("/FileStore/jars/d004b203_4168_406a_89fc_50b7897b4aa6/databricksutils-1.3.0-py3-none-any.whl","/FileStore/tables/new.whl")

To copy from your local machine you need the premium version, where you can create a token and configure the databricks-cli to send files from your computer to the DBFS of your Databricks account:

databricks fs cp C:/folder/file.csv dbfs:/FileStore/folder
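As for the "No FileSystem for scheme: C" error from the dbutils.fs.cp() calls in the question: a path like C:/Users/... looks like a URI whose scheme is C, and the cluster has no file system registered under that name. The sketch below only illustrates that idea with Python's standard urllib.parse; it is an analogy, not the parser Databricks itself uses, and uri_scheme is a hypothetical helper name:

```python
from urllib.parse import urlparse


def uri_scheme(path: str) -> str:
    """Return the URI scheme a generic parser sees in `path` ('' if none)."""
    return urlparse(path).scheme


# Python lower-cases the scheme; the Java error message shows it as 'C'.
print(uri_scheme("C:/Users/myusername/Documents"))  # c
print(uri_scheme("dbfs:/FileStore/tables"))         # dbfs
print(repr(uri_scheme("/FileStore/tables")))        # ''
```

A path with the dbfs: scheme, or with no scheme at all, is resolved on the cluster, which is why the dbutils examples above work while the C:/ paths do not.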