I am working on a binary classification problem using a random forest, and I am using the LIME explainer to explain its predictions.

I used the code below to generate LIME explanations:

```python
import lime
import lime.lime_tabular

explainer = lime.lime_tabular.LimeTabularExplainer(
    ord_train_t.values,
    discretize_continuous=True,
    feature_names=feat_names,
    mode="classification",
    feature_selection="lasso_path",
    class_names=rf_boruta.classes_,
    categorical_names=output,
    kernel_width=10,
    verbose=True,
)

i = 969

exp = explainer.explain_instance(
    ord_test_t.iloc[1, :],
    rf_boruta.predict_proba,
    distance_metric="euclidean",
    num_features=5,
)
```

I got output like the following:

```
Intercept 0.29625037124439896
Prediction_local [0.46168824]
Right:0.6911888737552843
```

However, this is only printed as a message on the screen.

How can I get this information into a DataFrame?

Answer:

LIME doesn't have a built-in export to a DataFrame, so the way to go is to append each explanation to a list and then convert the list into a DataFrame.

Depending on how many instances you explain, this can take a long time, since LIME explains each instance separately and calls the model's predict_proba on a fresh set of perturbed samples every time (num_samples=5000 by default).

This is an example I found; the explain_instance call needs to be adjusted to your model's arguments, but the logic is the same:

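```python
import pandas as pd

l = []
for n in range(X_test.shape[0]):  # one explanation per test row
    # Each call fits a separate local surrogate model around row n
    exp = explainer.explain_instance(X_test.values[n], clf.predict_proba, num_features=10)
    # as_list() returns [(feature_description, weight), ...] for label 1 by default
    a = exp.as_list()
    l.append(a)

df = pd.DataFrame(l)
```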

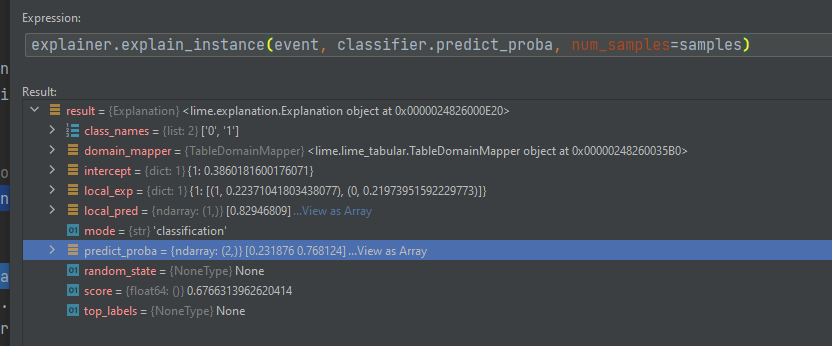
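Note that pd.DataFrame(l) puts each (feature, weight) tuple into its own cell, which is awkward to filter or aggregate. A minimal sketch of an alternative, assuming each exp behaves like LIME's Explanation object (only its as_list() method is used), flattens the explanations into a long table with one row per instance and feature. The helper name explanations_to_long_df is hypothetical:

```python
import pandas as pd


def explanations_to_long_df(explanations, label=1):
    """Flatten LIME explanations into one row per (instance, feature).

    Hypothetical helper: assumes each item has an as_list(label=...) method
    returning [(feature_description, weight), ...], as LIME's Explanation does.
    """
    rows = []
    for instance_idx, exp in enumerate(explanations):
        for feature, weight in exp.as_list(label=label):
            rows.append({"instance": instance_idx, "feature": feature, "weight": weight})
    # Passing columns keeps the schema even when no explanations are given
    return pd.DataFrame(rows, columns=["instance", "feature", "weight"])
```

For example, `explanations_to_long_df([explainer.explain_instance(row, clf.predict_proba, num_features=10) for row in X_test.values])`. If you prefer one column per feature, `df_long.pivot(index="instance", columns="feature", values="weight")` works, but with discretize_continuous=True the feature descriptions are bin conditions, so the wide table can be sparse.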

If you need more than what as_list() provides, the Explanation object returned by explain_instance holds more data. I ran an example to see what else explain_instance returns.

Instead of using only as_list(), you can append the other values you need to the list it returns:

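```python
a = exp.as_list()
a.append(exp.intercept[1])   # intercept of the local linear model for class 1
a.append(exp.local_pred[1])  # "Prediction_local"; a dict keyed by label in recent LIME versions
l.append(a)
```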

Using this approach you can get the intercept (exp.intercept) and the Prediction_local value (exp.local_pred). The "Right" value is the model's own predicted probability for the explained class, which the Explanation object stores in exp.predict_proba.
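A minimal sketch of collecting the three printed values into one DataFrame row per instance. It assumes a recent LIME release in which exp.intercept and exp.local_pred are dicts keyed by class label and exp.predict_proba holds the model's probabilities for the explained row; older releases may store local_pred as a plain value instead. The helper names explanation_summary and build_summary_df are hypothetical:

```python
import pandas as pd


def explanation_summary(exp, label=1):
    """Return the values LIME prints with verbose=True as a dict.

    Hypothetical helper; assumes intercept/local_pred are dicts keyed by label.
    """
    return {
        "intercept": float(exp.intercept[label]),             # printed as "Intercept"
        "prediction_local": float(exp.local_pred[label][0]),  # printed as "Prediction_local"
        "right": float(exp.predict_proba[label]),             # printed as "Right:"
    }


def build_summary_df(explainer, X, predict_proba, label=1, **explain_kwargs):
    """Explain every row of DataFrame X; return one summary row per instance.

    explain_kwargs are forwarded to explain_instance (e.g. num_features=5).
    """
    rows = []
    for row in X.values:
        exp = explainer.explain_instance(row, predict_proba, **explain_kwargs)
        rows.append(explanation_summary(exp, label=label))
    # Reuse X's index so each summary row lines up with its source row
    return pd.DataFrame(rows, index=X.index)
```

With the question's objects this would be something like `build_summary_df(explainer, ord_test_t, rf_boruta.predict_proba, num_features=5)`. label defaults to 1 to match LIME's default labels=(1,); to summarize another class k, pass both label=k and labels=(k,).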

Set a breakpoint in your code and inspect the returned exp object (for example with vars(exp)); there may be other information you want to save as well.