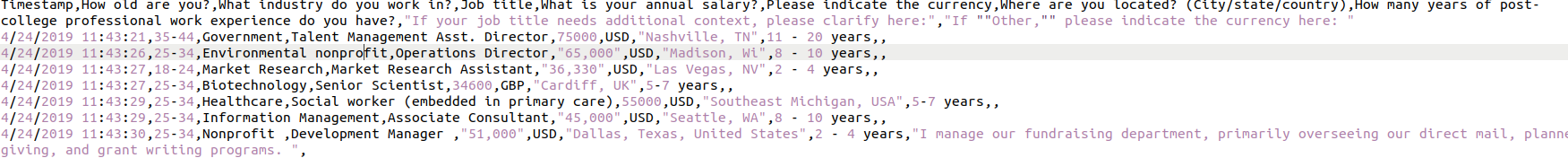

I have a CSV dataset that looks like the below:

Also, PFB data in form of text:

Timestamp,How old are you?,What industry do you work in?,Job title,What is your annual salary?,Please indicate the currency,Where are you located? (City/state/country),How many years of post-college professional work experience do you have?,"If your job title needs additional context, please clarify here:","If ""Other,"" please indicate the currency here: "

4/24/2019 11:43:21,35-44,Government,Talent Management Asst. Director,75000,USD,"Nashville, TN",11 - 20 years,,

4/24/2019 11:43:26,25-34,Environmental nonprofit,Operations Director,"65,000",USD,"Madison, Wi",8 - 10 years,,

4/24/2019 11:43:27,18-24,Market Research,Market Research Assistant,"36,330",USD,"Las Vegas, NV",2 - 4 years,,

4/24/2019 11:43:27,25-34,Biotechnology,Senior Scientist,34600,GBP,"Cardiff, UK",5-7 years,,

4/24/2019 11:43:29,25-34,Healthcare,Social worker (embedded in primary care),55000,USD,"Southeast Michigan, USA",5-7 years,,

4/24/2019 11:43:29,25-34,Information Management,Associate Consultant,"45,000",USD,"Seattle, WA",8 - 10 years,,

4/24/2019 11:43:30,25-34,Nonprofit ,Development Manager ,"51,000",USD,"Dallas, Texas, United States",2 - 4 years,"I manage our fundraising department, primarily overseeing our direct mail, planned giving, and grant writing programs. ",

4/24/2019 11:43:30,25-34,Higher Education,Student Records Coordinator,"54,371",USD,Philadelphia,8 - 10 years,equivalent to Assistant Registrar,

4/25/2019 8:35:51,25-34,Marketing,Associate Product Manager,"43,000",USD,"Cincinnati, OH, USA",5-7 years,"I started as the Marketing Coordinator, and was given the ""Associate Product Manager"" title as a promotion. My duties remained mostly the same and include graphic design work, marketing, and product management.",

Now, I tried the below code to load the data:

df = spark.read.option("header", "true").option("multiline", "true").option(

"delimiter", ",").csv("path")

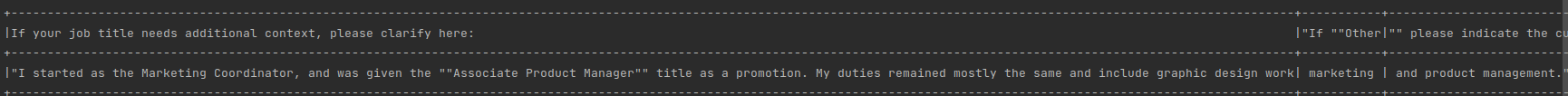

It gives me the output as below for the last record which divides the columns and also the output is not as expected:

The value should be null for the last column i.e "If ""Other,"" please indicate the currency here: " and the entire string should be wrapped up in the earlier column which is "If your job title needs additional context, please clarify here:"

I also tried .option('quote','/"').option('escape','/"') but didn't work too.

However, when I tried to load this file using Pandas, it was loaded correctly. I was surprised how Pandas can identify where the new column name starts and all. Maybe I can define a String schema for all the columns and load it back to the spark data frame but since I am using the lower spark version it won't work in a distributed manner hence I was exploring a way how Spark can handle this efficiently.

Any help is much appreciated.

CodePudding user response:

I would suggest to read the file as text.

- Data cleanse using Regex

logLine = sc.textFile("C:\TestLogs\Hospital.log")

logLine_Filtered = logLine.filter(lambda x: "LOG_PATTERN" in x)

logLine_output = logLine_Filtered(lambda a: a.split("<delimiter>")[0], a.split("<delimiter>")[1].....).collect()

logLine_output.first()

- Then read it as CSV

CodePudding user response:

Main issue is consecutive double quotes in your csv file. you have to escape extra double quotes in your csv file Like this :

4/24/2019 11:43:30,25-34,Higher Education,Student Records Coordinator,"54,371",USD,Philadelphia,8 - 10 years,equivalent to Assistant Registrar,

4/25/2019 8:35:51,25-34,Marketing,Associate Product Manager,"43,000",USD,"Cincinnati, OH, USA",5-7 years,"I started as the Marketing Coordinator, and was given the \" \ " Associate Product Manager \" \ " title as a promotion. My duties remained mostly the same and include graphic design work, marketing, and product management.",

After this it is generating result as expected :

df2 = spark.read.option("header",True).csv("sample1.csv")

df2.show(10,truncate=False)

******** Output ********

|4/25/2019 8:35:51 |25-34 |Marketing |Associate Product Manager |43,000 |USD |Cincinnati, OH, USA |5-7 years |I started as the Marketing Coordinator, and was given the ""Associate Product Manager"" title as a promotion. My duties remained mostly the same and include graphic design work, marketing, and product management.|null |null |

Or you can use blow code

df2 = spark.read.option("header",True).option("escape","\"").csv("sample1.csv")