I have a dataframe like as shown below

id,Name,country,amount,qty

1,ABC,USA,123,4500

1,ABC,USA,156,3210

1,BCE,USA,687,2137

1,DEF,UK,456,1236

1,ABC,nan,216,324

1,DEF,nan,12678,11241

1,nan,nan,637,213

1,BCE,nan,213,543

1,XYZ,KOREA,432,321

1,XYZ,AUS,231,321

sf = pd.read_clipboard(sep=',')

I would like to do the below

a) Get top 3 based on amount for each id and other selected columns such as Name and country. Meaning, we get top 3 based id and Name first and later, we again get top 3 based on id and country

b) Find out how much does each of the top 3 item contribute to total amount for each unique id.

So, I tried the below

sf_name = sf.groupby(['id','Name'],dropna=False)['amount'].sum().nlargest(3).reset_index().rename(columns={'amount':'Name_amount'})

sf_country = sf.groupby(['id','country'],dropna=False)['amount'].sum().nlargest(3).reset_index().rename(columns={'amount':'country_amount'})

sf_name['total'] = sf.groupby('id')['amount'].sum()

sf_country['total'] = sf.groupby('id')['amount'].sum()

sf_name['name_pct_total'] = (sf_name['Name_amount']/sf_name['total'])*100

sf_country['country_pct_total'] = (sf_country['country_amount']/sf_country['total'])*100

As you can see, I am repeating the same operation for each column.

But in my real dataframe, I have to do this groupby id and find Top3 and compute pct_total % for another 8 columns (along with Name and country)

Is there any efficient, elegant and scalable solution that you can share?

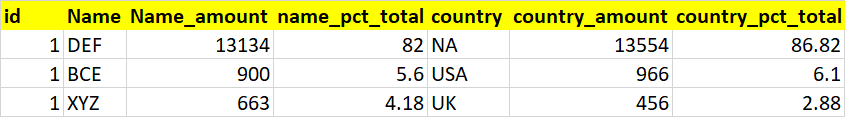

I expect my output to be like as below

update - full error

KeyError Traceback (most recent call last)

C:\Users\Test\AppData\Local\Temp/ipykernel_8720/1850446854.py in <module>

----> 1 df_new.groupby(['unique_key','Resale Customer'],dropna=False)['Revenue Resale EUR'].sum().nlargest(3).reset_index(level=1, name=f'{c}_revenue')

~\Anaconda3\lib\site-packages\pandas\core\series.py in nlargest(self, n, keep)

3834 dtype: int64

3835 """

-> 3836 return algorithms.SelectNSeries(self, n=n, keep=keep).nlargest()

3837

3838 def nsmallest(self, n: int = 5, keep: str = "first") -> Series:

~\Anaconda3\lib\site-packages\pandas\core\algorithms.py in nlargest(self)

1135 @final

1136 def nlargest(self):

-> 1137 return self.compute("nlargest")

1138

1139 @final

~\Anaconda3\lib\site-packages\pandas\core\algorithms.py in compute(self, method)

1181

1182 dropped = self.obj.dropna()

-> 1183 nan_index = self.obj.drop(dropped.index)

1184

1185 if is_extension_array_dtype(dropped.dtype):

~\Anaconda3\lib\site-packages\pandas\util\_decorators.py in wrapper(*args, **kwargs)

309 stacklevel=stacklevel,

310 )

--> 311 return func(*args, **kwargs)

312

313 return wrapper

~\Anaconda3\lib\site-packages\pandas\core\series.py in drop(self, labels, axis, index, columns, level, inplace, errors)

4769 dtype: float64

4770 """

-> 4771 return super().drop(

4772 labels=labels,

4773 axis=axis,

~\Anaconda3\lib\site-packages\pandas\core\generic.py in drop(self, labels, axis, index, columns, level, inplace, errors)

4277 for axis, labels in axes.items():

4278 if labels is not None:

-> 4279 obj = obj._drop_axis(labels, axis, level=level, errors=errors)

4280

4281 if inplace:

~\Anaconda3\lib\site-packages\pandas\core\generic.py in _drop_axis(self, labels, axis, level, errors, consolidate, only_slice)

4321 new_axis = axis.drop(labels, level=level, errors=errors)

4322 else:

-> 4323 new_axis = axis.drop(labels, errors=errors)

4324 indexer = axis.get_indexer(new_axis)

4325

~\Anaconda3\lib\site-packages\pandas\core\indexes\multi.py in drop(self, codes, level, errors)

2234 for level_codes in codes:

2235 try:

-> 2236 loc = self.get_loc(level_codes)

2237 # get_loc returns either an integer, a slice, or a boolean

2238 # mask

~\Anaconda3\lib\site-packages\pandas\core\indexes\multi.py in get_loc(self, key, method)

2880 if keylen == self.nlevels and self.is_unique:

2881 try:

-> 2882 return self._engine.get_loc(key)

2883 except TypeError:

2884 # e.g. test_partial_slicing_with_multiindex partial string slicing

~\Anaconda3\lib\site-packages\pandas\_libs\index.pyx in pandas._libs.index.BaseMultiIndexCodesEngine.get_loc()

~\Anaconda3\lib\site-packages\pandas\_libs\index.pyx in pandas._libs.index.IndexEngine.get_loc()

~\Anaconda3\lib\site-packages\pandas\_libs\index.pyx in pandas._libs.index.IndexEngine.get_loc()

pandas\_libs\hashtable_class_helper.pxi in pandas._libs.hashtable.UInt64HashTable.get_item()

pandas\_libs\hashtable_class_helper.pxi in pandas._libs.hashtable.UInt64HashTable.get_item()

KeyError: 8937472

CodePudding user response:

Simpliest is use loop by columnsnames in list, for pct_amount use GroupBy.transform with sum per id and divide amount column:

dfs = []

cols = ['Name','country']

for c in cols:

df = (sf.groupby(['id',c],dropna=False)['amount'].sum()

.nlargest(3)

.reset_index(level=1, name=f'{c}_amount'))

df[f'{c}_pct_total']=(df[f'{c}_amount'].div(df.groupby('id',dropna=False)[f'{c}_amount']

.transform('sum'))*100)

dfs.append(df)

df = pd.concat(dfs, axis=1)

print (df)

Name Name_amount Name_pct_total country country_amount \

id

1 DEF 13134 89.365177 NaN 13744

1 BCE 900 6.123699 USA 966

1 XYZ 663 4.511125 UK 456

country_pct_total

id

1 90.623764

1 6.369511

1 3.006726

Testing with Resale Customer column name::

print (sf)

id Resale Customer country amount qty

0 1 ABC USA 123 4500

1 1 ABC USA 156 3210

2 1 BCE USA 687 2137

3 1 DEF UK 456 1236

4 1 ABC NaN 216 324

5 1 DEF NaN 12678 11241

6 1 NaN NaN 637 213

7 1 BCE NaN 213 543

8 1 XYZ KOREA 432 321

9 1 XYZ AUS 231 321

Test columns names:

print (sf.columns)

Index(['id', 'Resale Customer', 'country', 'amount', 'qty'], dtype='object')

dfs = []

cols = ['Resale Customer','country']

for c in cols:

df = (sf.groupby(['id',c],dropna=False)['amount'].sum()

.nlargest(3)

.reset_index(level=1, name=f'{c}_amount'))

df[f'{c}_pct_total']=(df[f'{c}_amount'].div(df.groupby('id',dropna=False)[f'{c}_amount']

.transform('sum'))*100)

dfs.append(df)

df = pd.concat(dfs, axis=1)

print (df)

Resale Customer Resale Customer_amount Resale Customer_pct_total country \

id

1 DEF 13134 89.365177 NaN

1 BCE 900 6.123699 USA

1 XYZ 663 4.511125 UK

country_amount country_pct_total

id

1 13744 90.623764

1 966 6.369511

1 456 3.006726

Solution with melt is possible, but more complicated:

df = sf.melt(id_vars=['id', 'amount'], value_vars=['Name','country'])

df = (df.groupby(['id','variable', 'value'],dropna=False)['amount']

.sum()

.sort_values(ascending=False)

.groupby(level=[0,1],dropna=False)

.head(3)

.to_frame()

.assign(pct_total=lambda x: x['amount'].div(x.groupby(level=[0,1],dropna=False)['amount'].transform('sum')).mul(100),

g=lambda x: x.groupby(level=[0,1],dropna=False).cumcount())

.set_index('g', append=True)

.reset_index('value')

.unstack(1)

.sort_index(level=1, axis=1)

.droplevel(1)

)

df.columns = df.columns.map(lambda x: f'{x[1]}_{x[0]}')

print (df)

Name_amount Name_pct_total Name_value country_amount country_pct_total \

id

1 13134 89.365177 DEF 13744 90.623764

1 900 6.123699 BCE 966 6.369511

1 663 4.511125 XYZ 456 3.006726

country_value

id

1 NaN

1 USA

1 UK