I'm new to ML and would like to know what I'm missing or doing incorrectly.

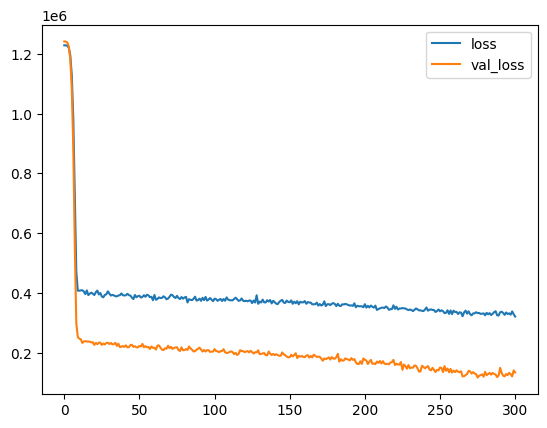

I'm trying to figure out why my model underfits the data when I apply early stopping and dropout, whereas when I don't use early stopping or dropout the fit seems to be okay...

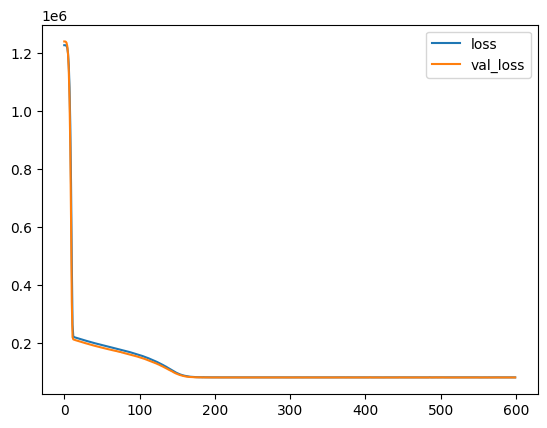

The data looks underfit with early stopping and dropout combined:

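    # Imports (not shown in the original post; assumed)
    from keras.models import Sequential
    from keras.layers import Dense, Dropout
    from keras.callbacks import EarlyStopping

    # Four hidden layers of 10 ReLU units, each followed by 20% dropout
    model = Sequential()
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(1))

    # Stop once val_loss has not improved for 25 consecutive epochs
    early_stopping = EarlyStopping(monitor='val_loss', mode='min', patience=25)

    model.compile(optimizer='adam', loss='mae')

    model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=600, callbacks=[early_stopping])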

I'm trying to figure out why early stopping would stop training when the results are still so far off. I expected the model to keep training until all 600 epochs had run, but early stopping pulls the plug at around epoch 300.

I'm probably doing something wrong, but I can't figure it out, so any insights would be appreciated. Thank you in advance :)

User response:

With `EarlyStopping`, you define the performance measure to watch (`monitor`) and specify whether it should be maximized or minimized (`mode`).

Keras then stops training at the appropriate epoch, i.e. once that measure stops improving. When `verbose=1` is set, Keras prints a message to the screen when training is stopped.

    es = EarlyStopping(monitor='val_loss', mode='min')

Stopping as soon as performance fails to improve for a single epoch may be premature, since it can improve again a few epochs later. `patience` defines how many consecutive epochs without improvement are allowed before training stops. Choosing `patience` is rather subjective; the optimal value depends on your data and on the design of your model.

    es = EarlyStopping(monitor='val_loss', mode='min', verbose=1, patience=50)
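To make the `monitor`/`mode`/`patience` rule concrete, here is a simplified pure-Python re-implementation of the stopping check. It is a sketch, not Keras's actual code: the function name `early_stop_epoch` and the loss values are made up for illustration, and it ignores other `EarlyStopping` options such as `min_delta` and `baseline`.

```python
def early_stop_epoch(history, mode="min", patience=1):
    """Simplified early-stopping rule (illustrative sketch, not Keras's source).

    history:  one value of the monitored metric per epoch (e.g. val_loss).
    mode:     "min" if smaller is better (losses), "max" if larger is better.
    patience: consecutive epochs without improvement tolerated before stopping.
    Returns the 1-based epoch at which training stops, or None if it never stops.
    """
    best = None
    wait = 0
    for epoch, value in enumerate(history, start=1):
        improved = best is None or (value < best if mode == "min" else value > best)
        if improved:
            best = value
            wait = 0  # any improvement resets the counter
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


val_loss = [0.90, 0.70, 0.60, 0.62, 0.61, 0.63, 0.64]
print(early_stop_epoch(val_loss, mode="min", patience=1))   # 4
print(early_stop_epoch(val_loss, mode="min", patience=3))   # 6
print(early_stop_epoch(val_loss, mode="min", patience=10))  # None
```

A larger `patience` lets training ride out short plateaus at the cost of running more epochs past the best one.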

Model selection: when training is stopped by the `EarlyStopping` object, the model at that point will generally have a higher validation error than an earlier one, because the last `patience` epochs, by definition, did not improve on the best epoch. So early stopping halts training once the validation error stops decreasing, but the stopped state is not the best model. You therefore need to store the model with the best validation performance, and Keras provides the `ModelCheckpoint` callback for this. With `save_best_only=True`, it monitors the validation error and saves the model whenever the validation performance is better than the best seen in any previous epoch. This way, when training stops, you can retrieve the model with the best validation performance.

    from keras.callbacks import ModelCheckpoint

    mc = ModelCheckpoint('best_model.h5', monitor='val_loss', mode='min', save_best_only=True)

Then pass `mc` in the `callbacks` parameter of `fit()`, together with the early-stopping callback, allowing the best model to be stored:

    # `nb_epoch` is the old Keras 1 name; current Keras uses `epochs`
    hist = model.fit(train_x, train_y, epochs=10,
                     batch_size=10, verbose=2, validation_split=0.2,
                     callbacks=[early_stopping, mc])
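The gap between the stopped state and the saved best state can be simulated in plain Python. Assuming `mode='min'` and made-up `val_loss` values, the hypothetical helper `checkpoint_and_stop` below records which epochs a `save_best_only=True` checkpoint would write (only epochs that beat the best value so far) and the epoch at which a patience-based stop happens. It mirrors the idea, not Keras's source.

```python
def checkpoint_and_stop(val_losses, patience):
    """Simulate ModelCheckpoint(save_best_only=True) plus EarlyStopping, mode='min'.

    Returns (saved_epochs, stopped_epoch), both 1-based;
    stopped_epoch is None if training never stops early.
    """
    best = float("inf")
    wait = 0
    saved_epochs = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            wait = 0
            # The checkpoint file is overwritten with this epoch's model
            saved_epochs.append(epoch)
        else:
            wait += 1
            if wait >= patience:
                return saved_epochs, epoch
    return saved_epochs, None


val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.63, 0.64]
saved, stopped = checkpoint_and_stop(val_losses, patience=3)
print(saved)    # [1, 2, 3]
print(stopped)  # 6
# The file on disk holds epoch 3 (val_loss 0.60), while the model
# left in memory comes from epoch 6 (val_loss 0.63).
```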

In your case, `patience=25` means training ends once the monitored value (`val_loss`) has failed to improve for 25 consecutive epochs.
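Written as arithmetic (assuming the default `min_delta=0`, so any decrease in `val_loss` counts as an improvement), the stopping epoch is the last improving epoch plus `patience`. With the numbers from the question, a stop around epoch 300 suggests that `val_loss` last improved around epoch 275; `epochs=600` is only an upper limit, not a target that training must reach.

$$
e_{\text{stop}} = e_{\text{best}} + \text{patience}
\quad\Longrightarrow\quad
e_{\text{best}} \approx 300 - 25 = 275
$$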

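    from keras.models import Sequential, load_model
    from keras.layers import Dense, Dropout
    from keras.callbacks import EarlyStopping, ModelCheckpoint

    model = Sequential()
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(10, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(1))

    # Stop after 25 epochs without val_loss improvement; print a message when it happens
    early_stopping = EarlyStopping(monitor='val_loss', mode='min', patience=25, verbose=1)
    # Save the model to disk only when val_loss beats the best value seen so far
    mc = ModelCheckpoint('best_model.h5', monitor='val_loss', mode='min', save_best_only=True)

    model.compile(optimizer='adam', loss='mae')

    model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=600, callbacks=[early_stopping, mc])

    # Reload the checkpointed model with the lowest val_loss
    best_model = load_model('best_model.h5')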

User response:

I recommend two things. First, in the early-stopping callback, set the parameter `restore_best_weights=True`.

This way, if the early-stopping callback activates, your model is reset to the weights from the epoch with the lowest validation loss. Second, to get a lower validation loss, I recommend using the `ReduceLROnPlateau` callback. My recommended code for these callbacks is shown below.

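    import tensorflow as tf

    # Stop after 4 epochs without val_loss improvement and roll back to the best weights
    estop = tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=4,
                                             verbose=1, restore_best_weights=True)

    # Halve the learning rate after 2 epochs without val_loss improvement
    rlronp = tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.5,
                                                  patience=2, verbose=1)

    callbacks = [estop, rlronp]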

In `model.fit`, set the parameter `callbacks=callbacks`. Set `epochs` to a large number so that the `estop` callback is likely to be activated.
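To see what `factor=0.5, patience=2` does to the learning rate, here is a simplified pure-Python sketch of the `ReduceLROnPlateau` rule (not Keras's source). It assumes `mode='min'` and the default `cooldown=0`, and it ignores `min_delta` and `min_lr`; the helper name `lr_schedule`, the starting rate of 0.001 (the Keras default for Adam) and the loss values are illustrative.

```python
def lr_schedule(val_losses, initial_lr=0.001, factor=0.5, patience=2):
    """Simplified ReduceLROnPlateau (mode='min', cooldown=0, no min_delta/min_lr).

    Returns the learning rate in effect after each epoch.
    """
    lr = initial_lr
    best = float("inf")
    wait = 0
    lrs = []
    for loss in val_losses:
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr *= factor  # factor=0.5 halves the learning rate
                wait = 0      # the counter restarts after each reduction
        lrs.append(lr)
    return lrs


val_losses = [0.90, 0.70, 0.72, 0.71, 0.69, 0.70, 0.70]
print(lr_schedule(val_losses))
# [0.001, 0.001, 0.001, 0.0005, 0.0005, 0.0005, 0.00025]
```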