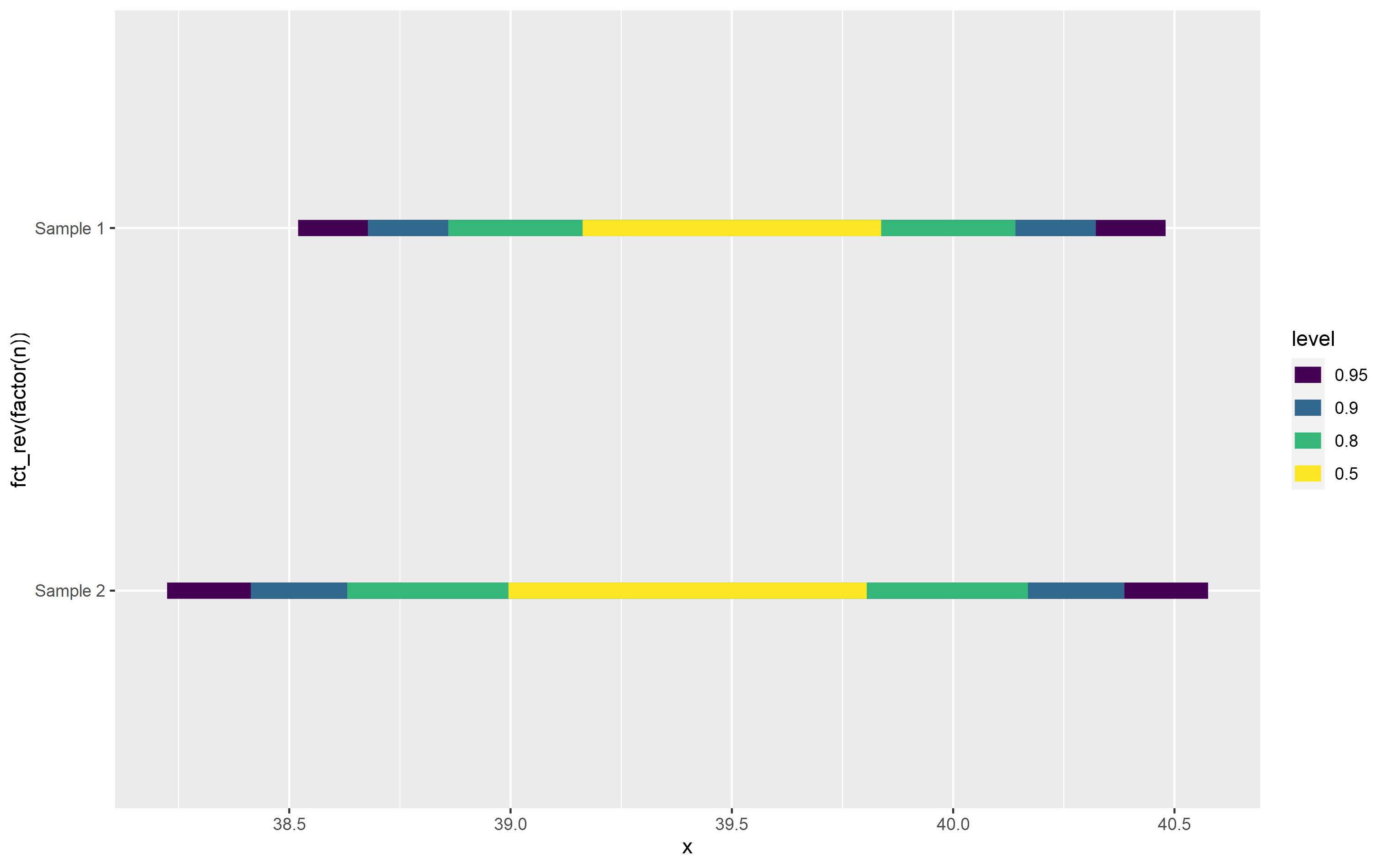

I'm using ggdist (which is awesome) to show variability within a sample. I have a series of means, SDs, and standard errors, and I want to use the stat_interval() function to show the 50%, 80%, 90%, and 95% confidence intervals of these samples. The function seems to be calculating something different, because the plotted intervals are much wider than the confidence intervals I calculate manually. Does anyone know what I'm doing wrong, or what the function is actually doing?

The 95% CI of Sample 1 in the attached plot should run from 39.38 to 39.62, but it's being plotted much wider (nearly 38.5 to 40.5, see image).

Reprex ↓

    library(tidyverse)
    library(ggdist)

    data <- tibble::tribble(
      ~n,         ~mean, ~SD, ~se,
      "Sample 1", 39.5,  0.5, 0.0625,
      "Sample 2", 39.4,  0.6, 0.0750
    )

    data |>
      ggplot(aes(xdist = dist_normal(mean, SD), y = fct_rev(factor(n)))) +
      stat_interval(.width = c(.5, .8, .9, .95), position = "dodge")

CodePudding user response:

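I believe the ranges being plotted are simply the quantiles of the distribution you passed in, dist_normal(mean, SD), not confidence intervals for the mean. They describe the spread of individual observations, so they are governed by the SD rather than the standard error. e.g.: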

    > quantile(rnorm(10000, 39.5, 0.5), c(0.025, 0.975))
        2.5%    97.5%
    38.49979 40.46578
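The same comparison can be made without simulation. This is a minimal base R sketch using Sample 1's numbers from the question; the variable names are only illustrative. It contrasts the central 95% range of Normal(mean, SD) with a normal-approximation interval built from the standard error.

```r
# Sample 1 values taken from the question
m  <- 39.5
sd <- 0.5
se <- 0.0625

probs <- c(0.025, 0.975)

# Central 95% range of the distribution of observations (what gets plotted
# when the distribution is dist_normal(mean, SD))
spread_interval <- qnorm(probs, mean = m, sd = sd)

# Normal-approximation 95% interval for the mean, using the standard error
mean_interval <- qnorm(probs, mean = m, sd = se)

print(round(spread_interval, 2))  # roughly 38.52 to 40.48
print(round(mean_interval, 2))    # roughly 39.38 to 39.62
```

The first interval matches what the plot shows, and the second matches the manually calculated interval from the question.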

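For confidence intervals of the mean, I think you are looking for a Student's t distribution centred on the mean and scaled by the standard error, with n - 1 degrees of freedom. In your data SD / se = 8 for both samples, so n = 64 and df = 63. e.g.: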

    data %>%
      ggplot(aes(xdist = distributional::dist_student_t(63, mean, se), y = fct_rev(factor(n)))) +
      stat_interval(.width = c(.5, .8, .9, .95), position = "dodge")
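To sanity-check the intervals numerically, here is a rough base R sketch that recovers the sample size from SD and se and then computes the t-based 95% interval by hand. It assumes se was computed as SD / sqrt(n); the data frame name `ci` and its column names are only illustrative.

```r
# Values copied from the question's data
ci <- data.frame(
  n    = c("Sample 1", "Sample 2"),
  mean = c(39.5, 39.4),
  SD   = c(0.5, 0.6),
  se   = c(0.0625, 0.0750)
)

# Assumption: se = SD / sqrt(n), so n = (SD / se)^2
ci$size <- round((ci$SD / ci$se)^2)
ci$df   <- ci$size - 1

# Two-sided 95% interval for the mean: mean +/- t * se
t_crit   <- qt(0.975, df = ci$df)
ci$lower <- ci$mean - t_crit * ci$se
ci$upper <- ci$mean + t_crit * ci$se

print(ci)
```

For Sample 1 this gives bounds close to 39.38 and 39.62, in line with the manual calculation in the question.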