Approach 1 :

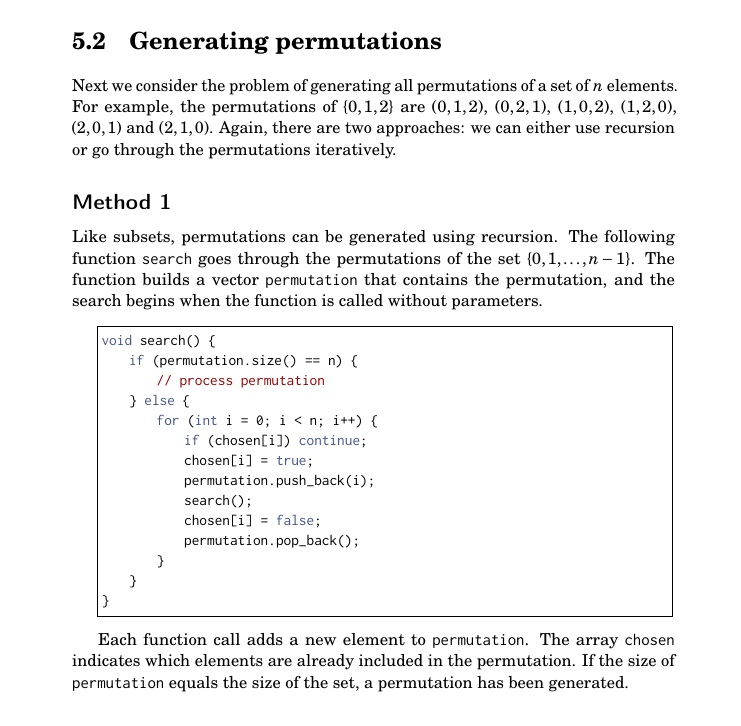

The difference between this code and approach 1 is that we're doing some wasteful work everytime (when chosen[i] is true, we continue instead of moving down the recursion tree). I think, the recurrence relation here should be T(N) = N * T(N-1) N * c as we can divide the for loop into 2 parts : one where we do constant work in each iteration (N * c) and one where we make recursive calls (N * T(N-1)). Then for T(N-1) = (N-1) * T(N-2) N*c (note that we're still paying N * c cost here) and so on. At the end, we'll have a term of N^N left and that will dominate O(N!) for large N. So does this mean that the time complexity of 2nd code snippet is O(N^N) and not O(N!)?

Relation question : For N queens problem, we have a backtracking solution (can refer https://dev.to/chengmuy/optimizing-an-n-queens-solution-3m1m) but here, we have a for loop which checks whether or not the current state is safe (in O(1) average time) and makes recursive calls accordingly. Unless I'm wrong, the time complexity of this backtracking solution is also O(N!). But, I'm confused how we factor in the cost of early terminated branches in O(N!) i.e. basically the cost of having to check all the rows for current column (or columns for current row, depending on implementation) which are safe every time.

CodePudding user response:

In both algorithms, the recursive function is entered once for every prefix of a permutation of {1, …, n}. In the first algorithm, we can imagine the work done by each iteration of the for loop being accounted for inside the recursive call, since there will be a recursive call for every iteration. So the total amount of work is proportional to the number of possible prefixes. Since they are not proper prefixes, this includes all the permutations; so there are more than n! prefixes in all. In fact, the number of prefixes of length n-1 is also n!, so there are more than 2(n!) prefixes, but the remaining terms drop off pretty rapidly. It turns out that there are asymptotically e(n!) prefixes, where e is Euler's constant, approximately 2.71828. So that's O(n!), since we can ignore constant factors.

Now what about the second algorithm? We have the same number of recursive calls but each recursive call which does not correspond to a complete permutation requires a scan over the chosen array, which has n elements. That adds n × (e - 1) × n!. In effect, it changes the asymptote to O((n 1)!), which is bigger than O(n!). But not as much as O(nn).