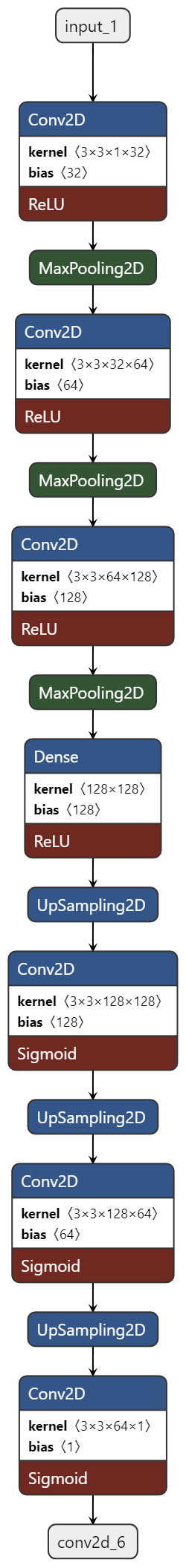

Background information: I am trying to build a model (I am a beginner, so please excuse my ignorance). The architecture I am trying to convert into code is shown in the image above.

This is the code I came up with. I am using Binder to run the code.

    import os
    import torch
    import torchvision
    import tarfile
    from torchvision.datasets.utils import download_url
    from torch.utils.data import random_split
    from torchsummary import summary

    # Implementation of CNN/ConvNet Model
    class build_unet(torch.nn.Module):
        def __init__(self):
            super(build_unet, self).__init__()
            keep_prob = 0.5
            self.layer1 = torch.nn.Sequential(
                torch.nn.Conv2d(3, 32, kernel_size=3),
                torch.nn.ReLU(),
                torch.nn.MaxPool2d(kernel_size=2, padding=1))
            self.layer2 = torch.nn.Sequential(
                torch.nn.Conv2d(32, 64, kernel_size=3),
                torch.nn.ReLU(),
                torch.nn.MaxPool2d(kernel_size=2, padding=1))
            self.layer3 = torch.nn.Sequential(
                torch.nn.Conv2d(64, 128, kernel_size=3),
                torch.nn.ReLU(),
                torch.nn.MaxPool2d(kernel_size=2, padding=1))
            self.dense = torch.nn.Linear(64, 128, bias=True)
            torch.nn.init.xavier_uniform_(self.dense.weight)
            self.layer4 = torch.nn.Sequential(
                self.dense,
                torch.nn.ReLU(),
                torch.nn.Upsample()
            )
            self.layer5 = torch.nn.Sequential(
                torch.nn.Conv2d(128, 128, kernel_size=3),
                torch.nn.Sigmoid(),
                torch.nn.Upsample()
            )
            self.layer6 = torch.nn.Sequential(
                torch.nn.Conv2d(128, 64, kernel_size=3),
                torch.nn.Sigmoid(),
                torch.nn.Upsample()
            )
            self.layer7 = torch.nn.Sequential(
                torch.nn.Conv2d(64, 1, kernel_size=3),
                torch.nn.Sigmoid()
            )

        def forward(self, x):
            out = self.layer1(x)
            out = self.layer2(out)
            out = self.layer3(out)
            out = self.layer4(out)
            out = self.layer5(out)
            out = self.layer6(out)
            out = self.layer7(out)
            return out

    if __name__ == "__main__":
        x = torch.randn((2, 3, 512, 512))
        f = build_unet()
        y = f(x)
        print(y.shape)

How would I resolve this error?

ERROR MESSAGE

    ---------------------------------------------------------------------------
    ValueError                                Traceback (most recent call last)
    /tmp/ipykernel_36/1438699785.py in <module>
         87     x = torch.randn((2, 3, 512, 512))
         88     f = build_unet()
    ---> 89     y = f(x)
         90     print(y.shape)

    /opt/conda/lib/python3.9/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
       1049         if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
       1050                 or _global_forward_hooks or _global_forward_pre_hooks):
    -> 1051             return forward_call(*input, **kwargs)
       1052         # Do not call functions when jit is used
       1053         full_backward_hooks, non_full_backward_hooks = [], []

    /tmp/ipykernel_36/1438699785.py in forward(self, x)
         72         out = self.layer3(out)
         73
    ---> 74         out = self.layer4(out)
         75         out = self.layer5(out)
         76         out = self.layer6(out)

    /opt/conda/lib/python3.9/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
       1049         if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
       1050                 or _global_forward_hooks or _global_forward_pre_hooks):
    -> 1051             return forward_call(*input, **kwargs)
       1052         # Do not call functions when jit is used
       1053         full_backward_hooks, non_full_backward_hooks = [], []

    /opt/conda/lib/python3.9/site-packages/torch/nn/modules/container.py in forward(self, input)
        137     def forward(self, input):
        138         for module in self:
    --> 139             input = module(input)
        140         return input
        141

    /opt/conda/lib/python3.9/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
       1049         if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
       1050                 or _global_forward_hooks or _global_forward_pre_hooks):
    -> 1051             return forward_call(*input, **kwargs)
       1052         # Do not call functions when jit is used
       1053         full_backward_hooks, non_full_backward_hooks = [], []

    /opt/conda/lib/python3.9/site-packages/torch/nn/modules/upsampling.py in forward(self, input)
        139
        140     def forward(self, input: Tensor) -> Tensor:
    --> 141         return F.interpolate(input, self.size, self.scale_factor, self.mode, self.align_corners)
        142
        143     def extra_repr(self) -> str:

    /opt/conda/lib/python3.9/site-packages/torch/nn/functional.py in interpolate(input, size, scale_factor, mode, align_corners, recompute_scale_factor)
       3647             scale_factors = [scale_factor for _ in range(dim)]
       3648         else:
    -> 3649             raise ValueError("either size or scale_factor should be defined")
       3650
       3651     if recompute_scale_factor is None:

    ValueError: either size or scale_factor should be defined

Answer:

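nn.Upsample() takes the following parameters: size, scale_factor, mode, and align_corners. By default, size=None, scale_factor=None, mode='nearest', and align_corners=None. You have to set one of size or scale_factor yourself; your code calls nn.Upsample() with neither, so F.interpolate raises the ValueError at the end of your traceback.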

torch.nn.Upsample(size=None, scale_factor=None, mode='nearest', align_corners=None)
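As a small illustration of that signature, here is a minimal sketch on a dummy tensor. The tensor shape and the variable names (x, up_scale, up_size) are just assumptions for the demo and are not taken from your model. It reproduces the error with a bare nn.Upsample() and then shows the two ways of specifying the output size.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 3, 8, 8)  # dummy input: (batch, channels, height, width)

# With neither size nor scale_factor set, the forward pass fails.
try:
    nn.Upsample()(x)
except ValueError as err:
    print("ValueError:", err)

# scale_factor multiplies each spatial dimension.
up_scale = nn.Upsample(scale_factor=2, mode="nearest")
print(up_scale(x).shape)  # torch.Size([1, 3, 16, 16])

# size sets the output spatial dimensions directly.
up_size = nn.Upsample(size=(20, 20), mode="nearest")
print(up_size(x).shape)  # torch.Size([1, 3, 20, 20])
```

Which of the two you want depends on the architecture: scale_factor keeps the layer independent of the input resolution, while size pins the output to a fixed shape.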

With scale_factor=2 set on every nn.Upsample layer, the model runs and produces the following result:

    import torch
    import torch.nn as nn

    class Net(torch.nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            keep_prob = 0.5
            # Encoder: each block is conv -> ReLU -> max-pool.
            self.layer1 = nn.Sequential(
                nn.Conv2d(3, 32, kernel_size=3),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, padding=1))
            self.layer2 = nn.Sequential(
                nn.Conv2d(32, 64, kernel_size=3),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, padding=1))
            self.layer3 = nn.Sequential(
                nn.Conv2d(64, 128, kernel_size=3),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, padding=1))
            self.dense = nn.Linear(64, 128, bias=True)
            nn.init.xavier_uniform_(self.dense.weight)
            # Decoder: every nn.Upsample now gets scale_factor=2.
            self.layer4 = nn.Sequential(
                self.dense,
                nn.ReLU(),
                nn.Upsample(scale_factor=2)
            )
            self.layer5 = nn.Sequential(
                nn.Conv2d(128, 128, kernel_size=3),
                nn.Sigmoid(),
                nn.Upsample(scale_factor=2)
            )
            self.layer6 = nn.Sequential(
                nn.Conv2d(128, 64, kernel_size=3),
                nn.Sigmoid(),
                nn.Upsample(scale_factor=2)
            )
            self.layer7 = nn.Sequential(
                nn.Conv2d(64, 1, kernel_size=3),
                nn.Sigmoid()
            )

        def forward(self, x):
            out = self.layer1(x)
            out = self.layer2(out)
            out = self.layer3(out)
            out = self.layer4(out)
            out = self.layer5(out)
            out = self.layer6(out)
            out = self.layer7(out)
            return out

    if __name__ == "__main__":
        x = torch.randn((2, 3, 512, 512))
        f = Net()
        y = f(x)
        print(y.shape)

Result:

torch.Size([2, 1, 498, 1010])
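Note that the output is not square even though the input is. If you want to see where that comes from, a rough sketch like the one below prints the shape after each stage. It rebuilds the same layers in a list rather than a module; the helper names conv_pool and conv_up, the stages list, and the shapes dict are my own for this illustration. It skips the Xavier initialisation (which does not affect shapes) and uses a batch of 1 to keep memory low.

```python
import torch
import torch.nn as nn

def conv_pool(c_in, c_out):
    # Hypothetical helper: unpadded 3x3 conv, ReLU, then padded 2x2 max-pool.
    return nn.Sequential(nn.Conv2d(c_in, c_out, kernel_size=3), nn.ReLU(),
                         nn.MaxPool2d(kernel_size=2, padding=1))

def conv_up(c_in, c_out):
    # Hypothetical helper: unpadded 3x3 conv, sigmoid, then 2x upsampling.
    return nn.Sequential(nn.Conv2d(c_in, c_out, kernel_size=3), nn.Sigmoid(),
                         nn.Upsample(scale_factor=2))

# Same layer configuration as in Net above, kept in order.
stages = [
    ("layer1", conv_pool(3, 32)),
    ("layer2", conv_pool(32, 64)),
    ("layer3", conv_pool(64, 128)),
    ("layer4", nn.Sequential(nn.Linear(64, 128), nn.ReLU(),
                             nn.Upsample(scale_factor=2))),
    ("layer5", conv_up(128, 128)),
    ("layer6", conv_up(128, 64)),
    ("layer7", nn.Sequential(nn.Conv2d(64, 1, kernel_size=3), nn.Sigmoid())),
]

shapes = {}
out = torch.randn(1, 3, 512, 512)  # batch of 1 instead of 2 to save memory
with torch.no_grad():
    for name, stage in stages:
        out = stage(out)
        shapes[name] = tuple(out.shape)
        print(name, shapes[name])
```

After layer3 the tensor is (1, 128, 64, 64). nn.Linear(64, 128) is applied to the last dimension only, so it turns the width of 64 into 128 and leaves the height at 64; that is where the 1:2 aspect ratio of the final (498, 1010) output starts. It also means the Linear layer only fits because the width happens to be 64 for a 512x512 input, so a different input size would likely fail at that layer.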