When training a neural network, if the same module is used multiple times in one iteration, does the gradient of the module need special processing during backpropagation?

For example:

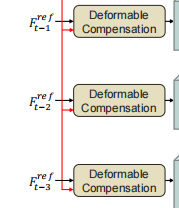

A single Deformable Compensation module is used three times in this model, so all three uses share the same weights.

What will happen when I use loss.backward()?

Will loss.backward() work correctly?

CodePudding user response:

The nice thing about autograd and backward passes is that the underlying framework is not "algorithmic" but mathematical: it implements the chain rule of derivatives. There are therefore no special "algorithmic" considerations for "shared weights" or "weighting different layers"; it's pure math. The backward pass computes the derivative of your loss function w.r.t. the weights, and when a weight is used in several places, the contributions from each use are simply summed.
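To see why no special handling is needed, here is a short derivation sketch. The symbols are my own notation, not something from your model: $\theta$ is the shared weight, $K$ is the number of times the module is used, and $\theta_k$ is a conceptual "copy" of the weight for the $k$-th use, constrained so that $\theta_k = \theta$. The multivariable chain rule then gives

$$
\frac{\partial \mathcal{L}}{\partial \theta}
= \sum_{k=1}^{K} \frac{\partial \mathcal{L}}{\partial \theta_k}\,\frac{\partial \theta_k}{\partial \theta}
= \sum_{k=1}^{K} \left.\frac{\partial \mathcal{L}}{\partial \theta_k}\right|_{\theta_1 = \dots = \theta_K = \theta}
$$

since $\partial \theta_k / \partial \theta = 1$. In your case $K = 3$: the gradient of the shared module is the sum of the gradients it would receive at each of its three positions.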

Sharing weights can be done globally (e.g., when training Siamese networks), on a "layer level" (as in your example), but also within a layer. When you think about it, convolution layers and recurrent layers are a fancy way of locally sharing weights.

Naturally, PyTorch (like other mainstream DL frameworks) handles these cases without any special treatment.

As long as your "deformable compensation" layer is correctly implemented, PyTorch will take care of the gradients for you in a mathematically correct manner, thanks to the chain rule.
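If you want to convince yourself, a minimal sketch along these lines should do it. I am assuming a plain `nn.Linear` as a hypothetical stand-in for your Deformable Compensation module (the real layer is not shown in the question), and the names `shared`, `copies`, `grad_shared` and `grad_sum` are only illustrative. It uses one module three times, then compares its gradient with the sum of the gradients from three separate copies that hold identical weights.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the shared module (assumption: any nn.Module
# with parameters behaves the same way under autograd).
shared = nn.Linear(4, 4)
x = torch.randn(2, 4)

# Case 1: the same module is applied three times in one forward pass.
h = x
for _ in range(3):
    h = shared(h)
loss_shared = h.pow(2).mean()
loss_shared.backward()
grad_shared = shared.weight.grad.clone()

# Case 2: three independent modules initialised with the same weights.
copies = [nn.Linear(4, 4) for _ in range(3)]
for c in copies:
    c.load_state_dict(shared.state_dict())

h = x
for c in copies:
    h = c(h)
loss_copies = h.pow(2).mean()
loss_copies.backward()

# Sum the per-position gradients of the three copies.
grad_sum = sum(c.weight.grad for c in copies)

# The shared module's gradient should match the sum over its three uses.
print(torch.allclose(grad_shared, grad_sum, atol=1e-6))
```

One practical note: because gradients accumulate into `.grad`, you still need the usual `optimizer.zero_grad()` between iterations, exactly as you would without weight sharing.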