I'm trying to apply spaCy's tokenizer to a dataframe column to get a new column containing a list of tokens.

Assume we have the following dataframe:

import pandas as pd

details = {

'Text_id' : [23, 21, 22, 21],

'Text' : ['All roads lead to Rome',

'All work and no play makes Jack a dull buy',

'Any port in a storm',

'Avoid a questioner, for he is also a tattler'],

}

# creating a Dataframe object

example_df = pd.DataFrame(details)

The code below aims to tokenize the Text column:

import spacy

nlp = spacy.load("en_core_web_sm")

example_df["tokens"] = example_df["Text"].apply(lambda x: nlp.tokenizer(x))

example_df

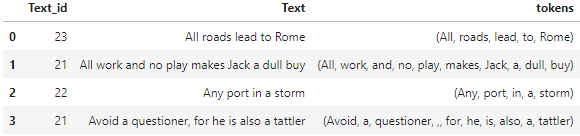

The results looks like:

Now we have a new column, tokens, which holds a Doc object for each sentence.

How can we change the code to get a Python list of tokenized words?

I've tried the following line:

example_df["tokens"] = example_df["Text"].apply(token.text for token in (lambda x: nlp.tokenizer(x)))

but I have the following error:

TypeError Traceback (most recent call last)

/tmp/ipykernel_33/3712416053.py in <module>

14 nlp = spacy.load("en_core_web_sm")

15

---> 16 example_df["tokens"] = example_df["Text"].apply(token.text for token in (lambda x: nlp.tokenizer(x)))

17

18 example_df

TypeError: 'function' object is not iterable

Thank you in advance!

UPDATE: I have a solution, but I still have another problem. I want to count words using the built-in Counter class, which takes a list as input and can be incrementally updated with the token list of another document via its update function. The code below should return the number of occurrences of each word in the dataframe:

from collections import Counter

# instantiate counter object

counter_df = Counter()

# call the update function of the counter object to update the counts

example_df["tokens"].map(counter_df.update)

However, the output is:

0 None

1 None

2 None

3 None

Name: tokens, dtype: object

The expected output should look like:

Counter({'All': 2, 'roads': 1, 'lead': 1, 'to': 1, 'Rome': 1, 'work': 1, 'and': 1, 'no': 1, 'play': 1, 'makes': 1, 'a': 4, 'dull':1, 'buy':1, 'Any':1, 'port':1, 'in': 1, 'storm':1, 'Avoid':1, 'questioner':1, ',':1, 'for':1, 'he':1})

Thank you again :)

CodePudding user response:

You can use

example_df["tokens"] = example_df["Text"].apply(lambda x: [t.text for t in nlp.tokenizer(x)])

See the Pandas test:

import pandas as pd

details = {

'Text_id' : [23, 21, 22, 21],

'Text' : ['All roads lead to Rome',

'All work and no play makes Jack a dull buy',

'Any port in a storm',

'Avoid a questioner, for he is also a tattler'],

}

# creating a Dataframe object

example_df = pd.DataFrame(details)

import spacy

nlp = spacy.load("en_core_web_sm")

example_df["tokens"] = example_df["Text"].apply(lambda x: [t.text for t in nlp.tokenizer(x)])

print(example_df.to_string())

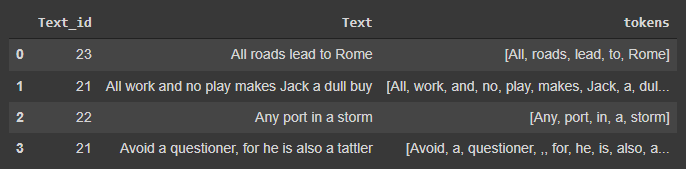

Output:

Text_id Text tokens

0 23 All roads lead to Rome [All, roads, lead, to, Rome]

1 21 All work and no play makes Jack a dull buy [All, work, and, no, play, makes, Jack, a, dull, buy]

2 22 Any port in a storm [Any, port, in, a, storm]

3 21 Avoid a questioner, for he is also a tattler [Avoid, a, questioner, ,, for, he, is, also, a, tattler]
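As for the TypeError in the question: the generator expression there iterates over the lambda itself rather than over the result of calling it. Here is a minimal sketch of the difference using only the standard library. The `tokenize` function is a hypothetical stand-in for `nlp.tokenizer` (it just splits on whitespace), so the tokens are not what spaCy would produce for punctuation.

```python
# Hypothetical stand-in for nlp.tokenizer: splits on whitespace only.
tokenize = lambda text: text.split()

# Iterating over the function object itself reproduces the error from the question.
try:
    tokens = [tok for tok in tokenize]
except TypeError as exc:
    error_message = str(exc)

# Calling the function first, then iterating over its result, works.
tokens = [tok for tok in tokenize("All roads lead to Rome")]
```

The working line has the same shape as the lambda passed to apply above: the function is called on each text, and the list comprehension runs over what it returns.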

CodePudding user response:

Try this

example_df["tokens"] = example_df["Text"].apply(lambda x : [token.text for token in nlp.tokenizer(x)])

which gives us a Python list of token strings for each row.

As discussed in the comments, the OP needs the frequency of every word in the lists.

from collections import Counter

counter_df = Counter()

def exfunc(row):

    # add the tokens of one row to the running totals
    counter_df.update(row)

_ = example_df["tokens"].apply(exfunc)

which gives the expected output

Counter({',': 1,

'All': 2,

'Any': 1,

'Avoid': 1,

'Jack': 1,

'Rome': 1,

'a': 4,

'also': 1,

'and': 1,

'buy': 1,

'dull': 1,

'for': 1,

'he': 1,

'in': 1,

'is': 1,

'lead': 1,

'makes': 1,

'no': 1,

'play': 1,

'port': 1,

'questioner': 1,

'roads': 1,

'storm': 1,

'tattler': 1,

'to': 1,

'work': 1})

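Q: Why did your solution with map not work?

Map substitutes each value in a Series with another value, which may be derived from a function, a dict or a Series. Counter.update modifies the counter in place and returns None, so map returns a Series of None values. The counts themselves are stored in counter_df, so inspect that object after the call instead of the value returned by map.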
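To illustrate the return-value behaviour, here is a minimal sketch using only the standard library. The plain `token_lists` below is an assumption standing in for the first two rows of the tokens column, so that the snippet runs without pandas or spaCy.

```python
from collections import Counter
from itertools import chain

# Plain lists standing in for the first two rows of the "tokens" column (assumption).
token_lists = [
    ["All", "roads", "lead", "to", "Rome"],
    ["All", "work", "and", "no", "play", "makes", "Jack", "a", "dull", "buy"],
]

counter_df = Counter()

# Counter.update mutates the counter in place and returns None,
# so collecting its return values yields only None entries.
returned = [counter_df.update(tokens) for tokens in token_lists]

# Alternative: build the counter in one pass over all token lists.
counts = Counter(chain.from_iterable(token_lists))
```

After this runs, `returned` holds only None values while `counter_df` and `counts` hold the same word frequencies. A pandas Series is iterable, so passing `example_df["tokens"]` to `chain.from_iterable` should work the same way.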