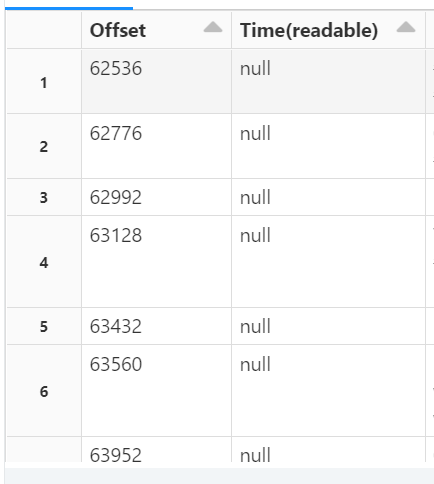

I'm trying to cast a column whose values look like "11/14/2022 4:48:24 PM" to `TimestampType`. However, when I display the results, the values are all null.

Here is the sample code I'm using to cast the timestamp field:

```scala
val messages = df
  .withColumn("Offset", $"Offset".cast(LongType))
  .withColumn("Time(readable)", $"EnqueuedTimeUtc".cast(TimestampType))
  .withColumn("Body", $"Body".cast(StringType))
  .select("Offset", "Time(readable)", "Body")

display(messages)
```

Is there another way to convert this column so that the values aren't null?

Answer:

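Casting a string to `TimestampType` only succeeds when the string is already in a format Spark understands, such as `2022-11-14 16:48:24`; for anything else the cast returns null, which is what you're seeing. Instead of casting, use the `to_timestamp` function and pass the time format explicitly, like so: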

```scala
import org.apache.spark.sql.types._
import org.apache.spark.sql.functions._
import spark.implicits._ // enables toDF and $"col"; `spark` is the notebook's SparkSession

// Sample rows in the same format as the question's EnqueuedTimeUtc column
val time_df = Seq((62536, "11/14/2022 4:48:24 PM"), (62537, "12/14/2022 4:48:24 PM")).toDF("Offset", "Time")

val messages = time_df
  .withColumn("Offset", $"Offset".cast(LongType))
  .withColumn("Time(readable)", to_timestamp($"Time", "MM/dd/yyyy h:mm:ss a")) // h = 12-hour clock, a = AM/PM
  .select("Offset", "Time(readable)")

messages.show(false)
```

```
+------+-------------------+
|Offset|Time(readable)     |
+------+-------------------+
|62536 |2022-11-14 16:48:24|
|62537 |2022-12-14 16:48:24|
+------+-------------------+

messages: org.apache.spark.sql.DataFrame = [Offset: bigint, Time(readable): timestamp]
```
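To see what each letter in `"MM/dd/yyyy h:mm:ss a"` means, here is a small stand-alone sketch that uses plain `java.time` instead of Spark. It assumes that Spark 3.x's default parser, which is built on `java.time.format.DateTimeFormatter`, gives these letters the same meaning; the object name `PatternDemo` is only illustrative.

```scala
import java.time.LocalDateTime
import java.time.format.DateTimeFormatter
import java.util.Locale

object PatternDemo {
  // Same pattern string as the to_timestamp call:
  //   MM = two-digit month, dd = two-digit day, yyyy = year,
  //   h = clock hour 1-12, mm = minutes, ss = seconds, a = AM/PM marker.
  // Locale.US is pinned so "AM"/"PM" are recognised whatever the JVM default locale is.
  val formatter: DateTimeFormatter =
    DateTimeFormatter.ofPattern("MM/dd/yyyy h:mm:ss a", Locale.US)

  def parse(s: String): LocalDateTime = LocalDateTime.parse(s, formatter)

  def main(args: Array[String]): Unit = {
    // "4 ... PM" resolves to hour 16 on the 24-hour clock
    println(parse("11/14/2022 4:48:24 PM")) // prints 2022-11-14T16:48:24
  }
}
```

Leaving out the `a` (or using `HH` instead of `h`) would make the AM/PM information unusable, which is why the pattern needs both.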

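One thing to remember: depending on your Spark version and data, you may also have to set one Spark configuration to allow the legacy time parser policy. On Spark 3.x the default policy raises a `SparkUpgradeException` when the new parser and the pre-3.0 parser would give different results for a value:

```scala
spark.conf.set("spark.sql.legacy.timeParserPolicy", "LEGACY")
```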
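According to the Spark configuration docs, `LEGACY` makes Spark parse with `java.text.SimpleDateFormat` (the pre-3.0 approach), while `CORRECTED` uses `java.time` classes. One way the two disagree is digit counts: `SimpleDateFormat` ignores the number of pattern letters when parsing unless it is needed to separate adjacent fields, whereas `java.time` treats `MM` as exactly two digits. The JVM-only sketch below (no Spark involved; the input `1/5/2023 4:48:24 PM` and the name `ParserComparison` are hypothetical) illustrates that difference, and shows that a pattern like `M/d/yyyy` accepts one-digit months under `java.time` as well.

```scala
import java.text.SimpleDateFormat
import java.time.{LocalDateTime, ZoneOffset}
import java.time.format.DateTimeFormatter
import java.util.{Locale, TimeZone}
import scala.util.Try

object ParserComparison {
  val pattern = "MM/dd/yyyy h:mm:ss a"

  // Legacy-style parsing: SimpleDateFormat accepts "1" for "MM" because the
  // month is followed by a literal '/', so the letter count is not enforced.
  def parseLegacy(s: String, p: String = pattern): Option[LocalDateTime] = Try {
    val sdf = new SimpleDateFormat(p, Locale.US)
    sdf.setTimeZone(TimeZone.getTimeZone("UTC")) // keep the wall-clock time unchanged
    sdf.parse(s).toInstant.atZone(ZoneOffset.UTC).toLocalDateTime
  }.toOption

  // java.time-style parsing: "MM" means exactly two digits, so "1/..." fails.
  def parseJavaTime(s: String, p: String = pattern): Option[LocalDateTime] =
    Try(LocalDateTime.parse(s, DateTimeFormatter.ofPattern(p, Locale.US))).toOption

  def main(args: Array[String]): Unit = {
    val input = "1/5/2023 4:48:24 PM" // hypothetical value with a one-digit month and day
    println(parseLegacy(input))                         // Some(2023-01-05T16:48:24)
    println(parseJavaTime(input))                       // None
    println(parseJavaTime(input, "M/d/yyyy h:mm:ss a")) // Some(2023-01-05T16:48:24)
  }
}
```

This is only an analogy for the two Spark policies, not Spark's own code path; if your data always has two-digit months and days, as in the question, both parsers should agree.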