I am trying to extract data from this site of the table which has 382 rows.This is the site:

I am using beautifulsoup for scraping and i want this program to run every 5 min schedule.I am trying to insert the value in a json list with exactly 382 rows excluding the header and first column with numbering.this is my code:

import requests

from bs4 import BeautifulSoup

def convert_to_html5lib(URL, my_list):

r = requests.get(URL)

# Create a BeautifulSoup object

soup = BeautifulSoup(r.content, 'html5lib')

soup.prettify()

# result = soup.find_all("div")[1].get_text()

result = soup.find('table', {'class': 'table table-bordered background-white shares-table fixedHeader'}).get_text()

# result = result.find('tbody')

print(result)

for item in result.split():

my_list.append(item)

print(my_list)

# return

details_list = []

convert_to_html5lib("http://www.dsebd.org/latest_share_price_scroll_l.php", details_list)

counter = 0

while counter < len(details_list):

if counter == 0:

company_name = details_list[counter]

counter = 1

last_trading_price = details_list[counter]

counter = 1

last_change_price_in_value = details_list[counter]

counter = 1

schedule.every(5).minutes.do(scrape_stock)

But i am not getting all the value of the table.I want all the data of 382 rows table as a list so later i can save it into database.But i am not getting any result and also scheduler not working.What am i doing wrong here?

CodePudding user response:

You can use BeautifulSoup for requirement

here are wrong in some points

- scraping only 1 row.

- use Schedule library in proper way. (reference :https://www.geeksforgeeks.org/python-schedule-library/)

Here is your solution with changes :

import schedule

import time

from bs4 import BeautifulSoup

import requests

def convert_to_html5lib(url,details_list):

# Make a GET request to fetch the raw HTML content

html_content = requests.get(url).text

# Parse the html content

soup = BeautifulSoup(html_content, "lxml")

# extract table from webpage

table = soup.find("table", { "class" : "table table-bordered background-white shares-table fixedHeader" })

rows = table.find_all('tr')

for row in rows:

cols=row.find_all('td')

# remove first element from row

cols=[x.text.strip() for x in cols[1:]]

details_list.append(cols)

print(cols)

# return

details_list = []

counter = 0

url="http://www.dsebd.org/latest_share_price_scroll_l.php"

# schedule job for every 5 mins

schedule.every(5).minutes.do(convert_to_html5lib,url,details_list)

# same as your logic

while counter < len(details_list):

if counter == 0:

company_name = details_list[counter]

counter = 1

last_trading_price = details_list[counter]

counter = 1

last_change_price_in_value = details_list[counter]

counter = 1

# scheduler wait for 5 mins

while True:

schedule.run_pending()

time.sleep(5)

CodePudding user response:

You can check my code first to get all the data in the table. Since the data here is always updated, I think it would be better to use selenium.

from bs4 import BeautifulSoup

import requests

from selenium import webdriver

import pandas as pd

url = "https://www.dsebd.org/latest_share_price_scroll_l.php"

driver = webdriver.Firefox(executable_path="") // Insert your webdriver path please

driver.get(url)

html = driver.page_source

soup = BeautifulSoup(html)

table = soup.find_all('table', {'class': 'table table-bordered background-white shares-table fixedHeader'})

df = pd.read_html(str(table))

print(df)

Output:

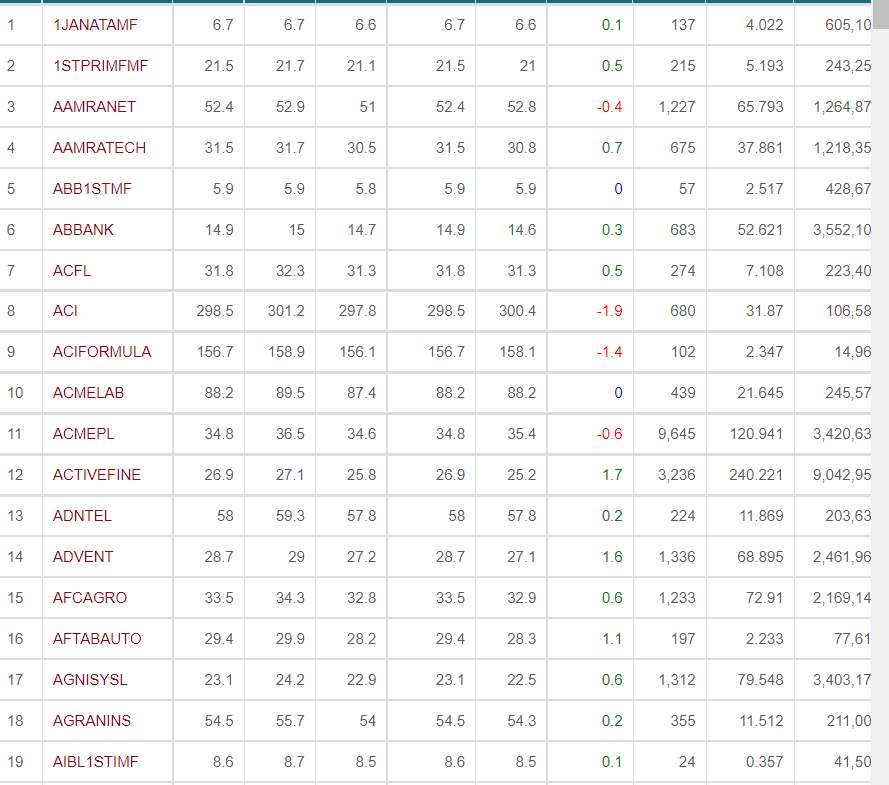

[ Unnamed: 0 Unnamed: 1 Unnamed: 2 ... Unnamed: 8 Unnamed: 9 Unnamed: 10

0 1 1JANATAMF 6.7 ... 137 4.022 605104

1 2 1STPRIMFMF 21.5 ... 215 5.193 243258

2 3 AAMRANET 52.4 ... 1227 65.793 1264871

3 4 AAMRATECH 31.5 ... 675 37.861 1218353

4 5 ABB1STMF 5.9 ... 57 2.517 428672

.. ... ... ... ... ... ... ...

377 378 WMSHIPYARD 11.2 ... 835 14.942 1374409

378 379 YPL 11.3 ... 247 4.863 434777

379 380 ZAHEENSPIN 8.8 ... 174 2.984 342971

380 381 ZAHINTEX 7.7 ... 111 1.301 174786

381 382 ZEALBANGLA 120.0 ... 102 0.640 5271

[382 rows x 11 columns]]