I want to execute an Azure Synapse pipeline whenever a file is copied into a folder in the data lake.

Can we do that and how can we achieve that?

Thanks, Pavan.

CodePudding user response:

Yes. You can start a pipeline run whenever a file is copied to a data lake folder by using a storage event trigger. A storage event trigger starts the pipeline when the selected storage event (blob created or blob deleted) occurs.

You can follow the steps specified below to create a storage event trigger.

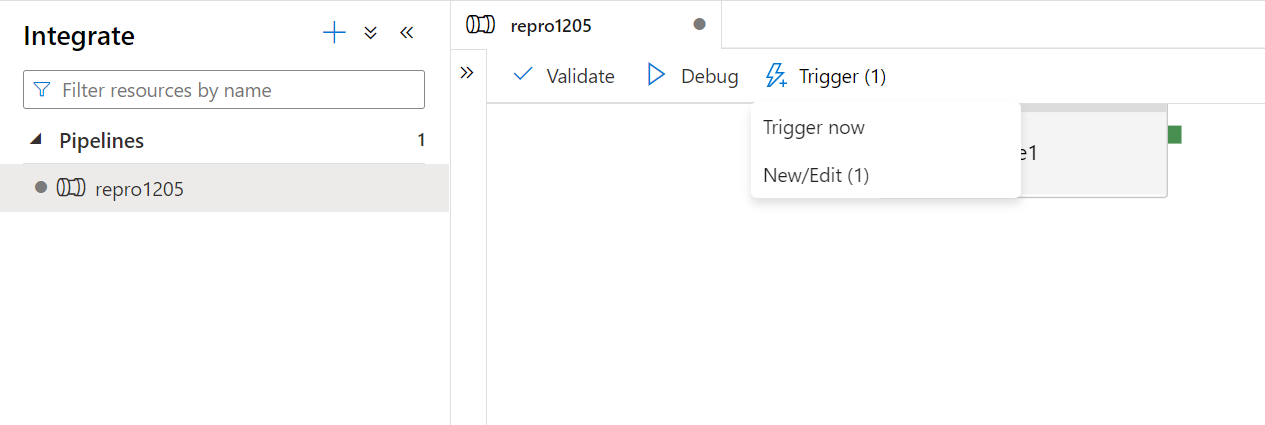

Assuming you have a pipeline named 'pipeline1' in Azure Synapse that you want to run when a file is copied to a data lake folder, open the pipeline, click **Add trigger** and select **New/Edit**.

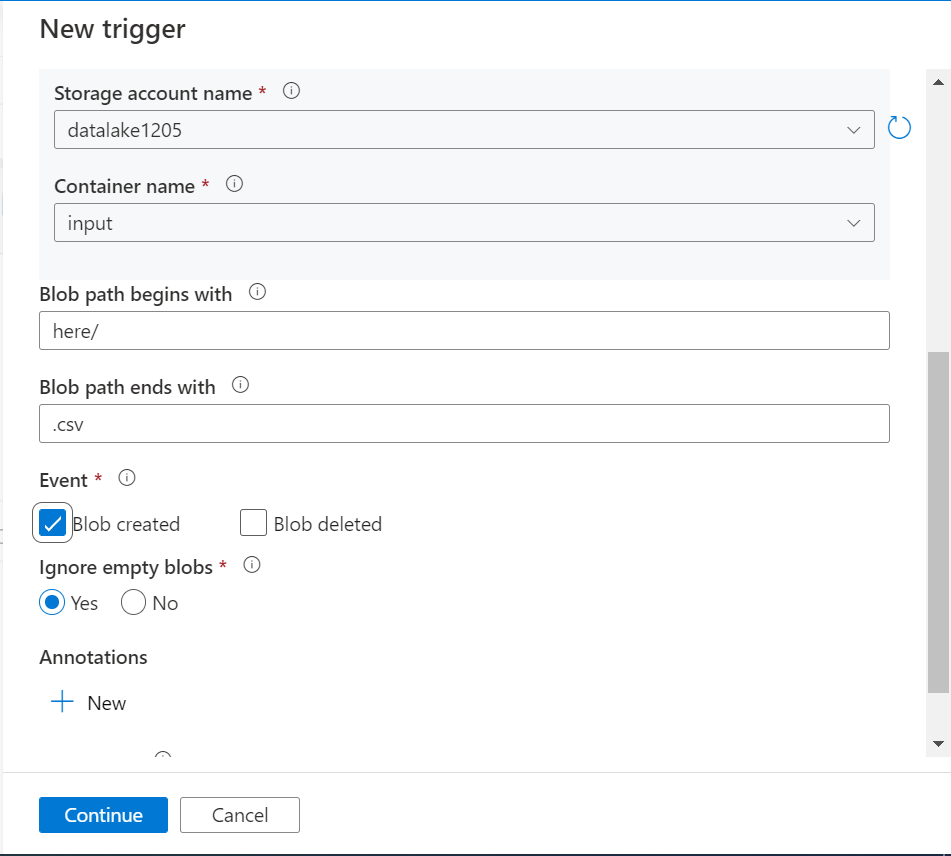

Choose a new trigger. Select **Storage events** as the trigger type and specify the details of the data lake storage account that should fire the trigger when a file is copied into it. Specify **Container name**, **Blob path begins with** and **Blob path ends with** according to your data lake directory structure and the type of files.
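To get a feel for how the two path filters combine, here is a minimal Python sketch. It is only a simplified model (plain prefix and suffix checks on the path inside the container), not the service's actual matching code, so details such as case sensitivity are not modelled. The function name `would_fire` and the folder layout are hypothetical.

```python
def would_fire(blob_path: str, begins_with: str = "", ends_with: str = "") -> bool:
    """Return True if a blob path (relative to the container) passes both filters.

    Simplified model: an empty filter matches every path.
    """
    return blob_path.startswith(begins_with) and blob_path.endswith(ends_with)


# Hypothetical layout: files land under input/ and only CSV files matter.
for path in ["input/2024/sales.csv", "input/2024/readme.txt", "archive/sales.csv"]:
    print(path, would_fire(path, begins_with="input/", ends_with=".csv"))
```

A blob has to satisfy both filters, so keeping the prefix as narrow as your folder structure allows helps avoid runs for files you do not care about.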

Since you need to start the pipeline when a blob appears in the data lake folder, check the **Blob created** event. Check **Start trigger on creation**, finish creating the trigger, and publish it.
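If you prefer to check the result in the trigger's code view, the definition should look roughly like the JSON printed by the sketch below. The field names follow the `BlobEventsTrigger` shape documented for Data Factory, which I am assuming applies to Synapse as well. The trigger name, container, folder, and the placeholder storage account ID are hypothetical values; replace them with your own.

```python
import json


def build_storage_event_trigger(trigger_name, pipeline_name, storage_account_id,
                                container, begins_with, ends_with):
    """Build a dict mirroring a storage event trigger definition (illustrative only)."""
    return {
        "name": trigger_name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                # In the JSON form the container and folder are joined by the literal "/blobs/".
                "blobPathBeginsWith": f"/{container}/blobs/{begins_with}",
                "blobPathEndsWith": ends_with,
                "ignoreEmptyBlobs": True,
                "scope": storage_account_id,
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


trigger = build_storage_event_trigger(
    trigger_name="trigger1",      # hypothetical trigger name
    pipeline_name="pipeline1",    # pipeline name used in the steps above
    storage_account_id=(          # placeholder resource ID, fill in your own
        "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
        "/providers/Microsoft.Storage/storageAccounts/<storage-account>"
    ),
    container="mycontainer",      # hypothetical container
    begins_with="input/",         # hypothetical folder
    ends_with=".csv",             # hypothetical file type
)
print(json.dumps(trigger, indent=2))
```

This only builds and prints the definition; it does not create anything in your workspace. Creating and publishing the trigger is still done through the steps above.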

These steps create a storage event trigger for your pipeline on the data lake storage account. As soon as a file is uploaded or copied to the specified directory of the data lake container, the pipeline run starts and you can continue with any further steps. Refer to the following document to learn more about event triggers.

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-event-trigger?tabs=data-factory