I have a CSV data like the below:

time_value,annual_salary

5/01/2019 1:02:16,120.56

06/01/2019 2:02:17,12800

7/01/2019 03:02:18,123.00

08/01/2019 4:02:19,123isdhad

Now, I want to convert to the timestamp column. So, I created a view out of these records and tried to convert it but it throws an error:

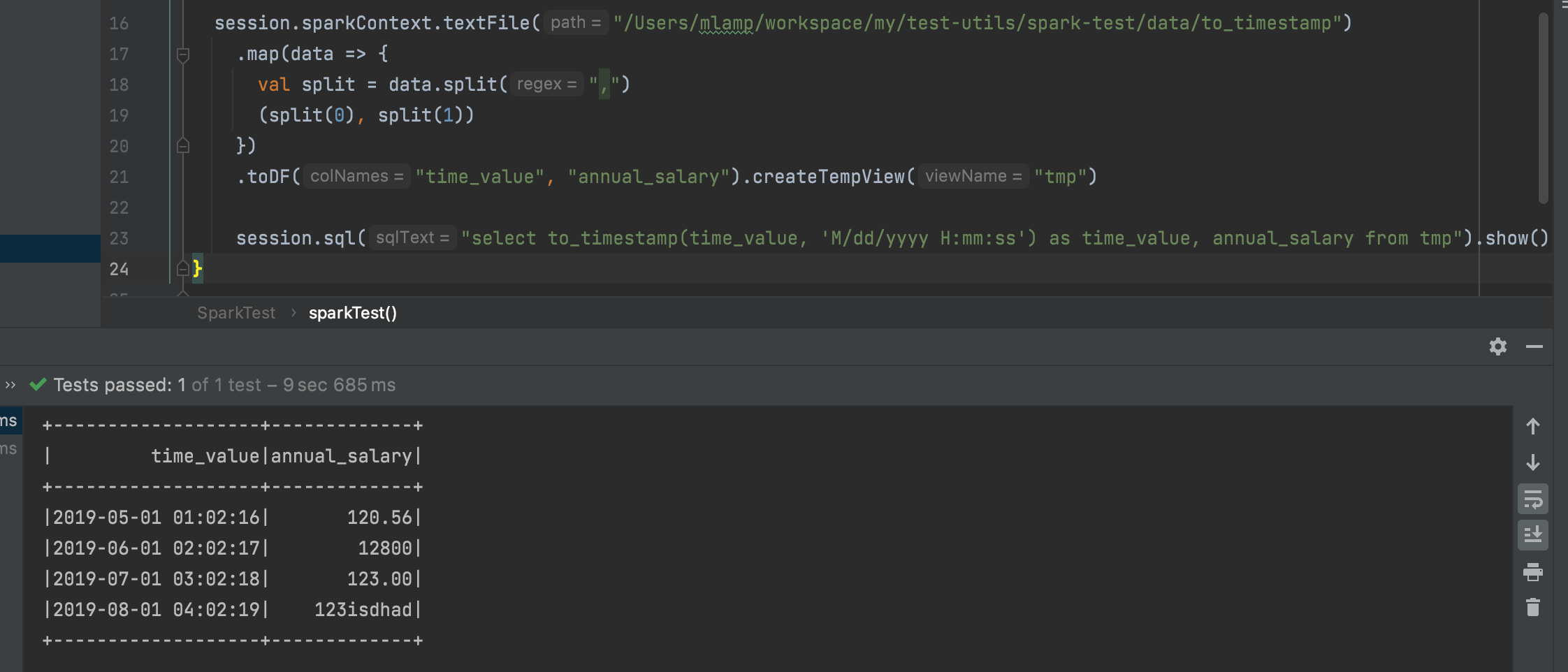

spark.sql("select to_timestamp(time_value,'M/dd/yyyy H:mm:ss') as time_value from table")

Error:

Text '5/1/2019 1:02:16' could not be parsed

CodePudding user response:

Sid,

According to the error that i am seeing there, this is concerning the Date Format issue.

Text '5/1/2019 1:02:16' could not be parsed

But your time format is specific as such 'M/dd/yyyy H:mm:ss') You can see that the day specific is /1/ but your format is dd which expects two digits.

Please try the following format

'M/d/yyyy H:mm:ss'

CodePudding user response:

I tried your SQL no problem. It may be a problem with the spark version. I used 2.4.8

CodePudding user response:

Pay attention to the fact that there is a changement in the format with Spark 3.0 (https://spark.apache.org/docs/3.0.0-preview2/sql-migration-guide.html#:~:text=To parse the same timestamp,SSS .)

Unfortunately I don't have the time to run the code locally and explain in detail what is wrong but I remember having a similar problem when following a Spark 2 tutorial with Spark 3.0 running.