I cannot connect to my Oracle database from Azure Databricks, although the connection works in ADF (Azure Data Factory), where I can query the table. ADF is slow at filtering the records, though, so I am still trying to connect from Databricks.

Error message when I tried to establish the connection: DPI-1047: Cannot locate a 64-bit Oracle Client library: "/databricks/driver/oracle_ctl//lib/libclntsh.so: cannot open shared object file: No such file or directory".

When I executed the following command:

dbutils.fs.ls("/databricks/driver/")

there was no such directory.

This raises a few questions:

Does this mean the init-script did not perform its job?

Is /databricks/driver/oracle_ctl a hidden directory for dbutils.fs.ls?

The error message points to /databricks/driver/oracle_ctl//lib/libclntsh.so. When I manually inspected the downloaded Oracle client, there was no folder called lib, although libclntsh.so exists in the main directory. Is Databricks looking in the wrong directory for libclntsh.so?

Does this connection still work for others?

Syntax for the connection: cx_Oracle.connect(user=user_name, password=password, dsn=f"{ip}:{port}/{db_name}")

The above syntax works fine when connecting from an on-premises machine.

CodePudding user response:

You will get the above error if Oracle Instant Client is not installed on your cluster.

To resolve the error in Azure Databricks, run the following code:

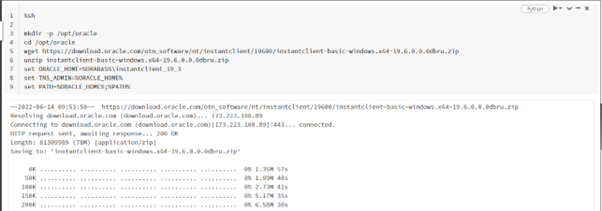

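%sh
# Databricks clusters run Linux, so download the Linux x64 Instant Client rather than the Windows archive
mkdir -p /opt/oracle
cd /opt/oracle
wget https://download.oracle.com/otn_software/linux/instantclient/19600/instantclient-basic-linux.x64-19.6.0.0.0dbru.zip
unzip instantclient-basic-linux.x64-19.6.0.0.0dbru.zip
# The 19.6 archive unpacks into instantclient_19_6
export ORACLE_HOME=/opt/oracle/instantclient_19_6
export LD_LIBRARY_PATH=$ORACLE_HOME:$LD_LIBRARY_PATH
export TNS_ADMIN=$ORACLE_HOME
export PATH=$ORACLE_HOME:$PATH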

To create the init script, use the following code:

To read data from an Oracle database in PySpark, follow this article by Emrah Mete.

For more information, refer to the official documentation:

https://docs.databricks.com/data/data-sources/oracle.html#oracle
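As a rough illustration of the PySpark route mentioned above, here is a minimal sketch that builds the options for Spark's JDBC reader. It assumes the Oracle JDBC driver is already installed on the cluster; the helper name oracle_jdbc_options and all host, service and credential values are hypothetical placeholders, not taken from the article.

```python
def oracle_jdbc_options(host, port, service_name, query, user, password):
    """Build an option map for Spark's JDBC data source.

    Every argument is a placeholder supplied by the caller; nothing here
    contacts the database.
    """
    return {
        # Oracle thin JDBC URL in host:port/service form
        "url": f"jdbc:oracle:thin:@//{host}:{port}/{service_name}",
        "driver": "oracle.jdbc.driver.OracleDriver",
        # "query" lets the database apply the filter instead of Spark
        "query": query,
        "user": user,
        "password": password,
    }


# In a Databricks notebook (where `spark` is predefined) this could be used as:
#   opts = oracle_jdbc_options("dbhost", 1521, "service", "select ...", user, pw)
#   df = spark.read.format("jdbc").options(**opts).load()
```

Passing a filtered statement through the query option pushes the filtering to Oracle, which may help with the slow filtering described in the question.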

CodePudding user response:

Try installing the latest major release of cx_Oracle, which has been renamed to python-oracledb; see the release announcement.

In its default Thin mode, this version doesn't need Oracle Instant Client. The API is the same as cx_Oracle's, although the module name is different.

If I understand the instructions, your init script would do something like:

/databricks/python/bin/pip install oracledb

Application code would be like:

import oracledb

connection = oracledb.connect(user='scott', password=mypw, dsn='yourdbhostname/yourdbservicename')

with connection.cursor() as cursor:
    for row in cursor.execute('select city from locations'):
        print(row)
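For a connection like the one in the question (IP, port and database name), a minimal sketch of the same idea might look as follows. The helper names easy_connect_dsn and fetch_rows are hypothetical, and the oracledb import is deferred so that the DSN helper can be tried without the package installed.

```python
def easy_connect_dsn(host, port, service_name):
    """Return an Easy Connect string of the form host:port/service_name."""
    return f"{host}:{port}/{service_name}"


def fetch_rows(user, password, dsn, sql):
    """Run a query in python-oracledb's default Thin mode and return all rows.

    Assumes `pip install oracledb` has been run on the cluster.
    """
    import oracledb  # imported lazily; no Oracle Instant Client is needed in Thin mode

    with oracledb.connect(user=user, password=password, dsn=dsn) as connection:
        with connection.cursor() as cursor:
            cursor.execute(sql)
            return cursor.fetchall()


# Hypothetical usage with placeholder values:
#   dsn = easy_connect_dsn("10.0.0.5", 1521, "ORCLPDB1")
#   rows = fetch_rows("scott", mypw, dsn, "select city from locations")
```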

Resources:

Home page: oracle.github.io/python-oracledb/

Quick start: Quick Start python-oracledb Installation

Documentation: python-oracledb.readthedocs.io/en/latest/index.html

PyPI: pypi.org/project/oracledb/

Source: github.com/oracle/python-oracledb

Upgrading: Upgrading from cx_Oracle 8.3 to python-oracledb

CodePudding user response:

I changed the path from "/databricks/driver/oracle_ctl/" to "/databricks/driver/oracle_ctl/instantclient" in the init script, and the error no longer appears.

Please use the following init script instead:

dbutils.fs.put("dbfs:/databricks/<init-script-folder-name>/oracle_ctl.sh","""#!/bin/bash
# Install the async I/O library that Oracle Instant Client depends on (-y avoids an interactive prompt)
sudo apt-get install -y libaio1
# Download and unpack the Instant Client Basic Light package
wget --quiet -O /tmp/instantclient-basiclite-linuxx64.zip https://download.oracle.com/otn_software/linux/instantclient/instantclient-basiclite-linuxx64.zip
unzip /tmp/instantclient-basiclite-linuxx64.zip -d /databricks/driver/oracle_ctl/
# Rename the versioned folder to a fixed name so the paths below stay valid
mv /databricks/driver/oracle_ctl/instantclient* /databricks/driver/oracle_ctl/instantclient
# Expose the client libraries to the Spark processes
sudo echo 'export LD_LIBRARY_PATH="/databricks/driver/oracle_ctl/instantclient/"' >> /databricks/spark/conf/spark-env.sh
sudo echo 'export ORACLE_HOME="/databricks/driver/oracle_ctl/instantclient/"' >> /databricks/spark/conf/spark-env.sh
""", True)
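To check whether the init script did its job, it may help to look at the driver's local filesystem directly. dbutils.fs.ls resolves paths against DBFS by default (local paths need a file:/ prefix), which would explain why /databricks/driver/ appeared to be missing. The sketch below uses only the Python standard library; the helper name find_client_libraries is hypothetical.

```python
import glob
import os


def find_client_libraries(client_dir):
    """Return every libclntsh.so* file found under client_dir, searched recursively.

    An empty list means the Oracle client library is not where the driver expects it.
    """
    pattern = os.path.join(client_dir, "**", "libclntsh.so*")
    return sorted(glob.glob(pattern, recursive=True))


# In a notebook cell on the cluster, for example:
#   print(find_client_libraries("/databricks/driver/oracle_ctl"))
```

If the list is empty after a cluster restart, the init script probably did not run or unpacked the archive somewhere else.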

Notes:

The above init-script was advised by a databricks employee and can be found here.

As mentioned by Christopher Jones in one of the comments, cx_Oracle was recently upgraded and renamed to python-oracledb (module name oracledb), which offers a Thin mode and a Thick mode.
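A minimal sketch of how the two modes could be combined, assuming the init script above has set LD_LIBRARY_PATH: Thick mode is enabled only when libclntsh.so is actually present, otherwise the connection stays in Thin mode. The helper names client_library_on_path and connect are hypothetical.

```python
import os


def client_library_on_path(env=None):
    """Return True if some LD_LIBRARY_PATH entry contains libclntsh.so."""
    env = os.environ if env is None else env
    for directory in env.get("LD_LIBRARY_PATH", "").split(os.pathsep):
        if directory and os.path.isfile(os.path.join(directory, "libclntsh.so")):
            return True
    return False


def connect(user, password, dsn):
    """Connect in Thick mode when the client library is available, else in Thin mode."""
    import oracledb  # imported lazily; assumes `pip install oracledb`

    if client_library_on_path():
        # No lib_dir argument: on Linux the library is located via LD_LIBRARY_PATH
        oracledb.init_oracle_client()
    return oracledb.connect(user=user, password=password, dsn=dsn)
```

Thin mode covers most use cases, so the Thick branch only matters if a feature that requires the Oracle client libraries is needed.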