Hi, I have images of size 160*120 (160 is the width and 120 is the height). I want to train a network on this data set in Keras without resizing. I think that in Keras, instead of writing

model.add(Conv2D(..., input_shape=(160, 120, 1), ...))

I should write

model.add(Conv2D(..., input_shape=(120, 160, 1), ...))

So does the input shape in Keras take the height first and then the width? With both input shapes the training starts, and no error pops up indicating that the input size doesn't match. One other note: after training the network with

model.add(Conv2D(..., input_shape=(120, 160, 1), ...))

as my input shape, the summary still says Output Shape = (None,159,119,3) after the first convolution layer, which has a 2*2 filter size and 3 filters. I thought it should be Output Shape = (None,119,159,3). I am really confused, can anyone help?

CodePudding user response:

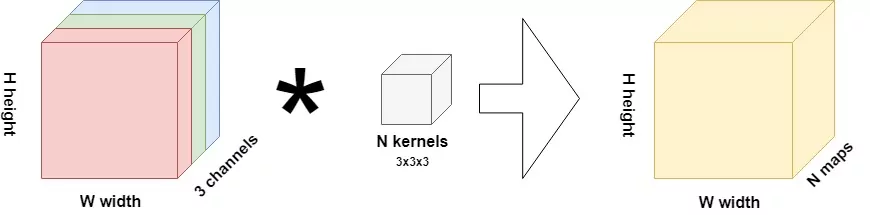

(source: https://www.machinecurve.com/index.php/2021/11/07/introduction-to-isotropic-architectures-in-computer-vision/)
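
To make the ordering concrete: with the default `channels_last` data format, Keras reads `input_shape` as (height, width, channels), so a 160-wide, 120-high grayscale image is `(120, 160, 1)`. The sketch below is plain Python, not Keras code; the helper `conv2d_valid_output_shape` is a hypothetical name used only for illustration, and it assumes the default `padding="valid"` and a stride of 1, as the question appears to use.

```python
def conv2d_valid_output_shape(input_shape, kernel_size, filters, strides=(1, 1)):
    """Illustrative helper (not part of Keras): output shape of a Conv2D layer
    with padding="valid" and channels_last data, i.e. (height, width, channels)."""
    height, width, _channels = input_shape
    kernel_h, kernel_w = kernel_size
    stride_h, stride_w = strides
    out_h = (height - kernel_h) // stride_h + 1
    out_w = (width - kernel_w) // stride_w + 1
    # None stands for the batch dimension, as in model.summary()
    return (None, out_h, out_w, filters)


# 160 wide, 120 high, 1 channel -> height comes first
print(conv2d_valid_output_shape((120, 160, 1), (2, 2), 3))  # (None, 119, 159, 3)

# Width and height swapped -> the shape reported in the question
print(conv2d_valid_output_shape((160, 120, 1), (2, 2), 3))  # (None, 159, 119, 3)
```

If the summary really shows (None, 159, 119, 3), the likely explanation is that the model being summarized was still built with `input_shape=(160, 120, 1)`; rebuilding the model after changing the shape should give (None, 119, 159, 3). A convolution layer works with either spatial size, which would be why the layer definition itself raises no error for either ordering.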