I am plotting some data using the `geom_linerange()` function. The data are daily observations spanning 5 to 10 years, depending on the dataset.

When I run the script on my Mac, the alpha of the line ranges changes with the number of observations in each plot. However, I want all plots to have alpha = 1. Explicitly setting `alpha = 1` in `geom_linerange()` has no effect: the colours are still transparent when a large number of observations are plotted.

When I ran the exact same script on my Windows laptop, the plot was correct, with the default alpha of 1.

Below is a minimal working example:

```r
library(ggplot2)
library(gridExtra)

# Daily random values for drivers A, B and C over 8, 4 and 1 years
df1 = data.frame(name = c("A","B","C"),
                 Date = rep(seq(as.Date("2010-01-01"),as.Date("2018-01-01"),by=1),each=3),
                 value = runif(8769,-1,1))

df2 = data.frame(name = c("A","B","C"),
                 Date = rep(seq(as.Date("2010-01-01"),as.Date("2014-01-01"),by=1),each=3),
                 value = runif(4386,-1,1))

df3 = data.frame(name = c("A","B","C"),
                 Date = rep(seq(as.Date("2010-01-01"),as.Date("2011-01-01"),by=1),each=3),
                 value = runif(1098,-1,1))

# One horizontal bar per driver, made of one-day segments coloured by value
Plot1 = ggplot() +
  geom_linerange(data=df1,aes(x=name,ymin=Date,ymax=Date+1,colour=value),size=15) +
  scale_colour_gradient2(low="red",mid="white",high="blue",midpoint=0,name = "Value") +
  theme_bw() +
  coord_flip() +
  xlab("Driver") +
  ylab("")

Plot2 = ggplot() +
  geom_linerange(data=df2,aes(x=name,ymin=Date,ymax=Date+1,colour=value),size=15) +
  scale_colour_gradient2(low="red",mid="white",high="blue",midpoint=0,name = "Value") +
  theme_bw() +
  coord_flip() +
  xlab("Driver") +
  ylab("")

Plot3 = ggplot() +
  geom_linerange(data=df3,aes(x=name,ymin=Date,ymax=Date+1,colour=value),size=15) +
  scale_colour_gradient2(low="red",mid="white",high="blue",midpoint=0,name = "Value") +
  theme_bw() +
  coord_flip() +
  xlab("Driver") +
  ylab("")

grid.arrange(Plot1,Plot2,Plot3)
```

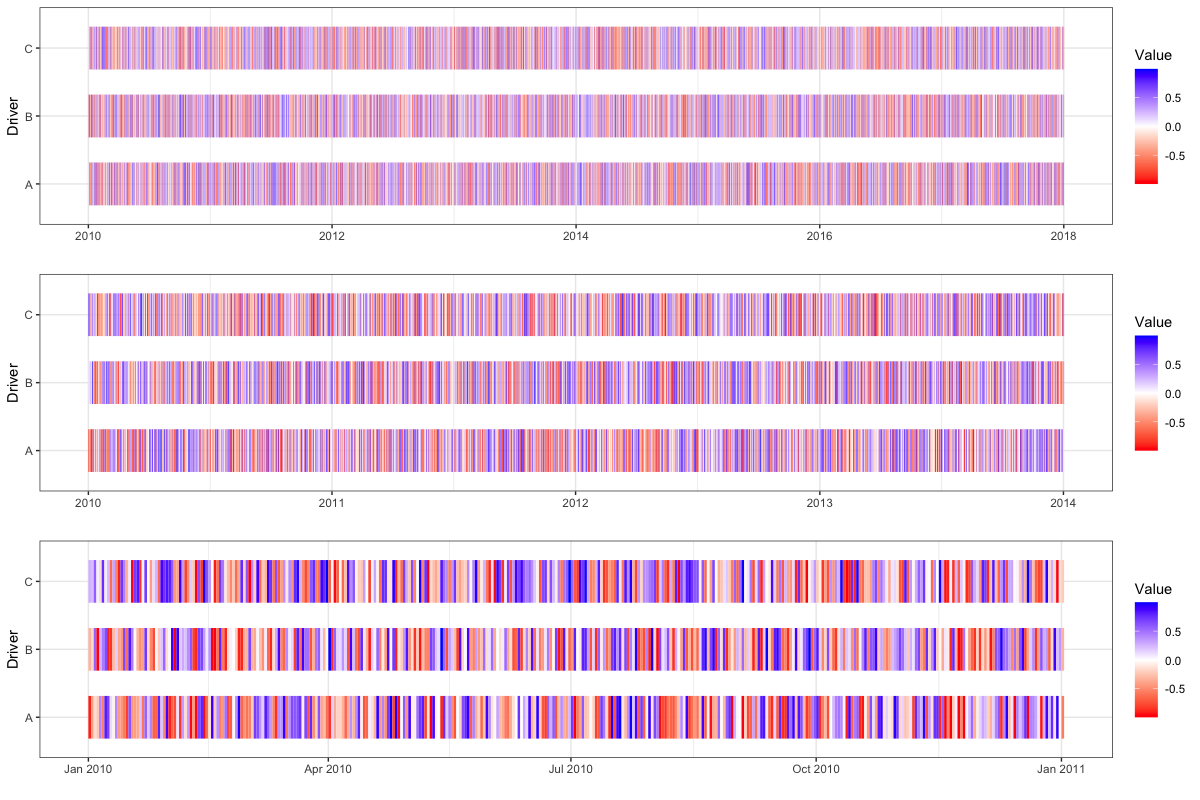

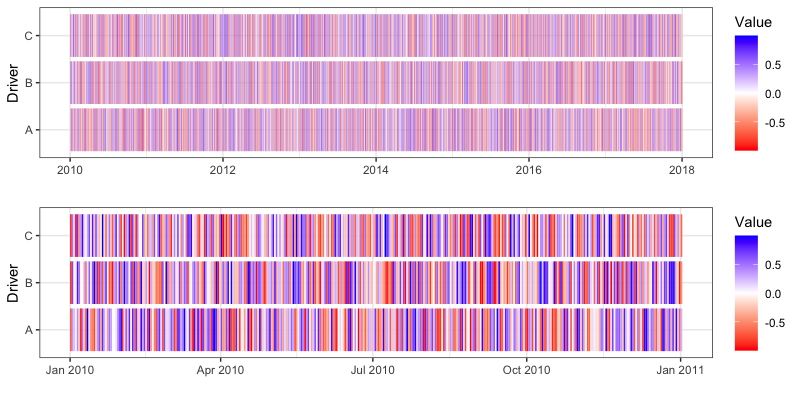

Below is the output on my Mac. The top plot, with the most observations, has the lowest alpha:

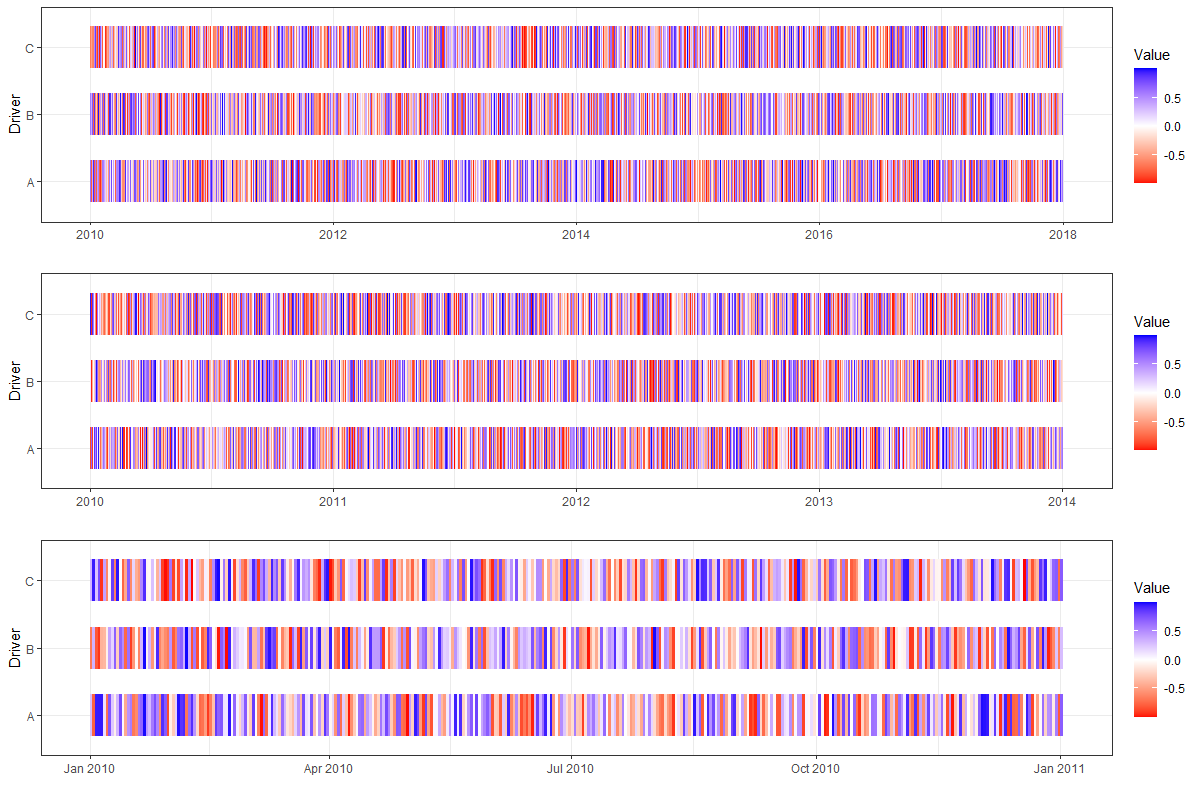

Below is the output on my Windows laptop; as you can see, all plots have alpha = 1:

The code is transferred between the two machines via a GitHub repo.

Unfortunately, I am absolutely stumped as to why this is occurring. Is this expected behaviour on a Mac, or am I doing something wrong?

Many thanks!

Answer:

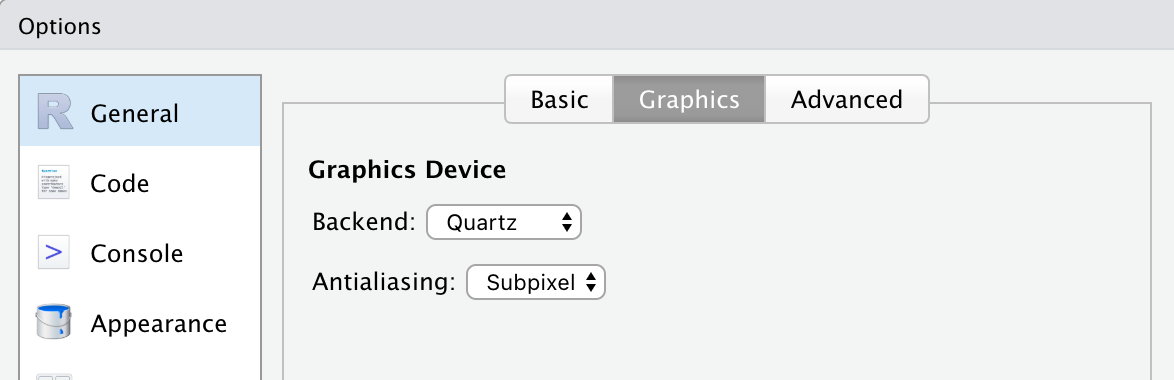

This is a result of the interaction between your high-frequency data and your graphics device, in particular its antialiasing setting/capability. In this case, we are trying to plot about 2,900 days of data using (in my examples below) only about 600 pixels of plot width. With each pixel representing roughly 4 to 5 days of data, antialiasing blends the colours of neighbouring days and gives a more "blurred" look. Because adjacent days in your data swing between red and blue, that blend averages toward the white midpoint of the scale, which looks like reduced alpha. Plotting without antialiasing shows the range of the data better, at the cost of showing less of it (I'm guessing we're effectively seeing only every fourth or fifth day's data).

On Windows, I believe the default graphics device for the Plots pane does not antialias lines. With RStudio's graphics backend set to Quartz and antialiasing turned off, Plot1 and Plot2 look like this:

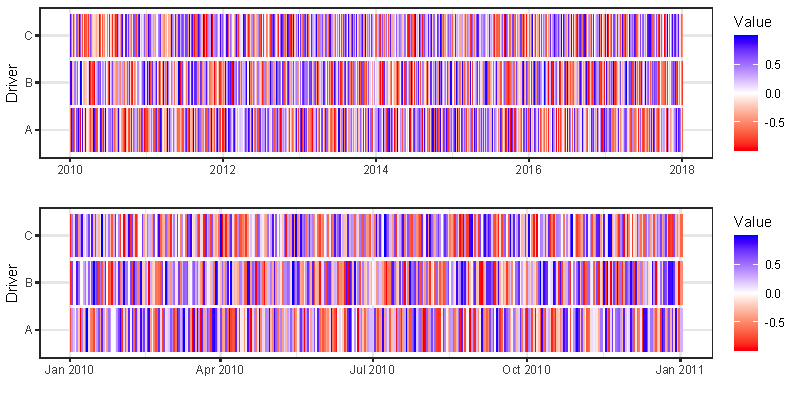

If I enable antialiasing in RStudio's global options, I get a result similar to your Mac output, since the default macOS graphics device (Quartz) uses antialiasing.
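One way to confirm that antialiasing is responsible is to draw the same plots to a PNG file with antialiasing switched off. This is a minimal sketch assuming your R build has Cairo support (`capabilities("cairo")` returns `TRUE`); the `make_plot()` helper, the file name and the image size are hypothetical choices made only to keep the example short. Per the `png()` documentation, its `antialias` argument controls antialiasing of fonts and lines (not fills) for `type = "cairo"`, and `geom_linerange()` draws lines.

```r
library(ggplot2)
library(gridExtra)

# Hypothetical helper: daily random values for drivers A-C from 2010-01-01 to `end`,
# drawn with the same layers as the question's plots
make_plot <- function(end) {
  dates <- seq(as.Date("2010-01-01"), as.Date(end), by = 1)
  df <- data.frame(name  = rep(c("A", "B", "C"), times = length(dates)),
                   Date  = rep(dates, each = 3),
                   value = runif(3 * length(dates), -1, 1))
  ggplot(df, aes(x = name, ymin = Date, ymax = Date + 1, colour = value)) +
    geom_linerange(size = 15) +
    scale_colour_gradient2(low = "red", mid = "white", high = "blue",
                           midpoint = 0, name = "Value") +
    theme_bw() +
    coord_flip() +
    xlab("Driver") +
    ylab("")
}

set.seed(1)
plots <- lapply(c("2018-01-01", "2014-01-01", "2011-01-01"), make_plot)

# Cairo PNG at roughly the size of the RStudio pane, with line antialiasing off:
# each pixel column is painted by roughly one day's segment instead of a blend of several
png("linerange_no_aa.png", width = 700, height = 900,
    type = "cairo", antialias = "none")
grid.arrange(grobs = plots)
invisible(dev.off())
```

Rendering the same code with `antialias = "default"` instead should reproduce the washed-out look for comparison.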

The simplest way to get what you're going for would be to increase the output resolution enough to give each day at least one pixel; that way you can represent 100% of the data and use the full range of your color scale. You could also output to a vector format such as SVG to achieve a much higher effective resolution.
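As a rough sketch of the resolution approach: the longest plot spans 2,923 days (2010-01-01 to 2018-01-01 inclusive), so its panel needs at least that many pixels across. The 600-pixel allowance for axis labels and legend is a guess rather than a measured value, `make_plot()` is a hypothetical helper that rebuilds the question's plots, and `svg()` is the Cairo-based device from grDevices, so it also needs Cairo support.

```r
library(ggplot2)
library(gridExtra)

# Hypothetical helper: rebuilds one of the question's plots (daily data up to `end`)
make_plot <- function(end) {
  dates <- seq(as.Date("2010-01-01"), as.Date(end), by = 1)
  df <- data.frame(name  = rep(c("A", "B", "C"), times = length(dates)),
                   Date  = rep(dates, each = 3),
                   value = runif(3 * length(dates), -1, 1))
  ggplot(df, aes(x = name, ymin = Date, ymax = Date + 1, colour = value)) +
    geom_linerange(size = 15) +
    scale_colour_gradient2(low = "red", mid = "white", high = "blue",
                           midpoint = 0, name = "Value") +
    theme_bw() +
    coord_flip() +
    xlab("Driver") +
    ylab("")
}

set.seed(1)
plots <- lapply(c("2018-01-01", "2014-01-01", "2011-01-01"), make_plot)

# Longest series: 2010-01-01 to 2018-01-01 inclusive
n_days <- as.numeric(as.Date("2018-01-01") - as.Date("2010-01-01")) + 1

# Give every day at least one pixel column; 600 px is a guessed allowance
# for the axis title, tick labels and legend
png("linerange_hires.png", width = n_days + 600, height = 1200, res = 100)
grid.arrange(grobs = plots)
invisible(dev.off())

# Vector alternative: every daily segment is stored individually, so a viewer
# can zoom in until the days are resolved (width and height are in inches)
svg("linerange.svg", width = 14, height = 9)
grid.arrange(grobs = plots)
invisible(dev.off())
```

Note that an SVG viewer will still antialias when it rasterises the file at a low zoom level; the benefit is that zooming in recovers the individual days.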

Alternatively, depending on the nature of your data and what you're trying to show, you might take a rolling average across your days (I expect the result would be similar to the antialiased outputs), or a rolling max, min or SD, or some other summary measure that captures what you want more directly, but at a more digestible time granularity. You might also consider other geometries (like a line chart, a scatter plot, or a horizon plot) that are easier for a reader to map to values.
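For example, a 7-day centred rolling mean per driver, or a weekly summary that needs far fewer pixels, could be computed as below. This is a sketch on random data shaped like the question's `df1`; the 7-day window, the use of `stats::filter()`, and the Monday-start weeks produced by `cut(..., "week")` are arbitrary illustrative choices, not the only way to do it.

```r
library(ggplot2)

set.seed(1)
dates <- seq(as.Date("2010-01-01"), as.Date("2018-01-01"), by = 1)
df1 <- data.frame(name  = rep(c("A", "B", "C"), times = length(dates)),
                  Date  = rep(dates, each = 3),
                  value = runif(3 * length(dates), -1, 1))

# 7-day centred rolling mean, computed separately for each driver;
# the first and last 3 days of each series have no full window and become NA
df1$value_7d <- ave(df1$value, df1$name,
                    FUN = function(v) as.numeric(stats::filter(v, rep(1 / 7, 7), sides = 2)))

# Weekly summary: one row per driver and week (weeks start on Monday)
df1$week <- as.Date(cut(df1$Date, "week"))
weekly <- aggregate(value ~ name + week, data = df1, FUN = mean)

# About 420 weekly segments per driver fit comfortably in a few hundred pixels
p_weekly <- ggplot(weekly, aes(x = name, ymin = week, ymax = week + 7, colour = value)) +
  geom_linerange(size = 15) +
  scale_colour_gradient2(low = "red", mid = "white", high = "blue",
                         midpoint = 0, name = "Weekly mean") +
  theme_bw() +
  coord_flip() +
  xlab("Driver") +
  ylab("")
```

With uniformly random example data the weekly means sit close to zero, so this particular plot will look pale; real data with structure over time should show more contrast. Swapping `mean` for `max`, `min` or `sd` in `aggregate()` gives the other summaries mentioned above.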