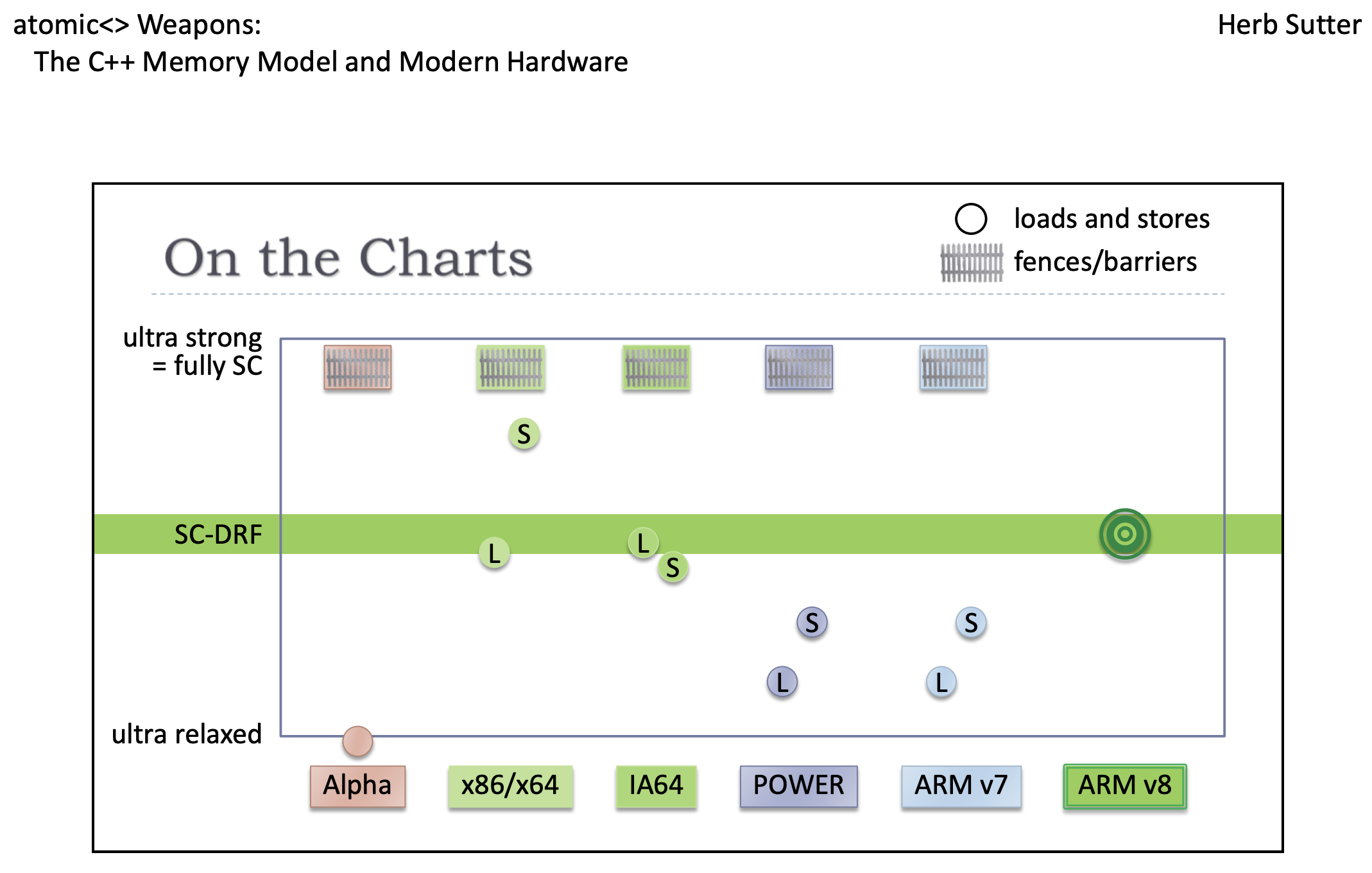

I read about Herb's atomic<> Weapons talk and had a question about page 42:

He mentioned that (50:00 in the video):

(x86) stores are much stronger than they need to be...

What I don't understand is this: if the x86 "S" on the chart is a plain store, i.e. mov, I don't think it's stronger than SC-DRF, because it's only a release store plus total store order (which is why you need an xchg for an SC store). But if it means an SC store, i.e. xchg, it should fall on the "fully SC" bar, because it's effectively a full barrier. How should I interpret the strength of this x86 "S" on the chart?

(SC-DRF is a guarantee of Sequentially Consistent execution for Data Race Free programs, as long as they don't use any atomics with orders weaker than std::memory_order_seq_cst. ISO C++ and Java, and other languages, provide this.)

CodePudding user response:

Yes, he's showing xchg there (a full barrier and an RMW operation), not just a mov store. A plain mov would be below the SC-DRF bar, because it doesn't provide sequential consistency on its own without mfence or another barrier.
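To make the mov vs. xchg distinction concrete, here is a minimal sketch of the two kinds of store in C++. The function names and the `flag` variable are hypothetical, and the instruction names in the comments describe what mainstream compilers typically emit for x86-64; that is an assumption about code generation, not something the C++ standard guarantees.

```cpp
#include <atomic>
#include <cassert>

std::atomic<int> flag{0};  // hypothetical shared variable

// Release store: x86-64 compilers typically emit a plain `mov`.
// This is the store that would sit below the SC-DRF bar.
void store_release(int v) { flag.store(v, std::memory_order_release); }

// seq_cst store: typically `xchg` (or `mov` followed by `mfence`),
// i.e. a full barrier. This is the "S" shown on the chart.
void store_seq_cst(int v) { flag.store(v, std::memory_order_seq_cst); }

// seq_cst load: typically still a plain `mov` on x86-64,
// because the cost of SC is paid on the store side.
int load_seq_cst() { return flag.load(std::memory_order_seq_cst); }
```

Comparing the compiler output for `store_release` and `store_seq_cst` (for example with `-O2 -S`) is a quick way to check which instruction your toolchain actually picks.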

Compare ARM64 stlr / ldar: they can't reorder with each other (not even StoreLoad), but stlr can reorder with other later operations, except of course other release-store operations, or some fences. (As I mentioned in my answer to your previous question.) See also "Does STLR(B) provide sequential consistency on ARM64?" re: interaction with ldar for SC vs. ldapr for just acquire / release or acq_rel. Also "Possible orderings with memory_order_seq_cst and memory_order_release" for another example of how AArch64 compiles (without ARMv8.3 LDAPR).
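The StoreLoad case is the classic store-buffer litmus test. The sketch below is only an illustration: the function names are made up, and the instruction names in the comments are what compilers typically emit, stated as an assumption. With seq_cst on every operation, the C++ memory model forbids both threads reading 0.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// One run of the store-buffer litmus test with seq_cst everywhere.
// Returns true if both threads read 0, which seq_cst forbids.
bool store_buffer_both_zero() {
    std::atomic<int> x{0}, y{0};
    int r1 = -1, r2 = -1;
    std::thread t1([&] {
        x.store(1, std::memory_order_seq_cst);   // AArch64: stlr, x86-64: xchg
        r1 = y.load(std::memory_order_seq_cst);  // AArch64: ldar, x86-64: mov
    });
    std::thread t2([&] {
        y.store(1, std::memory_order_seq_cst);
        r2 = x.load(std::memory_order_seq_cst);
    });
    t1.join();
    t2.join();
    return r1 == 0 && r2 == 0;
}

// Repeat the test and count how often the forbidden outcome shows up.
int count_both_zero(int trials) {
    int hits = 0;
    for (int i = 0; i < trials; ++i) {
        if (store_buffer_both_zero()) ++hits;
    }
    return hits;
}
```

If the loads were weakened to relaxed, the language would allow both threads to read 0. An ARM64 stlr followed by a plain ldr can actually produce that outcome, while an x86 xchg store still rules it out.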

But x86 seq_cst stores drain the store buffer on the spot, even if there is no later seq_cst load, store, or RMW in the same thread. This lack of reordering with later non-SC or non-atomic loads/stores is what makes it stronger (and more expensive) than necessary.

Herb Sutter explained this earlier in the video, at around 36:00. He points out that xchg is stronger than necessary, not just an SC-release that can one-way reorder with later non-SC operations. "So what we have here, is overkill. Much stronger than is necessary" at 36:30.

(Side note: right around 36:00, he misspoke: he said "we're not going to use these first 3 guarantees" (that x86 doesn't reorder loads with loads or stores with stores, or stores with older loads). But those guarantees are why an SC load can be just a plain mov. The same goes for acq/rel being just a plain mov for both load and store. That's why, as he says, lfence and sfence are irrelevant for std::atomic.)
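As a small illustration of why those guarantees matter, here is a message-passing sketch with acquire / release. The function name and the payload value are hypothetical; the remarks about plain mov in the comments describe typical x86-64 code generation and are an assumption, not a language guarantee.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Publishes a non-atomic payload through a release store and reads it
// back after an acquire load has seen the flag. Returns what the
// consumer observed.
int message_passing() {
    int payload = 0;                  // ordinary, non-atomic data
    std::atomic<bool> ready{false};
    int seen = -1;

    std::thread producer([&] {
        payload = 42;
        // Release store: a plain mov on x86-64, since stores are not
        // reordered with earlier loads or stores.
        ready.store(true, std::memory_order_release);
    });
    std::thread consumer([&] {
        // Acquire load: also a plain mov on x86-64, since loads are
        // not reordered with later loads or stores.
        while (!ready.load(std::memory_order_acquire)) {
        }
        seen = payload;  // happens-after the write of 42
    });

    producer.join();
    consumer.join();
    return seen;
}
```

No lfence or sfence appears anywhere in what a compiler needs to emit for this, which matches the point made in the talk.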

So anyway, ARM64 can hit the sweet spot with no extra barrier instructions, being exactly strong enough for seq_cst but no stronger. (ARMv8.3 with ldapr is slightly stronger than acq_rel requires, e.g. ARM64 still forbids IRIW reordering, but only a few machines can actually exhibit IRIW reordering in practice, notably POWER.)
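For reference, IRIW (independent reads of independent writes) looks like this as a litmus test. This is a rough sketch with made-up function names, parameterized on the memory orders so both variants can be tried. With seq_cst the two readers must agree on the order of the two stores; with acquire / release the C++ model allows them to disagree, although x86 and ARM64 hardware will not show it.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// One IRIW trial. Returns true if the two readers disagreed about the
// order in which the two independent stores became visible.
bool iriw_disagree(std::memory_order st, std::memory_order ld) {
    std::atomic<int> x{0}, y{0};
    int a = 0, b = 0, c = 0, d = 0;
    std::thread w1([&] { x.store(1, st); });
    std::thread w2([&] { y.store(1, st); });
    std::thread r1([&] { a = x.load(ld); b = y.load(ld); });  // x, then y
    std::thread r2([&] { c = y.load(ld); d = x.load(ld); });  // y, then x
    w1.join();
    w2.join();
    r1.join();
    r2.join();
    // r1 saw x but not y, while r2 saw y but not x.
    return a == 1 && b == 0 && c == 1 && d == 0;
}

// Count disagreements over several trials using seq_cst everywhere.
int count_iriw_disagreements(int trials) {
    int hits = 0;
    for (int i = 0; i < trials; ++i) {
        if (iriw_disagree(std::memory_order_seq_cst,
                          std::memory_order_seq_cst)) {
            ++hits;
        }
    }
    return hits;
}
```

Calling `iriw_disagree(std::memory_order_release, std::memory_order_acquire)` exercises the weaker variant; a run that never shows disagreement there only says something about the hardware it ran on, not about what the language permits.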

Other ISAs with both L and S below the bar need extra barriers as part of their seq_cst load and seq_cst store recipes (https://www.cl.cam.ac.uk/~pes20/cpp/cpp0xmappings.html).