I have a web application running behind a ClusterIP service on a worker node, on port 5001. I'm using k3s for the cluster deployment, and I've checked that the cluster connection is working fine.

The deployment has the container port set to 5001:

```yaml
ports:
  - containerPort: 5001
```
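For context, here is a minimal sketch of how such a Deployment might look. The image name and replica count are hypothetical. The `io.kompose.service: user-ms` pod label is assumed, because it is what the Service's `selector` below uses to find the pods.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: user-ms
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: user-ms # must match the pod template labels below
  template:
    metadata:
      labels:
        io.kompose.service: user-ms # assumed label; the Service selector matches on it
    spec:
      containers:
        - name: user-ms
          image: user-ms:latest # hypothetical image name
          ports:
            - containerPort: 5001 # the Service's targetPort points at this port
```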

Here is the service file:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    io.kompose.service: user-ms
  name: user-ms
spec:
  ports:
    - name: http
      port: 80
      targetPort: 5001
  selector:
    io.kompose.service: user-ms
status:
  loadBalancer: {}
```

And here is the Ingress file:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: user-ms-ingress
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: user-ms
                port:
                  number: 80
```

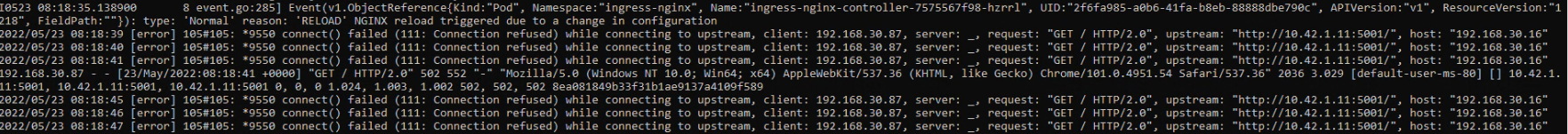

I'm getting a 502 Bad Gateway error whenever I open my worker or master node's IP address in the browser.

Expected: it should return the web application page.

I looked online, and most answers point to a wrong port in the Service or Ingress, but my ports are correct; I triple-checked them:

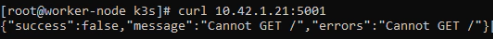

I also tried:

- Calling the `user-ms` service on port 80 from another pod: worked
- Calling the cluster IP on port 5001 from the worker node: worked

The ports are correct, so why is the Ingress returning 502?

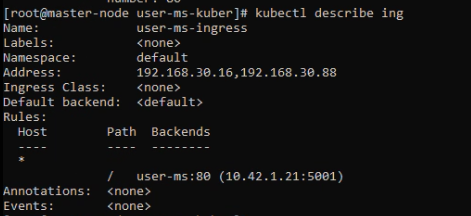

Here is the output of `kubectl describe ingress`:

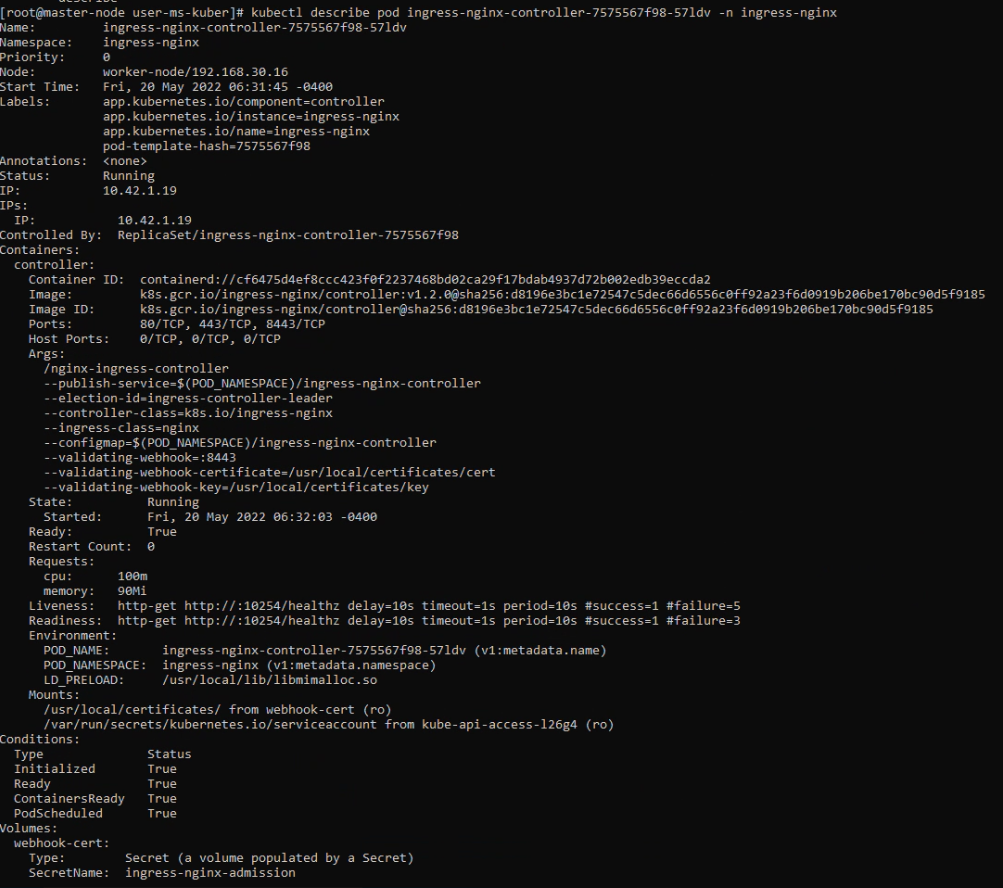

And here is the `kubectl describe` output for the nginx ingress controller pod:

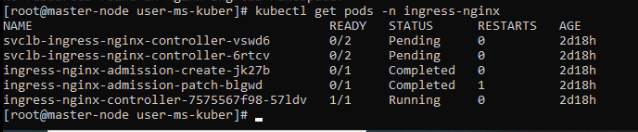

The nginx ingress pod is running normally:

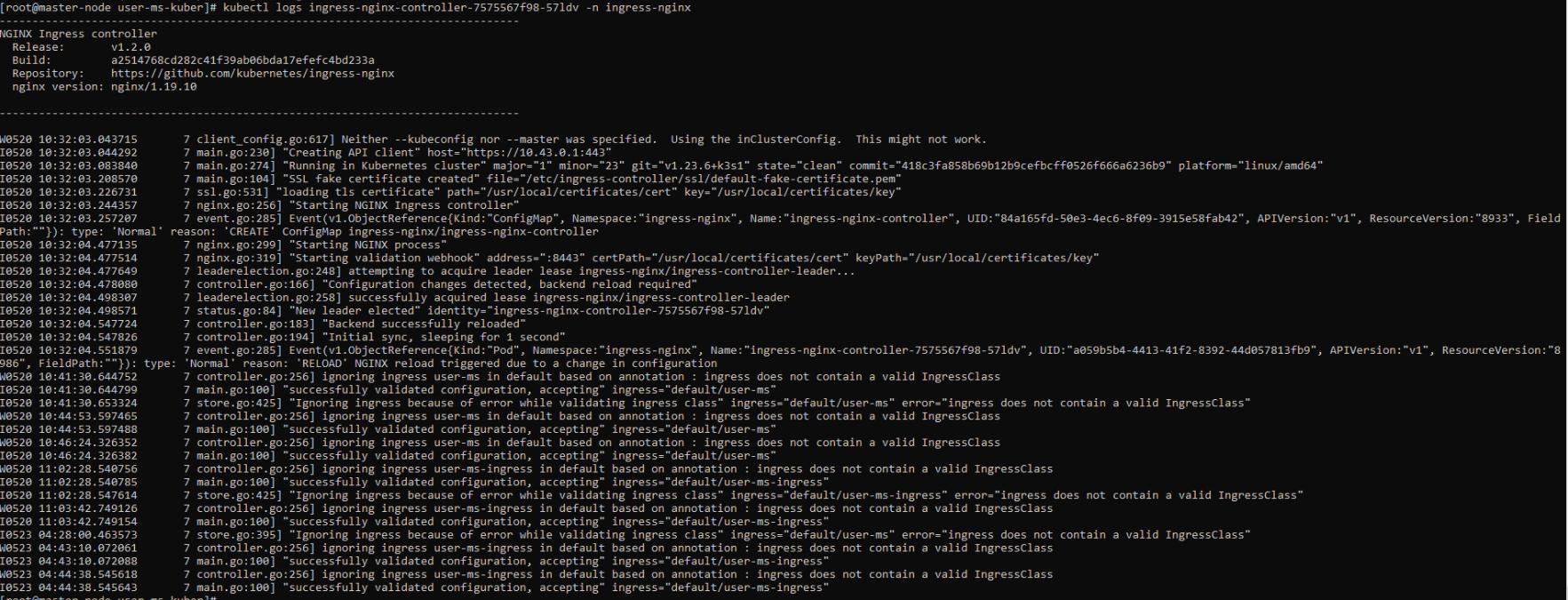

Here are the logs of the nginx ingress pod:

Sorry for the images, but I'm using a streaming machine to access the terminal, so I can't copy and paste.

How should I go about debugging this error?

Answer:

You can turn on access logging in nginx. This gives you more logs from the ingress controller and lets you trace every request routed through the ingress. If you are loading the UI, you will see the requests coming in from the browser; if you are hitting a particular endpoint, those calls will show up in the nginx controller logs. From this you can tell whether incoming requests are actually routed to the right service, and then start debugging the service itself (for example, check whether you can curl the endpoint from another pod in the cluster).
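To run the "curl from another pod" check, a throwaway Pod like the sketch below can be used. The Pod name is hypothetical, `curlimages/curl` is assumed to be a suitable public image that ships `curl`, and the Pod is assumed to be created in the same namespace as the `user-ms` Service so the short DNS name resolves.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: curl-debug # hypothetical name
spec:
  restartPolicy: Never # run the request once, then stop
  containers:
    - name: curl
      image: curlimages/curl # assumed image that provides curl
      # Calls the Service by its DNS name on the Service port (80 in the question);
      # -v prints the connection details and response headers.
      command: ["curl", "-sv", "http://user-ms:80/"]
```

After applying it with `kubectl apply -f`, the result can be read with `kubectl logs curl-debug`. If this succeeds while the ingress still returns 502, the problem is more likely on the ingress side than in the Service.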

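I noticed that you are using the image `k8s.gcr.io/ingress-nginx/controller:v1.2.0`. If you installed it with Helm, there should be a ConfigMap for the ingress controller (called `kubernetes-ingress` in my setup; the name depends on how the controller was installed). Check its `disable-access-log` key: the upstream ingress-nginx default is `"false"`, but an install can override it. If it is set to `"true"`, change it to `"false"` and you should start seeing more logs from the ingress controller. You may need to restart (bounce) the ingress controller pods if detailed logs still don't appear.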

```shell
kubectl edit cm -n <namespace> kubernetes-ingress
```

```yaml
apiVersion: v1
data:
  disable-access-log: "false" # turn this to false
  map-hash-bucket-size: "128"
  ssl-protocols: SSLv2 SSLv3 TLSv1 TLSv1.1 TLSv1.2 TLSv1.3
kind: ConfigMap
```

Answer:

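If you build the image with Docker, add `EXPOSE 5001` to the Dockerfile. (`EXPOSE` only documents the port; in Kubernetes, traffic reaches the container through the Service's `targetPort`.)

The service can then be reached through the ingress controller's external IP (or DNS name, if configured), followed by the path defined in the Ingress.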

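Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: user-ms
spec:
  ports:
    - port: 5001
      protocol: TCP
      targetPort: 5001
  selector:
    app: user-ms # must match the pod labels in your Deployment (io.kompose.service: user-ms in the question)
  type: ClusterIP
```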

Ingress route:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: user-ms-ingress
  annotations:
    kubernetes.io/ingress.class: nginx # deprecated; newer clusters use spec.ingressClassName
    nginx.ingress.kubernetes.io/proxy-buffer-size: 128k
    nginx.ingress.kubernetes.io/proxy-buffering: 'on'
    nginx.ingress.kubernetes.io/proxy-buffers-number: '4'
    nginx.ingress.kubernetes.io/rewrite-target: /$1
    nginx.ingress.kubernetes.io/use-regex: 'true'
spec:
  rules:
    - http:
        paths:
          - path: /(.*) # $1 in rewrite-target refers to this capture group
            pathType: ImplementationSpecific
            backend:
              service:
                name: user-ms
                port:
                  number: 5001
```

Answer:

OK, I managed to figure this out. In its default setup, K3s uses Traefik as its ingress controller, which is why my nginx ingress logs didn't show anything about the 502 Bad Gateway.

I decided to tear down my cluster and set it up again, this time following the suggestions from this issue.
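A minimal sketch of disabling Traefik when setting the cluster up again, assuming the k3s server reads its configuration file from the default path `/etc/rancher/k3s/config.yaml`. Each key in that file mirrors a command-line flag, so this is equivalent to starting the server with `--disable traefik`.

```yaml
# /etc/rancher/k3s/config.yaml on the server node, read by k3s at startup.
# Same effect as passing --disable traefik to the k3s server.
disable:
  - traefik
```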

I also found that I had set a network policy for all of my microservices. I deleted the network policy and removed its settings from all my deployment files.

Finally, I checked once more, and I can now access my API and Swagger page normally.
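Deleting the policy works, but if you want to keep network policies, a policy that explicitly lets the ingress controller reach the pods might look like the sketch below. The policy name is hypothetical. It assumes the nginx controller runs in a namespace named `ingress-nginx` (recent Kubernetes versions label every namespace with `kubernetes.io/metadata.name`) and that the pods carry the `io.kompose.service: user-ms` label from the question. Note that `port` here is the container port (5001), not the Service port (80).

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-ingress-nginx-to-user-ms # hypothetical name
spec:
  podSelector:
    matchLabels:
      io.kompose.service: user-ms # the pods this policy protects
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: ingress-nginx # assumed controller namespace
      ports:
        - protocol: TCP
          port: 5001 # container port the controller connects to
```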

TL;DR:

- If you are using the nginx ingress controller on K3s, remember to disable Traefik when you create the cluster.
- Don't forget to set the ingress class in your Ingress configuration.
- Be careful with network policies: if a policy doesn't allow traffic from the ingress controller, the nginx pod won't be able to reach the other pods on that network.
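As a sketch of the second point, the question's Ingress with the class set through `spec.ingressClassName` could look like this. It assumes the controller's IngressClass is named `nginx` (the usual name for an ingress-nginx install; `kubectl get ingressclass` shows what exists in your cluster).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: user-ms-ingress
spec:
  ingressClassName: nginx # assumed IngressClass name; routes this Ingress to ingress-nginx instead of Traefik
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: user-ms
                port:
                  number: 80 # Service port; the Service forwards to targetPort 5001
```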