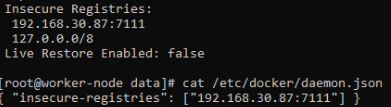

I'm using k3s to deploy my cluster. On my worker node, I have already set up an insecure registry in /etc/docker/daemon.json for a private registry on our internal network:

{ "insecure-registries": ["192.168.30.87:7111"] }

I restarted both Docker and the machine, and I can see the setting in the output of docker info:

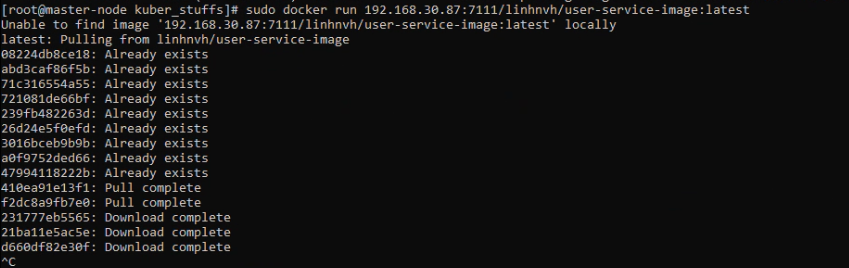

When I ran

docker run 192.168.30.87:7111/linhnvh/user-service-image:latest

it worked perfectly:

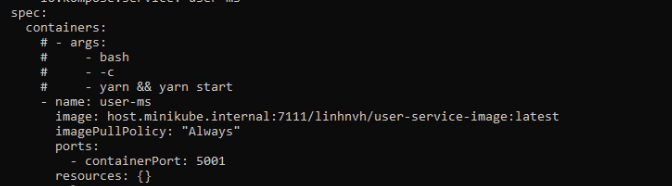

I then set the same image in my Kubernetes deployment:

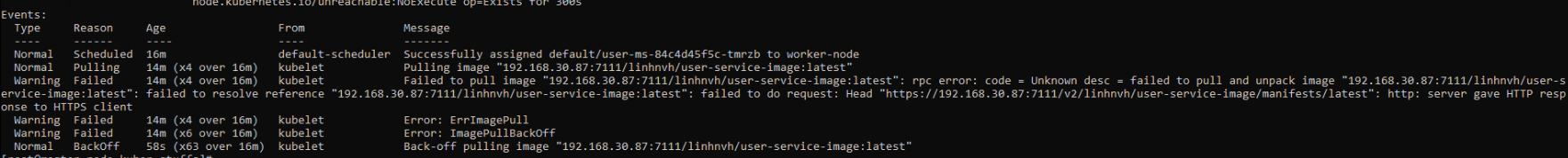

When I apply the deployment, I get the following error:

Why is this still not working in my Kubernetes cluster? I can run docker run with the same image URL on the worker node without any problem.

It just doesn't work for the Kubernetes deployment. The insecure-registries setting is there in docker info.

Sorry for the screenshots; I'm working on a streaming machine, so I can't copy and paste.

Answer:

Finally, after 4 hours of searching, I came across this issue: https://github.com/k3s-io/k3s/issues/1802

Because I set up the cluster with k3s, the pods are run by k3s's embedded containerd runtime (which you inspect with crictl), not by Docker, so /etc/docker/daemon.json has no effect on them. That led me to this documentation: https://rancher.com/docs/k3s/latest/en/installation/private-registry/
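To confirm which runtime each node is actually using, the node status reports it (kubectl get nodes -o wide shows it in the CONTAINER-RUNTIME column). The sketch below is one way to turn that into a quick check; `report_runtimes` is a hypothetical helper name, and it reads node-name/runtime pairs on stdin so the classification can be tried without a cluster.

```shell
#!/bin/sh
# Hypothetical helper: classify each node's container runtime.
# Input: lines of "<node><TAB><runtime version>",
# e.g. "worker1<TAB>containerd://1.4.3-k3s1".
report_runtimes() {
  tab="$(printf '\t')"
  while IFS="$tab" read -r node runtime; do
    case "$runtime" in
      # Docker pulls the images, so its insecure-registries setting is used.
      docker://*) echo "$node: $runtime (Docker: daemon.json applies)" ;;
      # Any other runtime (k3s defaults to containerd) ignores daemon.json.
      *) echo "$node: $runtime (not Docker: daemon.json is ignored)" ;;
    esac
  done
}

# Usage, from a machine with kubectl access to the cluster:
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}' | report_runtimes
```

On a default k3s install every node should report a containerd:// runtime, which is why the Docker setting never reached the pods.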

I created registries.yaml in /etc/rancher/k3s/ with the following content:

    mirrors:
      "192.168.30.87:7111":
        endpoint:
          - "http://192.168.30.87:7111"
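Because the file has to exist on every node, it can be less error-prone to generate it than to retype the YAML indentation by hand. The sketch below is a POSIX shell function, hypothetically named `render_registries_yaml`, that prints the mirror entry shown above for each plain-HTTP registry address passed to it. The function name and the idea of redirecting its output into /etc/rancher/k3s/registries.yaml are assumptions for illustration, not part of k3s.

```shell
#!/bin/sh
# Hypothetical helper: print a k3s registries.yaml that maps each
# plain-HTTP registry (host:port) to an http:// endpoint at the same address.
render_registries_yaml() {
  if [ "$#" -eq 0 ]; then
    echo "usage: render_registries_yaml HOST:PORT [HOST:PORT ...]" >&2
    return 1
  fi
  echo "mirrors:"
  for registry in "$@"; do
    # The mirror key must match the registry part of the image name exactly.
    printf '  "%s":\n' "$registry"
    printf '    endpoint:\n'
    printf '      - "http://%s"\n' "$registry"
  done
}

# Usage (as root, on every node that will run pods):
#   mkdir -p /etc/rancher/k3s
#   render_registries_yaml 192.168.30.87:7111 > /etc/rancher/k3s/registries.yaml
```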

After saving, I restarted the k3s service with systemctl restart k3s.

After that, my pod was able to pull the image. Remember to put the registry address in both places in registries.yaml: as the key under mirrors, and again (with the http:// prefix) under endpoint.
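The key under mirrors has to match the registry part of the image name used in the deployment exactly, including the port. A quick way to check this on a node is sketched below; `registry_of` and `check_mirror_key` are hypothetical helper names, and the sketch assumes the image reference starts with an explicit host:port (as 192.168.30.87:7111/linhnvh/user-service-image:latest does) and that the key is quoted as in the example file.

```shell
#!/bin/sh
# Hypothetical helpers for sanity-checking registries.yaml against an image name.

# Print the registry part (everything before the first "/") of an image reference.
# Assumes the reference includes an explicit registry, e.g. host:port/repo:tag.
registry_of() {
  printf '%s\n' "${1%%/*}"
}

# Succeed if the given registries.yaml contains a quoted mirror key
# for the image's registry; the file defaults to the k3s location.
check_mirror_key() {
  image="$1"
  file="${2:-/etc/rancher/k3s/registries.yaml}"
  host="$(registry_of "$image")"
  if grep -qF "\"$host\":" "$file"; then
    echo "ok: $file has a mirror entry for $host"
  else
    echo "missing: no mirror entry for $host in $file" >&2
    return 1
  fi
}

# Usage:
#   check_mirror_key 192.168.30.87:7111/linhnvh/user-service-image:latest
```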

This file needs to be in place on every node that will run pods, and the command for restarting the service depends on the node's role:

On the server (master) node: systemctl restart k3s

On worker (agent) nodes: systemctl restart k3s-agent
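If you script the change across several nodes, the restart step has to pick the right unit. The sketch below assumes k3s was installed with the standard install script, which by default creates k3s.service on server nodes and k3s-agent.service on agent nodes under /etc/systemd/system. The function name `k3s_unit` and its optional directory argument are hypothetical; the argument exists only so the logic can be tried without a real install.

```shell
#!/bin/sh
# Hypothetical helper: print the name of the k3s systemd unit on this node.
# Assumes the default install-script layout: k3s.service on server (master)
# nodes, k3s-agent.service on agent (worker) nodes.
k3s_unit() {
  dir="${1:-/etc/systemd/system}"
  if [ -f "$dir/k3s.service" ]; then
    echo "k3s"
  elif [ -f "$dir/k3s-agent.service" ]; then
    echo "k3s-agent"
  else
    echo "no k3s unit found in $dir" >&2
    return 1
  fi
}

# Usage (as root), after writing /etc/rancher/k3s/registries.yaml:
#   unit="$(k3s_unit)" && systemctl restart "$unit"
```

Checking the unit file rather than hard-coding the role keeps the same script usable on both server and agent nodes.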