What happens when the loss function is cubic or quartic instead of the traditional squared error? I want to know what happens if we use (y-y')^3, (y-y')^4, or higher powers instead of (y-y')^2, where y' is the predicted value. How do these loss functions behave?

Answer:

The exponent 2 is not chosen arbitrarily: in your formulation, (y-y')^2 is the square of the Euclidean distance, i.e. the squared L2 norm.

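You can use other norms, the L^p norm in general, which leads to losses of the form $\sum_i |y_i - y'_i|^p$ (note the absolute value, which matters for odd p). See this explanation: https://angms.science/doc/Math/LA/LA_4_VectorLpNorm.pdf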

Different norms and loss functions behave differently (for example, in how strongly they react to outliers), so you choose one depending on the problem you want to solve.
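As a rough illustration of how the exponent changes the behaviour, here is a minimal Python sketch. It assumes the simplest possible model, a single constant c predicted for every point, and minimizes $\sum_i |y_i - c|^p$ by ternary search; the toy data and the helper names `lp_loss` and `fit_constant` are made up for this example.

```python
# Sketch: fit a single constant c to data by minimizing sum_i |y_i - c|^p.
# Assumption (illustration only): the "model" is just one constant prediction,
# so the effect of the exponent p is easy to see.

def lp_loss(y, c, p):
    """p-th power of the L^p norm of the residuals: sum_i |y_i - c|^p."""
    return sum(abs(yi - c) ** p for yi in y)


def fit_constant(y, p, iters=200):
    """Return c minimizing lp_loss(y, c, p) by ternary search.

    For p >= 1 the loss is convex in c and a minimizer lies in [min(y), max(y)],
    so repeatedly shrinking that interval converges to a minimizer.
    """
    lo, hi = min(y), max(y)
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if lp_loss(y, m1, p) < lp_loss(y, m2, p):
            hi = m2  # a minimizer lies in [lo, m2]
        else:
            lo = m1  # a minimizer lies in [m1, hi]
    return (lo + hi) / 2


if __name__ == "__main__":
    y = [0.0, 1.0, 2.0, 3.0, 10.0]  # toy data; 10.0 is the outlier
    for p in (1, 2, 4, 8):
        print(p, round(fit_constant(y, p), 3))
```

For p = 1 the best constant is the median (2 here), for p = 2 it is the mean (3.2), and as p grows it moves toward the midrange (0 + 10)/2 = 5, i.e. the point equidistant from the two extremes, so the outlier at 10 weighs more and more.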

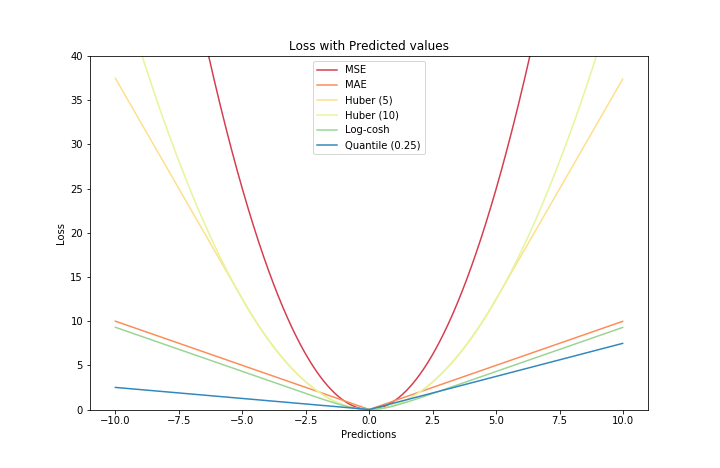

Good article here: 5 Regression Loss Functions All Machine Learners Should Know

Be careful, though: if you minimize (y-y')^3 directly, you might not get the behaviour you were hoping for, because f: x ↦ x^3 has no global minimum (it decreases without bound as x → -∞).
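To make this concrete, assume (for illustration only) a model that predicts the same constant c for every point, and sum the cubed error over the n data points:

$$
L_3(c) = \sum_{i=1}^{n} (y_i - c)^3, \qquad \frac{dL_3}{dc} = -3 \sum_{i=1}^{n} (y_i - c)^2 \le 0
$$

Since the derivative is never positive, $L_3$ keeps decreasing as c grows, and $L_3(c) \to -\infty$ as $c \to +\infty$ because the leading term is $-n c^3$. A minimizer therefore never settles.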

This might also be of interest to you https://stats.stackexchange.com/questions/200416/is-regression-with-l1-regularization-the-same-as-lasso-and-with-l2-regularizati

Answer:

To build your intuition, let's start with $e_4 = (y-y')^4$.

This is the square of $e_2 = (y-y')^2$, i.e. $e_4 = e_2^2$.

Hence points far from the regression line generate much more error with $e_4$: a residual larger than 1 in magnitude is amplified more by $e_4$ than by $e_2$, while a residual smaller than 1 is shrunk. Since the regression algorithm minimizes the total loss over all points, the line is pulled toward the distant points (outliers). To keep the error on the outliers small, the line tends to settle at roughly equal distance from the extreme outliers and cares comparatively little about the other points. In the limit of very large even exponents, this becomes the minimax (Chebyshev) fit, which minimizes the largest absolute residual.
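A small Python sketch of the weighting effect: it compares how much of the total loss a single outlier accounts for under $e_2$ and $e_4$. The residual values and the helper name `outlier_share` are made up for illustration.

```python
# Sketch: share of the total loss contributed by one outlier under
# the squared loss e2 = r^2 and the quartic loss e4 = r^4.
# The residuals are illustration values, not real data.

def outlier_share(residuals, power, outlier_index):
    """Fraction of sum_i r_i^power contributed by the residual at outlier_index."""
    losses = [r ** power for r in residuals]
    return losses[outlier_index] / sum(losses)


residuals = [1.0, -1.0, 1.0, -1.0, 5.0]   # four ordinary points, one outlier
share_e2 = outlier_share(residuals, 2, 4)  # 25 / 29
share_e4 = outlier_share(residuals, 4, 4)  # 625 / 629
print(f"e2: outlier share = {share_e2:.3f}")  # 0.862
print(f"e4: outlier share = {share_e4:.3f}")  # 0.994

# Residuals below 1 in magnitude shrink when raised to the 4th power,
# residuals above 1 grow: 0.5**4 < 0.5**2 but 5**4 > 5**2.
for r in (0.5, 1.0, 2.0, 5.0):
    print(r, r ** 2, r ** 4)
```

With the quartic loss, the single outlier accounts for over 99% of the total, so the optimizer effectively spends its effort on that point.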

In the case of odd exponents such as $e_3 = (y-y')^3$:

- If you use it as is and try to minimize it, the line will go to infinity, since $e_3$ has no minimum: the predictions can always be pushed further up to make the residuals more negative.

- In practice you would rather fit the regression by minimizing something like $\left|\sum_i e_3\right|$, which has 0 as its minimum.
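Here is a minimal Python sketch of the second option, again assuming (for illustration only) a model that predicts one constant c. Because $\sum_i (y_i - c)^3$ is decreasing in c, its unique root can be found by bisection; that root is where $\left|\sum_i e_3\right|$ reaches 0. The data and the helper names `cubed_residual_sum` and `root_of_cubed_sum` are hypothetical.

```python
# Sketch, assuming a model that predicts a single constant c.
# S(c) = sum_i (y_i - c)^3 is decreasing in c (dS/dc = -3 * sum_i (y_i - c)^2),
# so minimizing S itself drives c to +infinity, while |S(c)| reaches its
# minimum value 0 at the unique root of S.

def cubed_residual_sum(y, c):
    return sum((yi - c) ** 3 for yi in y)


def root_of_cubed_sum(y, iters=200):
    """Find c with sum_i (y_i - c)^3 = 0 by bisection on [min(y), max(y)].

    S(min(y)) >= 0 and S(max(y)) <= 0 because all residuals there share one sign,
    and S is decreasing, so the root lies in between.
    """
    lo, hi = min(y), max(y)
    for _ in range(iters):
        mid = (lo + hi) / 2
        if cubed_residual_sum(y, mid) > 0:
            lo = mid  # S still positive: the root is to the right
        else:
            hi = mid  # S non-positive: the root is at mid or to the left
    return (lo + hi) / 2


y = [0.0, 1.0, 2.0, 3.0, 10.0]  # toy data with one high outlier
c3 = root_of_cubed_sum(y)
print(round(c3, 3), cubed_residual_sum(y, c3))
```

Two observations about this toy setting: the condition $\sum_i (y_i - c)^3 = 0$ is exactly the first-order condition for minimizing $\sum_i (y_i - c)^4$, so here $\left|\sum_i e_3\right|$ and $e_4$ give the same fit, pulled well above the mean 3.2 by the outlier. With an intercept and a slope, however, $\sum_i e_3 = 0$ is a single equation in two unknowns, so in general a whole one-parameter family of lines reaches 0 and an extra criterion is needed to pick one.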

In that case the same thing happens: outliers have a stronger influence on the regression line. The difference is that low outliers (negative residuals) compensate for high outliers (positive residuals), so if the regression line sits at equal distance from them, their total contribution is 0. This gives the other points back some importance.