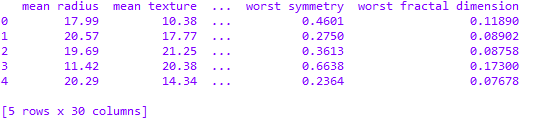

Ok, I know what the features are. Great. Then all the fun happens, and we end up with this at the end.

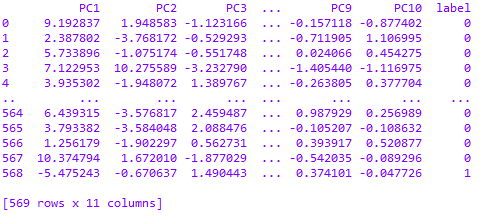

df_new = pd.DataFrame(X_pca_95, columns=['PC1','PC2','PC3','PC4','PC5','PC6','PC7','PC8','PC9','PC10'])

df_new['label'] = cancer.target

df_new

Of the 30 features that we started with, how do we know what the final 10 columns consist of? It seems like there has to be some kind of last step to map the df_new to df?

CodePudding user response:

To understand this, it helps to know a little more about PCA. PCA computes the eigenvectors and eigenvalues of the covariance matrix of the features. The eigenvectors are the principal components (directions in the original feature space), and each eigenvalue is the variance of the data along its eigenvector. To reduce dimensionality, you keep the eigenvectors that have the largest eigenvalues.
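As a rough illustration of the idea above, here is a minimal NumPy sketch that does the eigen-decomposition by hand. The data is synthetic and the choice of `k = 2` is arbitrary; both are assumptions for the example only. scikit-learn's PCA uses an SVD-based routine internally, so this is a conceptual sketch, not its implementation.

```python
import numpy as np

# Synthetic data for illustration only: 200 samples, 4 correlated features.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4)) @ rng.normal(size=(4, 4))

# Center the data and compute the covariance matrix of the features.
X_centered = X - X.mean(axis=0)
cov = np.cov(X_centered, rowvar=False)

# eigh is meant for symmetric matrices; it returns eigenvalues in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# Reorder so the direction with the largest variance comes first.
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Keep the top k eigenvectors and project the centered data onto them.
k = 2
X_reduced = X_centered @ eigenvectors[:, :k]
print(X_reduced.shape)  # (200, 2)
```

Each column of `eigenvectors` has one entry per original feature, which is why every projected column mixes all of the original features.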

Now, if you look at the documentation for the PCA class in scikit-learn, you will find some useful attributes, such as the following:

components_ ndarray of shape (n_components, n_features): Principal axes in feature space, representing the directions of maximum variance in the data. The components are sorted by explained_variance_.

explained_variance_ratio_ ndarray of shape (n_components,): Percentage of variance explained by each of the selected components. If n_components is not set then all components are stored and the sum of the ratios is equal to 1.0.

explained_variance_ratio_ is a very useful attribute: you can use it to choose how many principal components to keep, based on a threshold for the fraction of variance covered. For example, suppose the values in this array are [0.4, 0.3, 0.2, 0.1]. If we take the first three components, they cover 90% of the variance of the original data.
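The threshold selection can be sketched with a cumulative sum. The array below is the made-up example from this answer, not output from a fitted model, and the 0.85 threshold is an arbitrary choice (it also sidesteps floating-point rounding when comparing against exactly 0.9).

```python
import numpy as np

# Example ratios from the text above; with a fitted model you would use
# pca.explained_variance_ratio_ instead.
ratios = np.array([0.4, 0.3, 0.2, 0.1])

# Running total of variance covered by the first 1, 2, 3, ... components.
cumulative = np.cumsum(ratios)

# Smallest number of components whose cumulative ratio reaches the threshold.
threshold = 0.85
n_keep = int(np.argmax(cumulative >= threshold)) + 1
print(n_keep)  # 3
```

scikit-learn can also do this selection itself: passing a float between 0 and 1 as `n_components` to `PCA` keeps as many components as are needed to explain that fraction of the variance.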

CodePudding user response:

Almost surely, each of the 10 resulting columns is a linear combination of all 30 original features. The PCA object has an attribute components_ that holds the coefficients defining each principal component in terms of the original features: each row contains the 30 weights for one component.