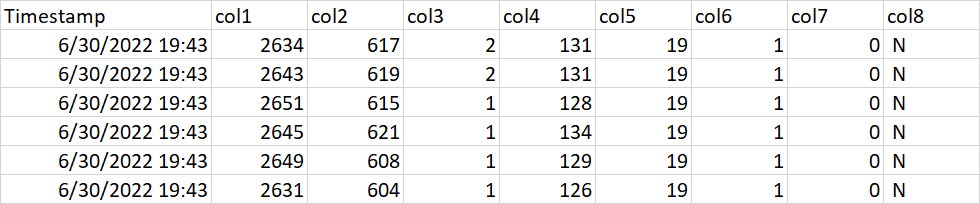

I am reading a very large CSV file (~1 million rows) into a pandas DataFrame using pd.read_csv() with the options below. (Note that the Timestamp column also contains seconds; they are not shown here because the data was copied and pasted exactly as it appears in the CSV file.)

    pd.read_csv(file,
                index_col='Timestamp',
                engine='c',
                na_filter=False,
                parse_dates=['Timestamp'],
                infer_datetime_format=True,
                low_memory=True)

My question is: how can I speed up the read? It is taking forever to load the file.

CodePudding user response:

dask appears to be quicker at reading .csv files than plain pandas, and the syntax remains similar.

The answer to this related question appears to help, and it uses dask: "How to speed up loading data using pandas?"

I also use this method when working with .csv files and performance is an issue.