I am trying to change a SQL query into Pyspark. The SQL Query looks like this. I need to set ZIPCODE='0' where the below conditions satisfies.

UPDATE COUNTRY_TABLE SET COUNTRY_TABLE.ZIPCODE = "0"

WHERE (((COUNTRY_TABLE.STATE)="TN" Or

(COUNTRY_TABLE.STATE)="DEL" Or

(COUNTRY_TABLE.STATE)="UK" Or

(COUNTRY_TABLE.STATE)="UP" Or

(COUNTRY_TABLE.STATE)="HP" Or

(COUNTRY_TABLE.STATE)="JK" Or

(COUNTRY_TABLE.STATE)="MP") AND ((Len([ZIPCODE]))<"5"));

Pyspark Query i am trying to implement is

df=df.withColumn('ZIPCODE', F.when( (col('COUNTRY_TABLE.STATE') == 'TN') | (col('COUNTRY_TABLE.STATE') == 'DEL') \

| (col('COUNTRY_TABLE.STATE') == 'UK') | (col('COUNTRY_TABLE.STATE') == 'UP') | (col('COUNTRY_TABLE.STATE') == 'HP') \

| (col('COUNTRY_TABLE.STATE') == 'JK') | (col('COUNTRY_TABLE.STATE') == 'MP') & (col('length_ZIP') < '5'), '0')

.otherwise(df.ZIPCODE))

In my pyspark code i have used one column as length ZIP so basically what i am doing i am taking out length of column(ZIPCODE) in a separate column 'length_ZIP' and checking with the value with that column.

df=df.withColumn('ZIPCODE', substring('ZIPCODE', 1,5)) -- take only first five character

df=df.withColumn('length_ZIP',length(df.ZIPCODE))

I am not getting my expected result. can anyone help me what i can do to get the result.

CodePudding user response:

First you can clean up your code this way:

states = ["TN", "DEL", "UK", "UP", "HP", "JK", "MP"]

and then:

import pyspark.sql.functions as F

df = df.withColumn("length_ZIP",F.length("ZIPCODE"))

df = df.withColumn("ZIPCODE", F.when(F.col("state").isin(states) & (F.col('length_ZIP') < '5'), '0').otherwise(df["ZIPCODE"]))

CodePudding user response:

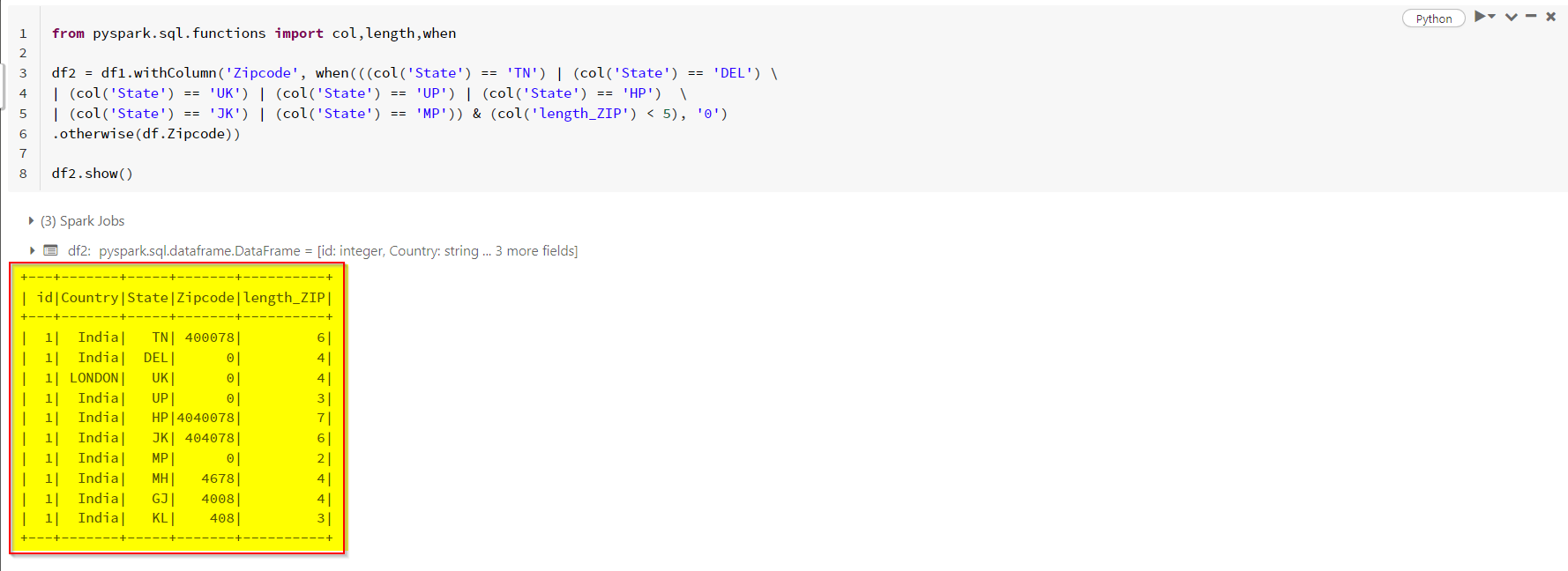

In your case you are giving AND condition along with OR condition without separating them because of that you are not getting desired output

To resolve this, keep your all OR conditions in a Round bracket and then give the AND condition. It will first check all OR condition and then for that it will check AND condition and give output.

from pyspark.sql.functions import col,length,when

df2 = df1.withColumn('Zipcode', when(((col('State') == 'TN') | (col('State') == 'DEL') \

| (col('State') == 'UK') | (col('State') == 'UP') | (col('State') == 'HP') \

| (col('State') == 'JK') | (col('State') == 'MP')) & (col('length_ZIP') < 5), '0')

.otherwise(df.Zipcode))

df2.show()

- Execution or Output