In Spark, I have a large list (millions) of elements that contain items associated with each other. Examples:

1: ("A", "C", "D") # Each of the items in this array is associated with any other element in the array, so A and C are associated, A, and D are associated, and C and D are associated.

2: ("F", "H", "I", "P")

3: ("H", "I", "D")

4: ("X", "Y", "Z")

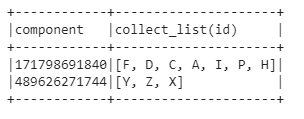

I want to perform an operation to combine the associations where there are associations that go across the lists. In the example above, we can see that all the items of the first three lines are associated with each other (line 1 and line 2 should be combined because according line 3 D and I are associated). Therefore, the output should be:

("A", "C", "D", "F", "H", "I", "P")

("X", "Y", "Z")

What type of transformations in Spark can I use to perform this operation? I looked like various ways of grouping the data, but haven't found an obvious way to combine list elements if they share common elements.

Thank you!

CodePudding user response:

There probably isn't enough information in the question to fully solve this but I would suggest creating an adjacency matrix/list using GraphX to represent it as a graph. From there hopefully you can solve the rest of your problem.

You can install the dependencies by using:

pip install -q pyspark==3.2 graphframes