I have two PySpark DataFrames, and both have a column named "Country". One DataFrame is the reference, and I want to compare the country names in the second DataFrame with the reference DataFrame to find the difference. The output I want is a list of the countries in the second DataFrame that don't exist in the reference DataFrame.

Note that the datasets I am working on are large; the following is only a sample.

I am running code on Databricks.

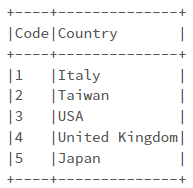

Sample data (the 1st DataFrame is the reference):

```python
# Prepare Data
data_1 = [(1, 'Italy'),
          (2, 'Taiwan'),
          (3, 'USA'),
          (4, 'United Kingdom'),
          (5, 'Japan')]

# Create DataFrame
columns = ['Code', 'Country']
df_1 = spark.createDataFrame(data=data_1, schema=columns)
df_1.show(truncate=False)
```

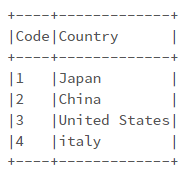

```python
# Prepare Data
data_2 = [(1, 'Japan'),
          (2, 'China'),
          (3, 'United States'),
          (4, 'italy')]

# Create DataFrame
columns = ['Code', 'Country']
df_2 = spark.createDataFrame(data=data_2, schema=columns)
df_2.show(truncate=False)
```

The expected output is:

```
['China', 'United States', 'italy']
```

Answer:

You can use `exceptAll`, which returns the rows of the first DataFrame that do not appear in the second (SQL `EXCEPT ALL`, so duplicates are kept):

```python
result = df_2.select('Country').exceptAll(df_1.select('Country'))
```

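This takes `df_2` as the base DataFrame and `df_1` as the reference, and removes the `Country` values of `df_2` that match values in `df_1`. The comparison is exact and case-sensitive, which is why `'italy'` remains even though the reference contains `'Italy'`. Because `exceptAll` is a multiset difference, a country that occurs more often in `df_2` than in `df_1` keeps its extra copies; if you only want countries that are absent from the reference, `subtract` (SQL `EXCEPT DISTINCT`) or an explicit left-anti join matches that intent more closely.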

`result` is already a DataFrame; call `.show()` to display it:

```python
result.show()
```

```
+-------------+
|      Country|
+-------------+
|        China|
|United States|
|        italy|
+-------------+
```

If you need the output as a Python list, collect the result to the driver and extract the `Country` field from each row. Row order is not guaranteed, so sort the list if order matters.

```python
# Collect the rows to the driver and pull the Country field out of each Row
output = [row['Country'] for row in result.collect()]
print(output)
```

```
['China', 'United States', 'italy']
```