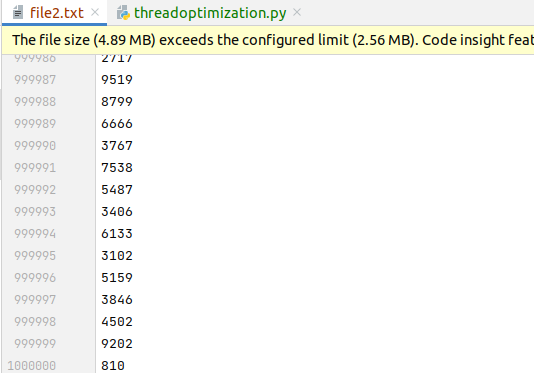

How can I implement multithreading to make this process faster? The program generates 1 million random numbers and writes them to a file. It takes just over 2 seconds, but I'm wondering whether multithreading would make it any faster.

    import random
    import time

    startTime = time.time()

    # "a+" opens the file for appending, creating it if it does not exist
    data = open("file2.txt", "a+")
    for i in range(1000000):
        number = str(random.randint(1, 9999))
        data.write(number + '\n')
    data.close()

    executionTime = (time.time() - startTime)
    print('Execution time in seconds: ', str(executionTime))

CodePudding user response:

The short answer: Not easily.

Here is an example of using a multiprocessing pool to speed up your code:

    import random
    import time
    from multiprocessing import Pool

    startTime = time.time()

    def f(_):
        number = str(random.randint(1, 9999))
        # No locking: the workers write to the file without any coordination
        data.write(number + '\n')

    data = open("file2.txt", "a+")
    with Pool() as p:
        p.map(f, range(1000000))
    data.close()

    executionTime = (time.time() - startTime)
    print(f'Execution time in seconds: {executionTime}')

Looks good? Wait! This is not a drop-in replacement: the processes are not synchronized, so not all 1,000,000 lines will be written (some will be overwritten in the same buffer)!
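One way to sidestep the synchronization problem is to let the worker processes only generate text and have the parent process do all the writing. The following is a minimal sketch of that idea, not a tuned solution: the names `make_chunk`, `write_random_numbers`, and `chunk_size` are made up for illustration, and the per-task seeds are an assumption added so that workers do not share random state.

```python
import random
from multiprocessing import Pool


def make_chunk(args):
    """Return `count` random numbers as one newline-terminated string."""
    count, seed = args
    rng = random.Random(seed)  # per-task generator (assumption, see lead-in)
    return "".join(f"{rng.randint(1, 9999)}\n" for _ in range(count))


def write_random_numbers(path, total=1_000_000, chunk_size=50_000):
    """Generate numbers in worker processes; write them from the parent only."""
    # Split the work into (count, seed) tasks; the last task may be smaller.
    counts = [chunk_size] * (total // chunk_size)
    if total % chunk_size:
        counts.append(total % chunk_size)
    tasks = [(count, random.getrandbits(64)) for count in counts]

    with Pool() as pool, open(path, "a") as data:
        # imap yields results in task order; only this process touches the file.
        for chunk in pool.imap(make_chunk, tasks):
            data.write(chunk)


if __name__ == "__main__":
    write_random_numbers("file2.txt")
```

Because a single process owns the file handle, no lines can be lost to competing writers. Whether this is actually faster than the plain loop depends on the machine: starting the processes and sending the chunks back to the parent has its own cost, which is part of why the short answer is "not easily".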

See