I am looking for a solution where we can use multiple when conditions for updating a column values in pyspark.

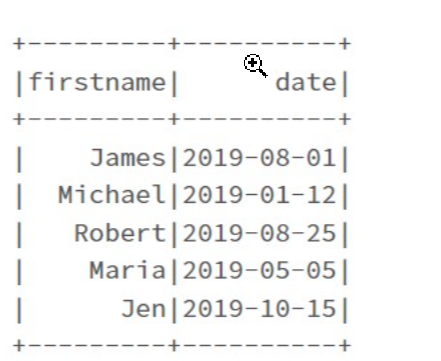

I am currently trying to achieve a solution when we have multiple conditions in spark how we can update a column. I have one dataframe in which we have three columns DATE, Flag_values, salary:

df = df.withColumn('Flag_values', F.when(F.col('DATE') < '2019-08-01', 'GIVEHIKE').otherwise(''))

After this I have to update the same column Flag_values now with multiple conditins:

new_column=when((col("Flag_values")=='') & (col("DATE")<'2019-12-15'),'GIVE BONUS ONLY').otherwise('')

df = df.withColumn("Flag_values", new_column)

But when I am running these two queries together the second query is overwriting the first query with data which I dont want. I want data for both query conditions.

The other queries that I have also tried are:

df = df.withColumn('Flag_values', F.when(F.col('DATE') < '2019-08-01', 'GIVEHIKE').otherwise('PYTHON'))

df = df.withColumn('Flag_values', F.when((F.col('DATE') < '2019-12-15') & (F.col('Flag_values') == 'PYTHON') , 'GIVE BONUS').otherwise('JAVA'))

I thought may be otherwise null is creating issue, but again after running the query I am getting output of second query only. Seems like it is overwriting first query data. Is there any approach by which we can achieve this solution?

CodePudding user response:

second query is overwriting the first query

To avoid that you can pass data frame column value in .otherwise So it will replace the value you want and keep the previous value as it is.

- My sample Dataframe

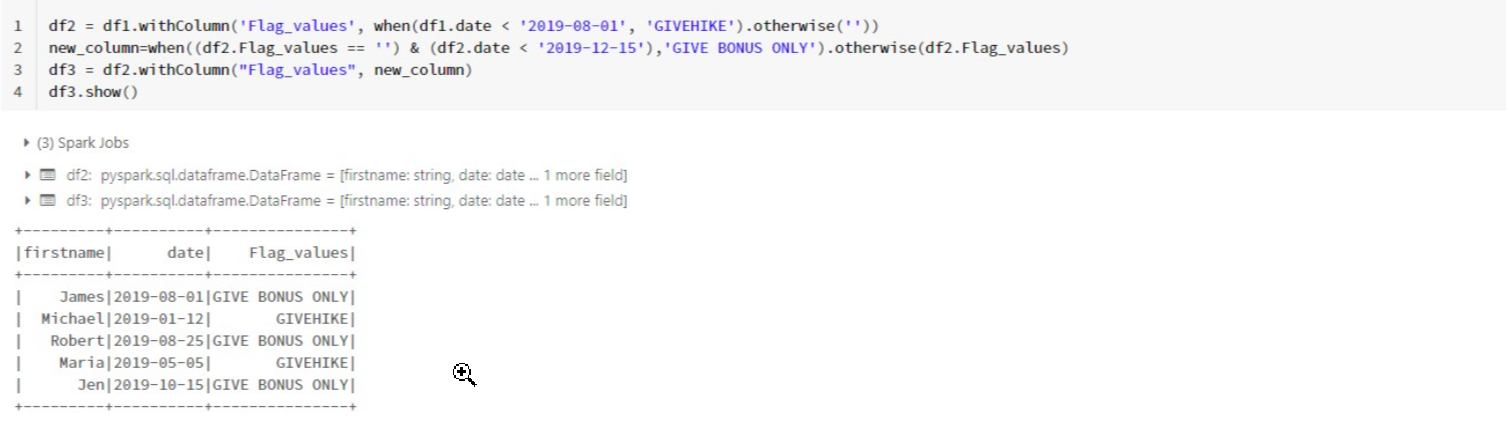

- Query I used

df2 = df1.withColumn('Flag_values', when(df1.date < '2019-08-01', 'GIVEHIKE').otherwise(''))

new_column=when((df2.Flag_values == '') & (df2.date < '2019-12-15'),'GIVE BONUS ONLY').otherwise(df2.Flag_values)

df3 = df2.withColumn("Flag_values", new_column)

df3.show()