I want to create a metadata variable based on my input dataset's columns using dictionaries. Specifically, I want the metadata variable to have exactly this structure and values:

metadata = {

'fields': {

'id': {'type': 'categorical'},

'name': {'type': 'categorical'},

'category_id': {'type': 'categorical'},

'category_label': {'type': 'categorical'},

'Compatible operating systems.2507': {'type': 'categorical'},

'Compatible operating systems.2507.unit': {'type': 'categorical'},

'Dimensions (WxDxH).49': {'type': 'categorical'},

'Dimensions (WxDxH).49.unit': {'type': 'categorical'},

'Internal mass storage.588': {'type': 'categorical'},

'Internal mass storage.588.unit': {'type': 'categorical'},

'Processor cores.6089': {'type': 'categorical'},

'Processor cores.6089.unit': {'type': 'categorical'},

'Built-in processor.7787': {'type': 'boolean'},

'Built-in processor.7787.unit': {'type': 'categorical'}

},

'constraints': [],

'primary_key': 'id'

}

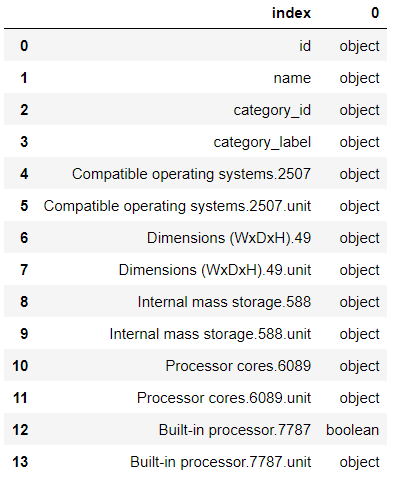

My input dataframe to create the above metadata structure looks like below:

So far I have attempted the following code to do this:

metadata = {}

metadata['fields'] = {}

for col_name in table_dtypes['index']:

metadata['fields'][col_name] = {}

The code above outputs this:

As you can see, my values are {}. I want to fill them in with 'type' as the key and the associated value will be column "0" in my screen shot dataframe (i.e. object, boolean). I want this to be an automated looping process because in the future I will have 'float', 'integer' and other data types in addition to 'categorical'.

How do I go about doing this?

CodePudding user response:

You can convert your 0 column into an array of dicts, using replace to replace 'object' with 'categorical' (and any others you might need)) and then applying a function to return a dict with type as the key and that value. You can then zip that to the index column and convert to a dict of fields. Finally you can add that to an output dict with the constraints and primary_id properties.

types = df['0'].replace({ 'object' : 'categorical' }).apply(lambda x:{ 'type' : x })

fields = dict(zip(df['index'], types))

metadata = { 'fields' : fields,

'constraints': [],

'primary_key': df.loc[0, 'index']

}

Note I've assumed the primary_key is stored in the first row of index, if that is not the case, you can adjust as required.

For this sample input:

index 0

0 id object

1 name object

2 category_id object

3 category_label object

4 Built-in processor.7787 boolean

5 A float float

6 An integer int

You will get this output (dumped as JSON for readability):

{

"fields": {

"id": {

"type": "categorical"

},

"name": {

"type": "categorical"

},

"category_id": {

"type": "categorical"

},

"category_label": {

"type": "categorical"

},

"Built-in processor.7787": {

"type": "boolean"

},

"A float": {

"type": "float"

},

"An integer": {

"type": "int"

}

},

"constraints": [],

"primary_key": "id"

}