I created a dataframe that contains only duplicated rows using the duplicated() method. my question is probably quite easy. i'd like to add a count column to the right and for each row count how many duplicated appearances of it are inside the duplicated df. I thought about creating a groupby of each column but that didn't really work.

something like df.groupby([*all columns*]).count()

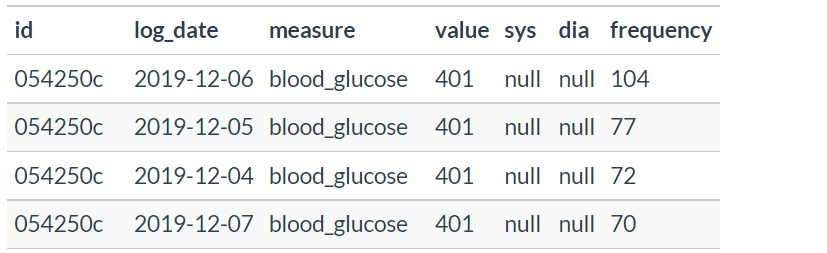

this is how the df looks like :

thanks!

edit :

question was answered and solved by seabean, solution could go in either of these methods :

newdf = healthdf[healthdf.duplicated(keep = False)].copy()

df_count = newdf.value_counts(dropna =

False).reset_index(name='count')

df_out = newdf.merge(df_count, how='left')

df_out.drop_duplicates(keep = "first").sort_values("count", ascending

= False)

or

col = newdf.columns.to_list()

newdf.groupby(col,dropna=False).size().sort_values(ascending = False)

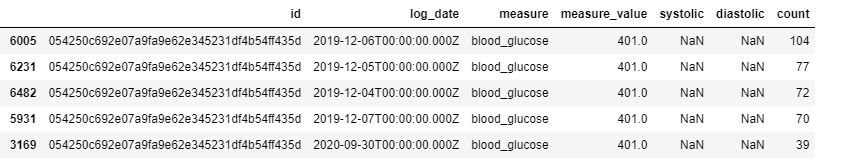

here is the output :

CodePudding user response:

You can try .groupby() .transform() size:

df['count'] = df.groupby(df.columns.tolist(), dropna=False)[df.columns[0]].transform('size')

As your data contains NaN, we have to use parameter dropna=False in .groupby() in order to get a complete list of count including rows with NaN values. Otherwise, rows with NaN values will be skipped and excluded from count.

Demo

Data Input

print(df)

Col1 Col2 Col3 Col4

0 ABC 123 XYZ NaN # group #1 of 3

1 ABC 123 XYZ NaN # group #1 of 3

2 ABC 678 PQR def # group #2 of 1

3 MNO 890 EFG abc # group #3 of 4

4 MNO 890 EFG abc # group #3 of 4

5 CDE 234 567 xyz # group #4 of 2

6 ABC 123 XYZ NaN # group #1 of 3

7 CDE 234 567 xyz # group #4 of 2

8 MNO 890 EFG abc # group #3 of 4

9 MNO 890 EFG abc # group #3 of 4

Output

print(df)

Col1 Col2 Col3 Col4 count

0 ABC 123 XYZ NaN 3

1 ABC 123 XYZ NaN 3

2 ABC 678 PQR def 1

3 MNO 890 EFG abc 4

4 MNO 890 EFG abc 4

5 CDE 234 567 xyz 2

6 ABC 123 XYZ NaN 3

7 CDE 234 567 xyz 2

8 MNO 890 EFG abc 4

9 MNO 890 EFG abc 4

Edit

If you get memory problem by using the .groupby() solution, we can go for using the .value_counts() solution by getting the count by .value_counts(), followed by merging with the original dataframe by .merge(), as follows:

df_count = df.value_counts(dropna=False).reset_index(name='count')

df_out = df.merge(df_count, how='left') # left join to keep the original row sequence order of df

Result:

print(df_count)

Col1 Col2 Col3 Col4 count

0 MNO 890 EFG abc 4

1 ABC 123 XYZ NaN 3

2 CDE 234 567 xyz 2

3 ABC 678 PQR def 1

print(df_out)

Col1 Col2 Col3 Col4 count

0 ABC 123 XYZ NaN 3

1 ABC 123 XYZ NaN 3

2 ABC 678 PQR def 1

3 MNO 890 EFG abc 4

4 MNO 890 EFG abc 4

5 CDE 234 567 xyz 2

6 ABC 123 XYZ NaN 3

7 CDE 234 567 xyz 2

8 MNO 890 EFG abc 4

9 MNO 890 EFG abc 4