I'm attempting to implement a KL Divergence on two imaginary dice from scratch and I'm not sure if it is correct.

By 'how do I graph all distributions' I'm wondering if the KL Divergence just a number or is it also a distribution?

Imports

%matplotlib inline

import random

import numpy as np

import matplotlib. pyplot as plt

I create empty arrays to store about 5500 rolls of two different imaginary dice.

Die p rolls a 1-6 with equal probability.

Die q rolls 1-4 90% of the time and rolls a 5 or 6 just 10% of the time. (Of course a working die is expected to roll 1-6 one sixth of the time.

q = []

p = []

for z in range(5000):

if random.random() < 0.1:

q.append(random.randint(5, 6))

p.append(random.randint(1, 6))

q.append(random.randint(1, 4))

p.append(random.randint(1, 6))

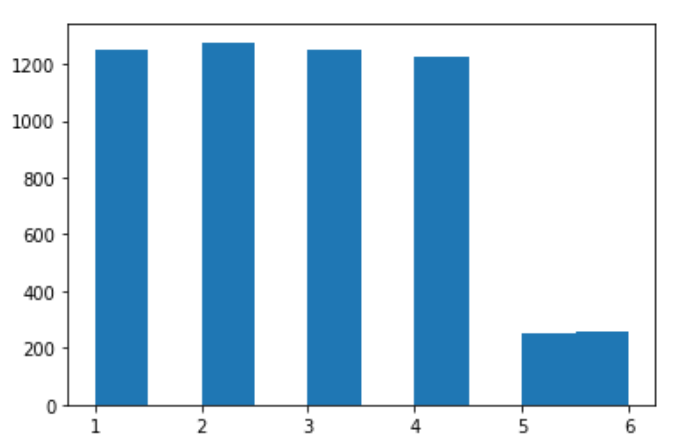

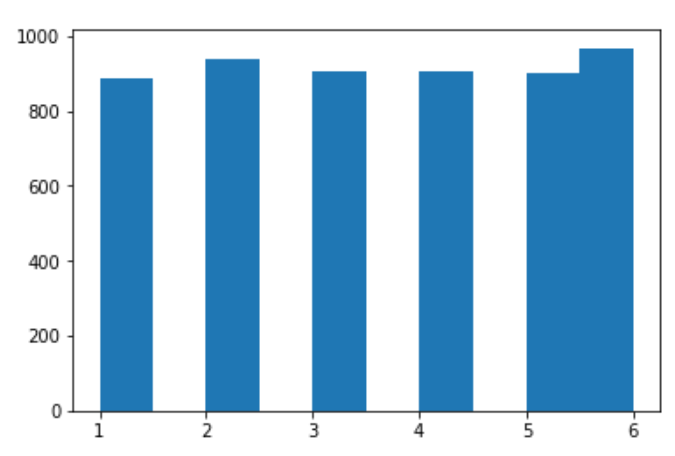

An example of the distributions of die p, and die q.

Here I take random samples of the dice arrays and integrate the log of the p selection divided by the q selection. This is where I think I am out of my depth because I didn't know if I should do a summation or integration. When I did a summation I got a huge number. Below I think I might have the integration correct, but I don't know if I need it because I'm dealing with a finite set of dice rolls.

For the dx I used 12 divided by the length of both arrays. 12 is the number of possible dice numbers and the total length of both arrays is the total number of every reading from a single die tossed.

kl_num = 0

both_len = len(q) len(p)

for i in range(both_len):

q_selection = q[random.randint(0, len(q)-1)]

p_selection = p[random.randint(0, len(p)-1)]

kl_num = p_selection * np.log(p_selection / q_selection) * 12 / both_len

$D_{KL} (P || Q) = \int P(x) log(P(x)/Q(x)) dx $

The number stored in kl_num is usually about 22. This brings me to the part B of my question, about how this could be graphed or how I might know if this is correct.

Thank you.

CodePudding user response:

For calculating the KL of two distribution, you need to have the probablity at each point. I think that in you KL formula, there is a mistake (please check that again). For example, for your provided histogram, if we consider the histogram which you provide as a distribution ( you should definitely consider their distribution). The KL of the two distribution which you provide is approximately calculated as :

1000* log(1200/900) 1300* log(1300/950) 1200*log(1200/900) 1100*log(1100/900) 250*log(250/900) 260*log(260/950)

CodePudding user response:

The probabilities in the KL Divergence were not actual probabilities.

This contains a loop that calculates the probabilities for both distributions and they are called in the KL Divergence equation.

%matplotlib inline

import random

import numpy as np

import matplotlib. pyplot as plt

q = []

p = []

for z in range(5000):

if random.random() < 0.1:

q.append(random.randint(5, 6))

p.append(random.randint(1, 6))

q.append(random.randint(1, 4))

p.append(random.randint(1, 6))

def probs(array):

fo = []

count = 0

for i in range(6):

sh = array.count(i 1) / np.sum(array)

fo.append(sh)

count = sh

factor = 1 / count

return [factor * z for z in fo]

KL = 0

for x in range(6):

KL = probs(p)[x] * np.log(probs(p)[x] / probs(q)[x])

print(KL)