The original query :

with Tr As (

SELECT

DocDtls.Warehouse,

Transactions.Code,

DocDtls.zDate,

Transactions.ID,

Transactions.QtyIn,

Transactions.QtyOut,

Transactions.BalanceAfter

FROM

DocDtls

INNER JOIN Transactions ON DocDtls.[PrimDocNum] = Transactions.[DocNum]

),

formatted_tr as (

select

ID,

Code,

QtyIn,

QtyOut,

BalanceAfter,

LAG(BalanceAfter, 1, 0) Over (

partition by Warehouse,

Code

order by

Code,zDate,ID

) Prev_BlncAfter

from

Tr

)

select ID,Code,QtyIn,QtyOut,BalanceAfter

,SUM(Prev_BlncAfter QtyIn)-QtyOut As NewBlncAfter

from formatted_tr

group by ID,Code,QtyIn,QtyOut,BalanceAfter;

;

Explaining the idea :

Let's say that the query returns all transactions of Item X and there are 10 rows as result , I need to loop through all 10 rows and SET BalanceAfter( for the first transaction QtyIn-QtyOut , Any other transaction (PreviousBalanceAfter QtyIn)-QtyOut) And so on .

What I've tried :

I tried to put the query result in a Datatable then filter it one more time using DataView to get the NewBlncAfter of the DataGridView current row ID only so the Dataview only have one row and save it in a variable - Working well so far - when I try to loop through all rows in my DataGridview and update BalanceAfter I got :

Must Declare Scalar Variable @Newblnc

You can find the whole code in here :

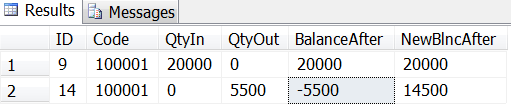

I used the old query to compare the results , The BalanceAfter should equal NewBlncAfter in every row .

Edit #2 : Using SUM instead of LAG causing wrong calculation and if I used the query more than once the result in BalanceAfter is multiplied

ID Code QtyIn QtyOut BalanceAfter

9 100001 20000 0 20000

14 100001 0 6000 40000

21 100001 3500 0 60000

24 100001 0 3000 80000

The main idea and the desired result for example :

ID Code QtyIn QtyOut BalanceAfter

9 100001 20000 0 20000

14 100001 0 6000 14000

21 100001 3500 0 17500

24 100001 0 3000 14500

The formula is :

for the first transaction QtyIn-QtyOut , Any other transaction (PreviousBalanceAfter QtyIn)-QtyOut And so on .

CodePudding user response:

Firstly, I see no reason at all to pull all this data into C# only to then update row-by-row (which is highly inefficient). You can do this in a single batch update.

It's not quite clear what result you want, but it seems you want to just assign a running SUM calculation, rather than LAG.

Furthermore:

- The second CTE is unnecessary and can be collapsed into the first.

- Partitioning and ordering by the same column in an

OVERmakes no sense. - The final

GROUP BYalso makes no sense and appears unnecessary, as you are grouping by a primary key.

WITH Tr AS (

SELECT

d.Warehouse,

t.Code,

d.zDate,

t.ID,

t.QtyIn,

t.QtyOut,

t.BalanceAfter,

SUM(t.QtyIn - t.QtyOut) OVER (

PARTITION BY d.Warehouse, t.Code

ORDER BY d.zDate, t.ID

ROWS UNBOUNDED PRECEDING

) BlncAfter

FROM

DocDtls d

INNER JOIN Transactions t ON d.PrimDocNum = t.DocNum

WHERE t.Code = @VariableCode

)

UPDATE Tr

SET BalanceAfter = BlncAfter;

One final point: why bother storing this information in a new column at all? Why not just calculate it when you need to, using SUM OVER?