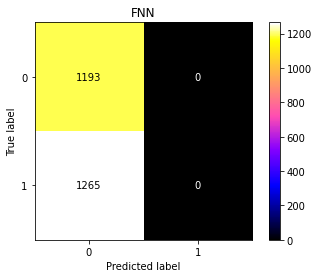

I'm building two neural network models (binary classification) for my finals. I'm using a confusion matrix for evaluation, but it always shows only one predicted label.

Can anyone tell me where I made a mistake? Here's the code:

# Start neural network
network = Sequential()

# Add fully connected layer with a ReLU activation function
network.add(Dense(units=2, activation='relu', input_shape=(2,)))

# Add fully connected layer with a ReLU activation function
network.add(Dense(units=4, activation='relu'))

# Add fully connected layer with a sigmoid activation function
network.add(Dense(units=1, activation='sigmoid'))

# Compile neural network
network.compile(loss='binary_crossentropy',  # Cross-entropy
                optimizer='rmsprop',  # Root Mean Square Propagation
                metrics=['accuracy'])  # Accuracy performance metric

# Train neural network
history = network.fit(X_train,  # Features
                      y_train,  # Target vector
                      epochs=3,  # Number of epochs
                      verbose=1,  # Print description after each epoch
                      batch_size=10,  # Number of observations per batch
                      validation_data=(X_val, y_val))  # Data for evaluation

y_pred = network.predict(X_test)
# y_test = y_test.astype(int).tolist()
y_pred = np.argmax(y_pred, axis=1).tolist()

cm = confusion_matrix(y_test, y_pred)
print(cm)

I got 93% validation accuracy, but the confusion matrix gave me this output:

CodePudding user response:

Your network has a single output with a sigmoid activation, so each prediction is one probability between 0 and 1. To get a class label you want to round that value (threshold it at 0.5), not take an argmax.

y_pred = np.round(y_pred).tolist()

If you print your y_pred, you will notice that it is an N x 1 matrix. An argmax over axis=1 therefore returns 0 for every row, because column 0 is the only column there is, and the confusion matrix ends up with every prediction in the class 0 column.
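To see the difference without training anything, here is a minimal NumPy-only sketch. The `probs` array is made-up data standing in for `network.predict(X_test)`, `y_true` is a made-up target vector, and the `confusion` helper is a hypothetical stand-in for `confusion_matrix` (rows are true labels, columns are predicted labels), so treat it as an illustration rather than your exact pipeline.

```python
import numpy as np

# Made-up sigmoid outputs standing in for network.predict(X_test); shape is (4, 1).
probs = np.array([[0.91], [0.12], [0.67], [0.03]])
# Made-up true labels for the same four samples.
y_true = np.array([1, 0, 1, 0])

# argmax over axis=1 returns the index of the largest column; only column 0 exists.
argmax_labels = np.argmax(probs, axis=1)

# Thresholding at 0.5 gives a 0/1 label per row; ravel() flattens (4, 1) to (4,).
threshold_labels = (probs > 0.5).astype(int).ravel()


def confusion(y_true, y_pred):
    """Hypothetical 2x2 confusion matrix: rows = true label, columns = predicted label."""
    cm = np.zeros((2, 2), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # count each (true, predicted) pair
    return cm


print(probs.shape)                          # (4, 1)
print(argmax_labels.tolist())               # [0, 0, 0, 0]
print(threshold_labels.tolist())            # [1, 0, 1, 0]
print(confusion(y_true, argmax_labels))     # everything lands in the class 0 column
print(confusion(y_true, threshold_labels))  # predictions spread across both columns
```

With argmax, the second column of the matrix is all zeros no matter how well the model was trained, which matches the "one label" symptom. Flattening with `ravel()` also keeps the predictions one-dimensional, like `y_test`.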