I have created a deployment with a service and set it to run 5 replicas. When I call the service, it always uses the same replica (pod). Is there a way to use round robin instead, so that all pods are used?

CodePudding user response:

When you access your pods by service name, you get an IP address for one of the pods and use it in subsequent requests.

To solve this problem, you can create an Ingress and call its URL instead of the service name. In that case an IP address is resolved on each request, and the load is distributed between the pods.

CodePudding user response:

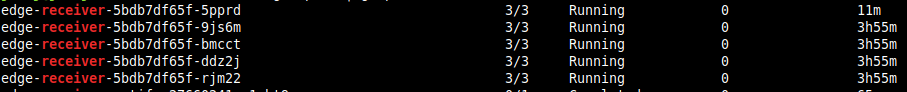

By default, Kubernetes spreads traffic roughly evenly across all of the service's endpoints, so every pod should receive a share of the requests.
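One way to check whether requests really reach several pods is to tally which pod answers each request. The following is only a sketch: it assumes the application returns its own pod name in the response body, and `my-service` and the `/hostname` path are hypothetical placeholders, not names from the question.

```shell
# tally_pods: read one pod name per line on stdin and print a count per pod,
# most frequent first.
tally_pods() {
  sort | uniq -c | sort -rn
}

# Hypothetical usage from a pod inside the cluster. Each curl invocation opens
# a new connection, so each request can be balanced independently.
# for i in $(seq 1 20); do curl -s http://my-service/hostname; echo; done | tally_pods
```

If a single long-lived (keep-alive) connection is reused for all requests, the same pod will likely keep answering, because kube-proxy balances per connection rather than per request.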

However, if you want to check or tweak the traffic distribution algorithm, you can follow these steps:

First, check the mode of kube-proxy (it should be either iptables or ipvs):

kubectl get cm -n kube-system kube-proxy -o jsonpath='{.data.config\.conf}{"\n"}' |grep mode
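The `grep mode` filter prints the raw line, which may be an empty string in quotes. As a rough sketch, the hypothetical helper below (`proxy_mode` is not part of kubectl) reads the same `config.conf` text on stdin and prints the effective mode, treating an empty or missing value as iptables on the assumption that iptables is the default on Linux nodes.

```shell
# proxy_mode: read a kube-proxy config.conf on stdin and print the proxy mode.
# An empty or missing top-level `mode:` value is reported as "iptables"
# (assumed default on Linux).
proxy_mode() {
  awk '
    /^mode:/ {
      value = $2
      gsub(/"/, "", value)   # strip the quotes from values such as ""
    }
    END { print (value == "" ? "iptables" : value) }
  '
}
```

It could be fed from the command above, for example `kubectl get cm -n kube-system kube-proxy -o jsonpath='{.data.config\.conf}' | proxy_mode`.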

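If the mode is blank or iptables, kube-proxy picks a backend at random for each new connection, which spreads traffic about evenly across the pods (this is not strict round-robin, but the effect is similar). However, if the mode is set to ipvs, things get more interesting: you have to check the scheduler configured under ipvs. E.g.: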

kubectl get cm -n kube-system kube-proxy -o jsonpath='{.data.config\.conf}{"\n"}' |grep -ozP '(?s)ipvs:.*?(?=kind:)'

ipvs:
  excludeCIDRs: []
  minSyncPeriod: 0s
  scheduler: rr   # <---- rr here means round-robin
  strictARP: false
  syncPeriod: 30s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
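The grep-based extraction above relies on GNU grep options (`-z`, `-P`), which may not be available everywhere. As an alternative sketch, the hypothetical helper below (`ipvs_scheduler` is an illustrative name) reads `config.conf` on stdin and prints only the scheduler from the `ipvs:` section. It assumes an empty value means `rr`, which is believed to be the kube-proxy default.

```shell
# ipvs_scheduler: read a kube-proxy config.conf on stdin and print the
# scheduler configured in the top-level `ipvs:` section.
# An empty or missing value is reported as "rr" (assumed default).
ipvs_scheduler() {
  awk '
    # Any non-indented line starts a new top-level key; remember whether
    # that key is the ipvs section.
    /^[^ ]/ { in_ipvs = ($1 == "ipvs:") }
    in_ipvs && $1 == "scheduler:" {
      value = $2
      gsub(/"/, "", value)
    }
    END { print (value == "" ? "rr" : value) }
  '
}
```

Usage would mirror the earlier commands: `kubectl get cm -n kube-system kube-proxy -o jsonpath='{.data.config\.conf}' | ipvs_scheduler`.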

There are other traffic distribution algorithms, like:

rr: round-robin. Distributes jobs equally amongst the available real servers.

lc: least connection (smallest number of open connections). Assigns more jobs to real servers with fewer active jobs.

dh: destination hashing. Assigns jobs to servers by looking up a statically assigned hash table by their destination IP addresses.

sh: source hashing. Assigns jobs to servers by looking up a statically assigned hash table by their source IP addresses.

sed: shortest expected delay. Assigns an incoming job to the server with the shortest expected delay. The expected delay that the job will experience is (Ci + 1) / Ui if sent to the i-th server, where Ci is the number of jobs on the i-th server and Ui is the fixed service rate (weight) of the i-th server.

nq: never queue. Assigns an incoming job to an idle server if there is one, instead of waiting for a fast one; if all the servers are busy, it falls back to the Shortest Expected Delay policy to assign the job.
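To make the shortest expected delay rule concrete, here is a small worked sketch of the arithmetic (Ci + 1) / Ui. It only illustrates the formula; the real IPVS scheduler runs in the kernel, and the helper name `sed_pick` and the input format are made up for this example.

```shell
# sed_pick: read "name active_jobs weight" lines on stdin and print the name
# of the server with the smallest expected delay (Ci + 1) / Ui.
# Servers with a weight of 0 are skipped to avoid dividing by zero.
sed_pick() {
  awk '
    $3 > 0 {
      delay = ($2 + 1) / $3
      if (!found || delay < best) { best = delay; name = $1; found = 1 }
    }
    END { if (found) print name }
  '
}
```

For instance, with server `a` holding 2 jobs at weight 1 (delay 3) and server `b` holding 5 jobs at weight 4 (delay 1.5), `printf 'a 2 1\nb 5 4\n' | sed_pick` selects `b`, even though `b` has more active jobs.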

If you decide to try a different scheduler, you have to edit the kube-proxy ConfigMap:

kubectl edit cm -n kube-system kube-proxy

Then restart the kube-proxy DaemonSet so that the change takes effect:

kubectl rollout restart ds kube-proxy -n kube-system

You may find some interesting reading about load balancing for services here and here.