I would like to maximize the power of the Spark cluster in an MLRun solution for my calculation. I use the following session settings for the Spark cluster (it runs under a Kubernetes cluster):

    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName('Test-Spark') \
        .config("spark.dynamicAllocation.enabled", True) \
        .config("spark.shuffle.service.enabled", True) \
        .config("spark.executor.memory", "12g") \
        .config("spark.executor.cores", "4") \
        .config("spark.dynamicAllocation.minExecutors", 3) \
        .config("spark.dynamicAllocation.maxExecutors", 6) \
        .config("spark.dynamicAllocation.initialExecutors", 5) \
        .getOrCreate()

The issue is that I cannot utilize all of that power: in many cases only 1, 2, or 3 executors are used, each with a small number of cores.

Do you know how to get more resources/performance out of the Spark session? (It seems that dynamic allocation does not work correctly in MLRun & K8s & Spark.)

Answer:

I can fully utilize the Spark cluster (environment: MLRun & K8s & Spark) when I use static parameters in the Spark session (a Spark session with the 'dynamicAllocation' parameters did not work for me). Below are a few samples (note: the K8s infrastructure must have enough capacity, e.g. 3 executors and 12 cores in total):

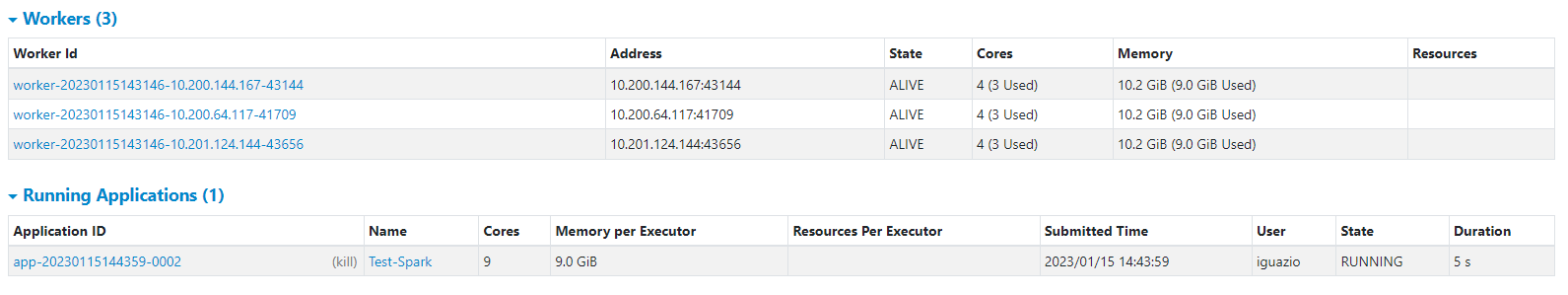

Configuration 3x executors, total 9 cores:

    spark = SparkSession.builder.appName('Test-Spark') \
        .config("spark.executor.memory", "9g") \
        .config("spark.executor.cores", "3") \
        .config('spark.cores.max', 9) \
        .getOrCreate()

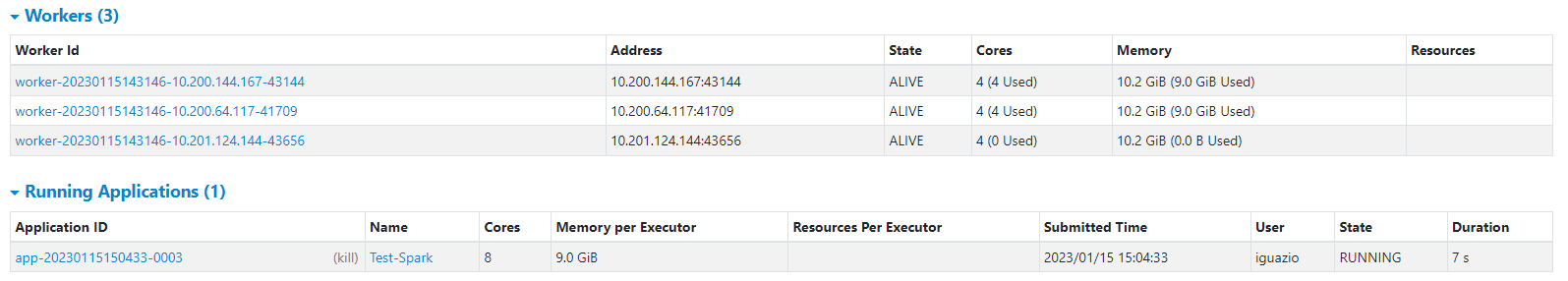

Configuration 2x executors, total 8 cores:

    spark = SparkSession.builder.appName('Test-Spark') \
        .config("spark.executor.memory", "9g") \
        .config("spark.executor.cores", "4") \
        .config('spark.cores.max', 8) \
        .getOrCreate()
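In both samples the executor count follows from dividing 'spark.cores.max' by 'spark.executor.cores' (9 / 3 = 3 and 8 / 4 = 2), assuming the cluster has enough free cores and memory. Here is a minimal sketch of that arithmetic; `static_spark_conf` and `build_session` are hypothetical helper names of my own, not part of MLRun or Spark:

```python
def static_spark_conf(executors, cores_per_executor, memory_per_executor):
    """Return static Spark settings for a fixed number of executors.

    Assumption: the cluster manager honours spark.cores.max as the cap on
    total cores, so executors = spark.cores.max / spark.executor.cores.
    """
    if executors < 1 or cores_per_executor < 1:
        raise ValueError("executors and cores_per_executor must be >= 1")
    return {
        "spark.executor.memory": memory_per_executor,
        "spark.executor.cores": str(cores_per_executor),
        "spark.cores.max": str(executors * cores_per_executor),
    }


def build_session(app_name, conf):
    """Create a SparkSession from a dict of settings (hypothetical helper)."""
    # Imported lazily so the arithmetic above can be checked without PySpark.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

For example, `build_session('Test-Spark', static_spark_conf(3, 3, "9g"))` should request the same settings as the first sample above.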

Alternatively, it is possible to use the Spark operator; for details, see the link.
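If dynamic allocation is still preferred, one thing that may be worth trying (I have not verified it in MLRun) is Spark's `spark.dynamicAllocation.shuffleTracking.enabled` setting, available since Spark 3.0. The Spark documentation describes it as a way to run dynamic allocation without an external shuffle service, which is usually not available on Kubernetes. A sketch of such a configuration, with `dynamic_allocation_conf` as a hypothetical helper name:

```python
def dynamic_allocation_conf(min_executors, max_executors, initial_executors):
    """Return dynamic-allocation settings that rely on shuffle tracking.

    Assumption: Spark 3.0+ and no external shuffle service, so
    spark.shuffle.service.enabled is deliberately left out.
    """
    if not (min_executors <= initial_executors <= max_executors):
        raise ValueError("expected min <= initial <= max executors")
    return {
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.shuffleTracking.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
        "spark.dynamicAllocation.initialExecutors": str(initial_executors),
    }


# Same executor bounds as in the question: min 3, max 6, initial 5.
conf = dynamic_allocation_conf(3, 6, 5)
```

Each key/value pair can be passed to `.config(key, value)` on the session builder, together with the executor memory and cores settings.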