I have an image in which I want to detect text, and I am using EasyOCR to do it. For each detection, the OCR returns a bounding box, the recognized text, and a probability, as shown in the output image. I want to discard any detected text whose probability is below 0.4. How can I do that?

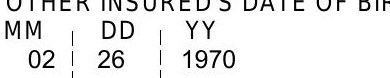

Image1

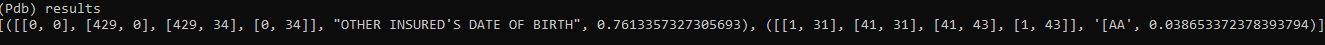

Image 2

The results list contains the probability of each detection, including the first text and the second text 'AA', as shown in the figure. I want to remove the detections with the lowest probability.

Output of image2

Requirements

pip install pytesseract

pip install easyocr

Run the code using python main.py -i image1.jpg

    # import the necessary packages
    from pytesseract import Output
    import pytesseract
    import argparse
    import cv2
    from matplotlib import pyplot as plt
    import numpy as np
    import os
    import easyocr
    from PIL import ImageDraw, Image

    def remove_lines(image):
        result = image.copy()
        gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        # combine the two threshold flags (inverse binary + Otsu)
        thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

        # Remove horizontal lines
        horizontal_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (40,1))
        remove_horizontal = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, horizontal_kernel, iterations=2)
        cnts = cv2.findContours(remove_horizontal, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        cnts = cnts[0] if len(cnts) == 2 else cnts[1]
        for c in cnts:
            cv2.drawContours(result, [c], -1, (255,255,255), 5)

        # Remove vertical lines
        vertical_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (1,40))
        remove_vertical = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, vertical_kernel, iterations=2)
        cnts = cv2.findContours(remove_vertical, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        cnts = cnts[0] if len(cnts) == 2 else cnts[1]
        for c in cnts:
            cv2.drawContours(result, [c], -1, (255,255,255), 5)

        plt.imshow(result)
        plt.show()
        return result

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-i", "--image", required=True,
        help="path to input image to be OCR'd")
    # the confidence is a probability in [0, 1], so parse it as a float
    ap.add_argument("-c", "--min-conf", type=float, default=0.0,
        help="minimum confidence value to filter weak text detection")
    args = vars(ap.parse_args())

    reader = easyocr.Reader(['ch_sim','en']) # need to run only once to load model into memory

    # load the input image (OpenCV reads it in BGR channel order)
    # and use EasyOCR to detect and recognize the text in it
    image = cv2.imread(args["image"])
    # image = remove_lines(image)
    results = reader.readtext(image)
    print(results)

Answer:

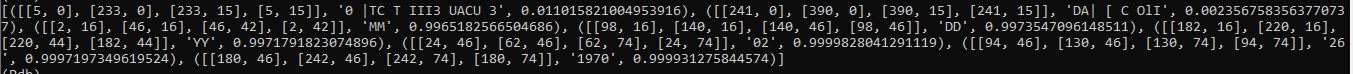

    results = [([[5, 0], [233, 0], [233, 15], [5, 15]], ' Ꮎ ]TC T III3 UᎪCU 3', 0.011015821004953916),
               ([[241, 0], [390, 0], [390, 15], [241, 15]], 'ᎠA[ [ C 0lᎢ', 0.0023567583563770737),
               ([[2, 16], [46, 16], [46, 42], [2, 42]], 'MM', 0.9965182566504686),
               ([[98, 16], [140, 16], [140, 46], [98, 46]], 'D', 0.9973547096148511),
               ([[182, 16], [220, 16], [220, 44], [182, 44]], 'Y', 0.9971791823074896),
               ([[24, 46], [62, 46], [62, 74], [24, 74]], '62', 0.9999828941291119),
               ([[94, 46], [130, 46], [130, 74], [94, 74]], '26', 0.9997197349619524),
               ([[180, 46], [242, 46], [242, 74], [180, 74]], '1970', 0.999931275844574)]

    # collect the detections whose confidence (third element) is too low
    low_precision = []
    for text in results:
        if text[2] < 0.5:  # confidence threshold; use 0.4 for the cutoff asked about
            low_precision.append(text)

    # remove them in a second pass, so the list is not modified while iterating over it
    for i in low_precision:
        results.remove(i)  # remove low-confidence detection

    print(results)

Result:

    [([[2, 16], [46, 16], [46, 42], [2, 42]], 'MM', 0.9965182566504686),
     ([[98, 16], [140, 16], [140, 46], [98, 46]], 'D', 0.9973547096148511),
     ([[182, 16], [220, 16], [220, 44], [182, 44]], 'Y', 0.9971791823074896),
     ([[24, 46], [62, 46], [62, 74], [24, 74]], '62', 0.9999828941291119),
     ([[94, 46], [130, 46], [130, 74], [94, 74]], '26', 0.9997197349619524),
     ([[180, 46], [242, 46], [242, 74], [180, 74]], '1970', 0.999931275844574)]
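
The same filtering can also be done in one pass with a list comprehension. This is a minimal sketch, not part of the original code. It assumes each detection is a (bounding box, text, confidence) tuple, as in the output above. The helper name `filter_by_confidence` and its `min_conf` parameter are hypothetical, and the sample entries are copied from the results shown earlier.

```python
def filter_by_confidence(results, min_conf=0.4):
    """Return only the detections whose confidence is at least min_conf.

    Assumes each item is a (bbox, text, confidence) tuple, as in the
    output shown above. A new list is built; the input is left untouched.
    """
    return [(bbox, text, conf) for bbox, text, conf in results if conf >= min_conf]


# A few detections copied from the results above (one weak, two strong).
sample = [
    ([[241, 0], [390, 0], [390, 15], [241, 15]], 'ᎠA[ [ C 0lᎢ', 0.0023567583563770737),
    ([[2, 16], [46, 16], [46, 42], [2, 42]], 'MM', 0.9965182566504686),
    ([[24, 46], [62, 46], [62, 74], [24, 74]], '62', 0.9999828941291119),
]

kept = filter_by_confidence(sample, min_conf=0.4)
for bbox, text, conf in kept:
    print(text, round(conf, 4))
```

In the script from the question, the threshold could be taken from `args["min_conf"]` instead of being hard-coded, provided that argument is parsed as a float rather than an int.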