I'm trying to implement a faster version of the following code fragment:

Eigen::VectorXd dTX = (( (XPSF.array() - x0).square() (ZPSF.array() - z0).square() ).sqrt() txShift)*fs/c t0*fs;

Eigen::VectorXd Zsq = ZPSF.array().square();

Eigen::MatrixXd idxt(XPSF.size(),nc);

for (int i = 0; i < nc; i ) {

idxt.col(i) = ((XPSF.array() - xe(i)).square() Zsq.array()).sqrt()*fs/c dTX.array();

idxt.col(i) = (abs(XPSF.array()-xe(i)) <= ZPSF.array()*0.5/fnumber).select(idxt.col(i),-1);

}

The sample array sizes I'm working with right now are:

XPSF: Column Vector of 591*192 coefficients (113,472 total values in the column vector)

ZPSF: Same size as XPSF

xe: RowVector of 192 coefficients

idxt: Matrix of 113,472x192 size

Current runs with gcc and -msse2 and -o3 optimization yield an average time of ~0.08 seconds for the first line of the loop and ~0.03 seconds for the second line of the loop. I know that runtimes are platform dependent, but I believe that this still can be much faster. A commercial software performs the operations I'm trying to do here in ~two orders of magnitude less time. Also, I suspect my code is a bit amateurish right now!

I've tried reading over Eigen documentation to understand how vectorization works, where it is implemented and how much of this code might be "implicitly" parallelized by Eigen, but I've struggled to keep track of the details. I'm also a bit new to C in general, but I've seen the documentation and other resources regarding std::thread and have tried to combine it with this code, but without much success.

Any advice would be appreciated.

Update:

Update 2

I would upvote Soleil's answer because it contains helpful information if I had the reputation score for it. However, I should clarify that I would like to first figure out what optimizations I can do without a GPU. I'm convinced (albeit without OpenMP) Eigen's inherent multithreading and vectorization won't speed it up any further (unless there are unnecessary temporaries being generated). How could I use something like std::thread to explicitly parellelize this? I'm struggling to combine both std::thread and Eigen to this end.

CodePudding user response:

OpenMP

If your CPU has enough many cores and threads, usually a simple and quick first step is to invoke OpenMP by adding the pragma:

#pragma omp parallel for

for (int i = 0; i < nc; i )

and compile with /openmp (cl) or -fopenmp (gcc) or just -ftree-parallelize-loops with gcc in order to auto unroll the loops.

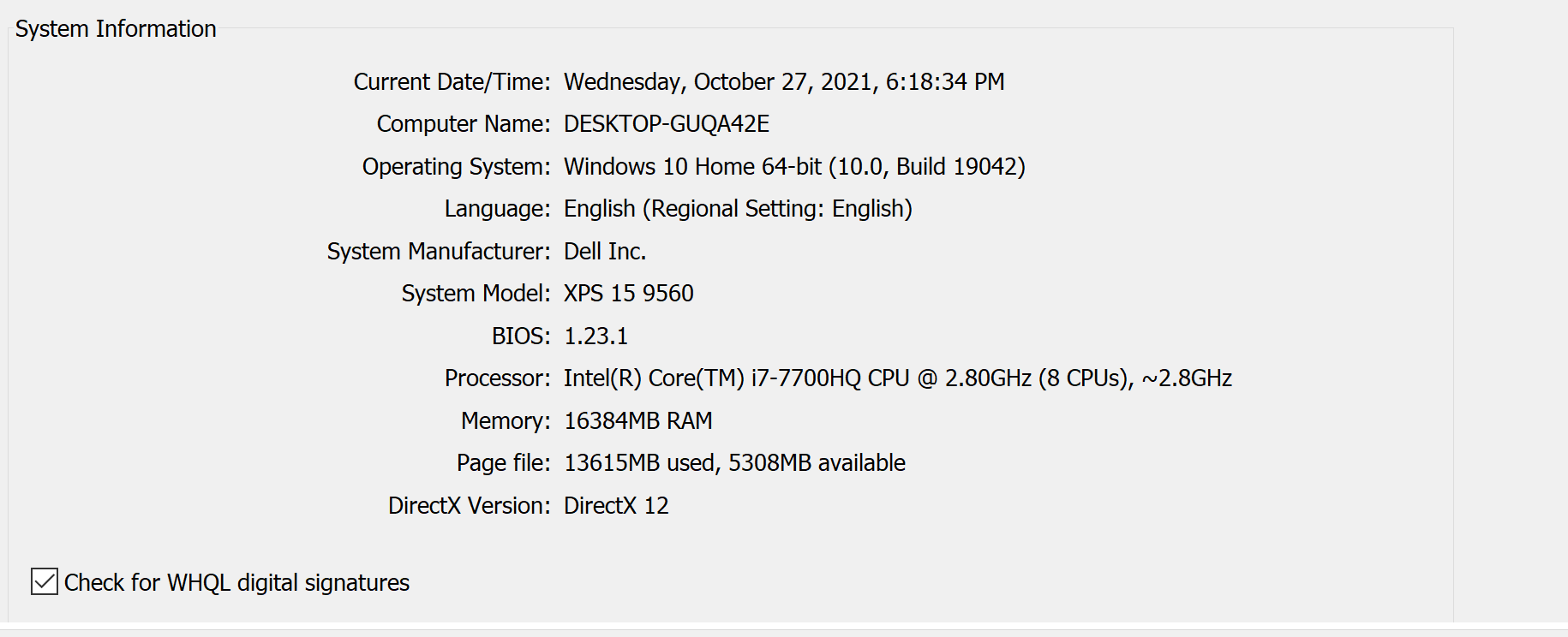

This will do a map reduce and the map will occur over the number of parallel threads your CPU can handle (8 threads with the 7700HQ).

In general you also can set a clause num_threads(n) where n is the desired number of threads:

#pragma omp parallel num_threads(8)

Where I used 8 since the 7700HQ can handle 8 concurrent threads.

TBB

You also can unroll your loop with TBB:

#pragma unroll

for (int i = 0; i < nc; i )

threading integrated with eigen

With Eigen you can add

OMP_NUM_THREADS=n ./my_program

omp_set_num_threads(n);

Eigen::setNbThreads(n);

remarks with multithreading with eigen

However, in the FAQ:

currently Eigen parallelizes only general matrix-matrix products (bench), so it doesn't by itself take much advantage of parallel hardware."

In general, the improvement with OpenMP is not always here, so benchmark the release build. Another way is to make sure that you're using vectorized instructions.

Again, from the FAQ/vectorization:

How can I enable vectorization?

You just need to tell your compiler to enable the corresponding instruction set, and Eigen will then detect it. If it is enabled by default, then you don't need to do anything. On GCC and clang you can simply pass -march=native to let the compiler enables all instruction set that are supported by your CPU.

On the x86 architecture, SSE is not enabled by default by most compilers. You need to enable SSE2 (or newer) manually. For example, with GCC, you would pass the -msse2 command-line option.

On the x86-64 architecture, SSE2 is generally enabled by default, but you can enable AVX and FMA for better performance

On PowerPC, you have to use the following flags: -maltivec -mabi=altivec, for AltiVec, or -mvsx for VSX-capable systems.

On 32-bit ARM NEON, the following: -mfpu=neon -mfloat-abi=softfp|hard, depending if you are on a softfp/hardfp system. Most current distributions are using a hard floating-point ABI, so go for the latter, or just leave the default and just pass -mfpu=neon.

On 64-bit ARM, SIMD is enabled by default, you don't have to do anything extra.

On S390X SIMD (ZVector), you have to use a recent gcc (version >5.2.1) compiler, and add the following flags: -march=z13 -mzvector.

multithreading with cuda

Given the size of your arrays, you want to try to offload to a GPU to reach the microsecond; in that case you would have (typically) as many threads as the number of elements in your array.

For a simple start, if you have an nvidia card, you want to look at cublas, which also allows you to use the tensor registers (fused multiply add, etc) of the last generations, unlike regular kernel.

Since eigen is a header only library, it makes sense that you could use it in a cuda kernel.

You also may implements everything "by hand" (ie., without eigen) with regular kernels. This is a nonsense in terms of engineering, but common practice in an education/university project, in order to understand everything.

multithreading with OneAPI and Intel GPU

Since you have a skylake architecture, you also can unroll your loop on your CPU's GPU with OneAPI:

// Unroll loop as specified by the unroll factor.

#pragma unroll unroll_factor

for (int i = 0; i < nc; i )

(from the sample).