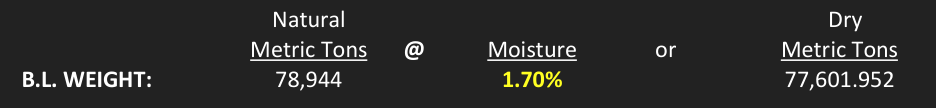

I'd like to extract certain numeric info from a bunch of PDFs. A sample is shown above, where the numeric info is positioned under the corresponding headings.

The string corresponding to the image above (read in with pdftools::pdf_text()) is:

```r
mystr <- ' Natural Dry\n Metric Tons @ Moisture or Metric Tons\n B.L. WEIGHT: 78,944 1.70% 77,601.952\n'
```

There are a lot of spaces and line breaks. Is it possible to extract the information under those headings?

My desired end result would be something like:

```r
myresult <- tibble(
  `Natural Metric Tons` = 78944,
  Moisture = 1.7,
  `Dry Metric Tons` = 77601.952
)
```
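If the layout is as fixed as in this sample, a minimal base-R sketch can work straight from the pdf_text() string: find the line containing `B.L. WEIGHT:`, pull out the numeric tokens with a regular expression, and strip commas and percent signs. It assumes the three values always appear on that line in the order Natural, Moisture, Dry; it builds a data.frame, which tibble::as_tibble() can convert if you need a tibble.

```r
mystr <- ' Natural Dry\n Metric Tons @ Moisture or Metric Tons\n B.L. WEIGHT: 78,944 1.70% 77,601.952\n'

# Split into lines and keep the one carrying the values.
# Assumption: the values always sit on the "B.L. WEIGHT:" line, in heading order.
txt_lines <- strsplit(mystr, "\n", fixed = TRUE)[[1]]
value_line <- grep("B.L. WEIGHT:", txt_lines, fixed = TRUE, value = TRUE)

# Tokens that start with a digit, may contain commas/dots, and may end in %.
tokens <- regmatches(value_line, gregexpr("[0-9][0-9,.]*%?", value_line))[[1]]
nums <- as.numeric(gsub("[,%]", "", tokens))  # "78,944" -> 78944, "1.70%" -> 1.7

myresult <- data.frame(
  `Natural Metric Tons` = nums[1],
  Moisture = nums[2],
  `Dry Metric Tons` = nums[3],
  check.names = FALSE  # keep the spaces in the column names
)
myresult
```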

Answer:

If you use pdftools::pdf_data() you get a list of data frames, one per page, containing each word and its x and y coordinates (among other data). Words on the same line share the same y coordinate, with x increasing from left to right. So you can wrangle each page's data frame as follows:

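```r
library(dplyr)

page %>%                                       # one element of the pdf_data() list
  group_by(y) %>%                              # words on the same line share y
  arrange(x, .by_group = TRUE) %>%             # sort left to right within each line
  filter(lag(text) == "your search term") %>%  # keep the word right after the term
  ungroup()
```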

You can then use a for loop or purrr::map() to apply this to the whole list.
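For readers without dplyr, here is a base-R equivalent of the same idea (group by y, sort by x, take the word right after the search term), applied to every page with lapply(). The `page` data frame is a hypothetical stand-in for one pdf_data() page with invented coordinates, and `word_after()` is a helper name made up for this sketch.

```r
# Hypothetical stand-in for one page of pdftools::pdf_data() output. The real
# data frame also has width, height and space columns; these coordinates are invented.
page <- data.frame(
  x    = c(40, 75, 150, 230, 320),
  y    = c(100, 100, 100, 100, 100),
  text = c("B.L.", "WEIGHT:", "78,944", "1.70%", "77,601.952"),
  stringsAsFactors = FALSE
)

# Return the word(s) immediately to the right of `term` on the same line.
word_after <- function(page, term) {
  page <- page[order(page$y, page$x), ]          # sort by line, then left to right
  prev <- ave(page$text, page$y,                 # previous word within each line
              FUN = function(t) c(NA, head(t, -1)))
  page$text[!is.na(prev) & prev == term]
}

# Apply to every page, as you would with the list returned by pdf_data().
pages <- list(page, page)
hits <- unlist(lapply(pages, word_after, term = "WEIGHT:"))
hits
```

Swapping lapply() for purrr::map() gives the same result as a list.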

I see from your sample that the numbers are centred under their headings. The code above assumes left-justified entries, so you may need more complex wrangling than group_by(y).
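One possible way to handle centred numbers is to compare box centres (x + width / 2, using the width column that pdf_data() also returns) rather than left edges, and assign each value to the heading whose centre is closest. This nearest-centre matching is a suggestion, not something pdftools provides. The coordinates below are invented, and the sketch assumes you have already separated a heading row and a value row (for example by filtering on y) with one anchor word per heading.

```r
# Hypothetical coordinates: one anchor word per heading, plus the value tokens.
headings <- data.frame(
  text  = c("Natural", "Moisture", "Dry"),
  x     = c(140, 225, 310),
  width = c(40, 45, 20),
  stringsAsFactors = FALSE
)
values <- data.frame(
  text  = c("78,944", "1.70%", "77,601.952"),
  x     = c(145, 228, 300),
  width = c(30, 25, 45),
  stringsAsFactors = FALSE
)

# Centre of each box; centred text lines up on centres, not on left edges.
h_mid <- headings$x + headings$width / 2
v_mid <- values$x + values$width / 2

# Index of the heading whose centre is horizontally closest to each value.
nearest <- vapply(v_mid, function(m) which.min(abs(h_mid - m)), integer(1))

# Clean the numbers and name them after their matched heading.
result <- setNames(as.numeric(gsub("[,%]", "", values$text)), headings$text[nearest])
result
```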

Sorry for any formatting problems, I am on mobile.