I have the following 3D NumPy array:

    import numpy as np

    arr = np.random.random([4, 3, 4])

    array([[[0.86061437, 0.28274671, 0.08120691, 0.07529454],
            [0.93281252, 0.28959613, 0.89955385, 0.23104958],
            [0.70399225, 0.78649787, 0.65668005, 0.1078731 ]],

           [[0.2604536 , 0.74093858, 0.71550647, 0.07096532],
            [0.49281007, 0.04934752, 0.2316176 , 0.8452892 ],
            [0.5559128 , 0.89977194, 0.60539768, 0.88640264]],

           [[0.21532865, 0.02557637, 0.70641993, 0.86614863],
            [0.26946359, 0.00956061, 0.91330073, 0.0074185 ],
            [0.79044557, 0.50265835, 0.70721046, 0.69482905]],

           [[0.15602922, 0.65337023, 0.44756636, 0.97871331],
            [0.60633134, 0.93488194, 0.53871744, 0.48607869],
            [0.39678678, 0.02369235, 0.42945214, 0.48460456]]])

If we slice `arr` with `v = arr[:,0,0]`, we get:

    array([0.86061437, 0.26045360, 0.21532865, 0.15602922])

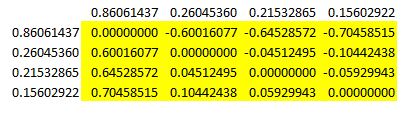

I would like to create a matrix like the one in yellow below that results from subtracting each element from every other element:

My initial thought was to take `arr[:,0,0]` and its transpose `arr[:,0,0].T` and subtract one from the other, but I'm not sure how to do it.

Thanks in advance for your help!

CodePudding user response:

You were very close:

    arr.T[..., None, :] - arr.T[..., None]
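
To see why this works, it may help to start with the single slice from the question. The following is only an illustrative sketch, not part of the original answer: it assumes the wanted matrix has entry `[i, j]` equal to `v[j] - v[i]` (which is what the one-liner produces; swap the operands if you need the opposite sign), and it uses a seeded generator purely so the run is reproducible.

```python
import numpy as np

rng = np.random.default_rng(0)  # seeded only for reproducibility (assumption)
arr = rng.random((4, 3, 4))

# One slice, as in the question: v has shape (4,)
v = arr[:, 0, 0]

# v[None, :] has shape (1, 4) and v[:, None] has shape (4, 1).
# Broadcasting stretches both to (4, 4), so diff[i, j] == v[j] - v[i].
diff = v[None, :] - v[:, None]

# The one-liner does the same for every slice at once.
# arr.T has shape (4, 3, 4), with arr.T[k, j, i] == arr[i, j, k].
all_diffs = arr.T[..., None, :] - arr.T[..., None]

print(diff.shape)                             # (4, 4)
print(all_diffs.shape)                        # (4, 3, 4, 4)
print(np.array_equal(all_diffs[0, 0], diff))  # True
```

Here `all_diffs[k, j]` is the pairwise-difference matrix for `arr[:, j, k]`. Note that `.T` on a 1-D array is a no-op, so `arr[:,0,0] - arr[:,0,0].T` just gives zeros; indexing with `None` adds the extra axis that broadcasting needs.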