I've been working on a Python project that recognizes codes in pictures, but I've run into trouble when the code is sideways and set against a noisy background. Nothing I've tried has come close to working, so I'm shooting in the dark. Some insight into how to solve this problem would be appreciated. I've tried everything from Tesseract to Keras-OCR, but I only get back random numbers and letters.

    import pytesseract
    import PIL.Image
    import cv2

    my_config = r"--psm 11 --oem 3"
    text = pytesseract.image_to_string(PIL.Image.open(path_to_image2), config=my_config)
    print(text)

I've also tried a lot with cv2, such as creating masks, but the masks aren't dynamic enough to filter out the background.

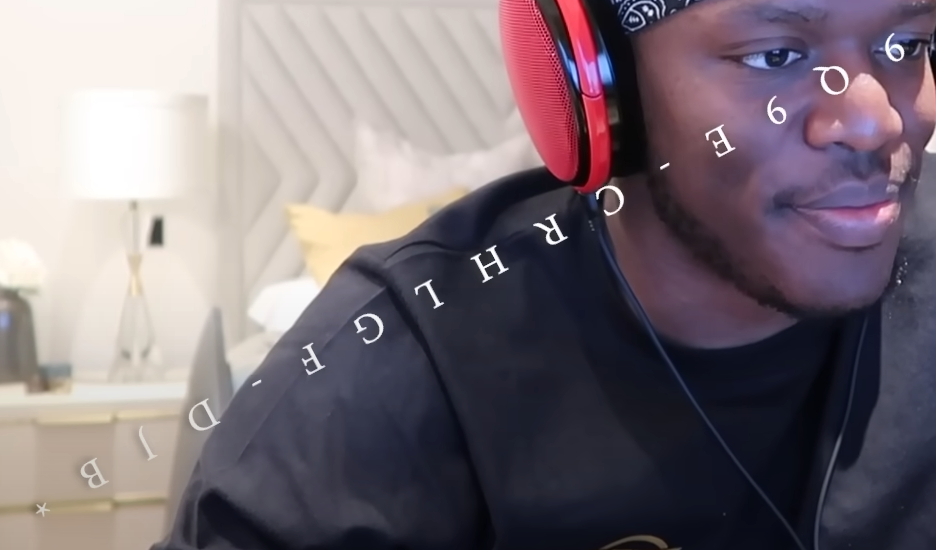

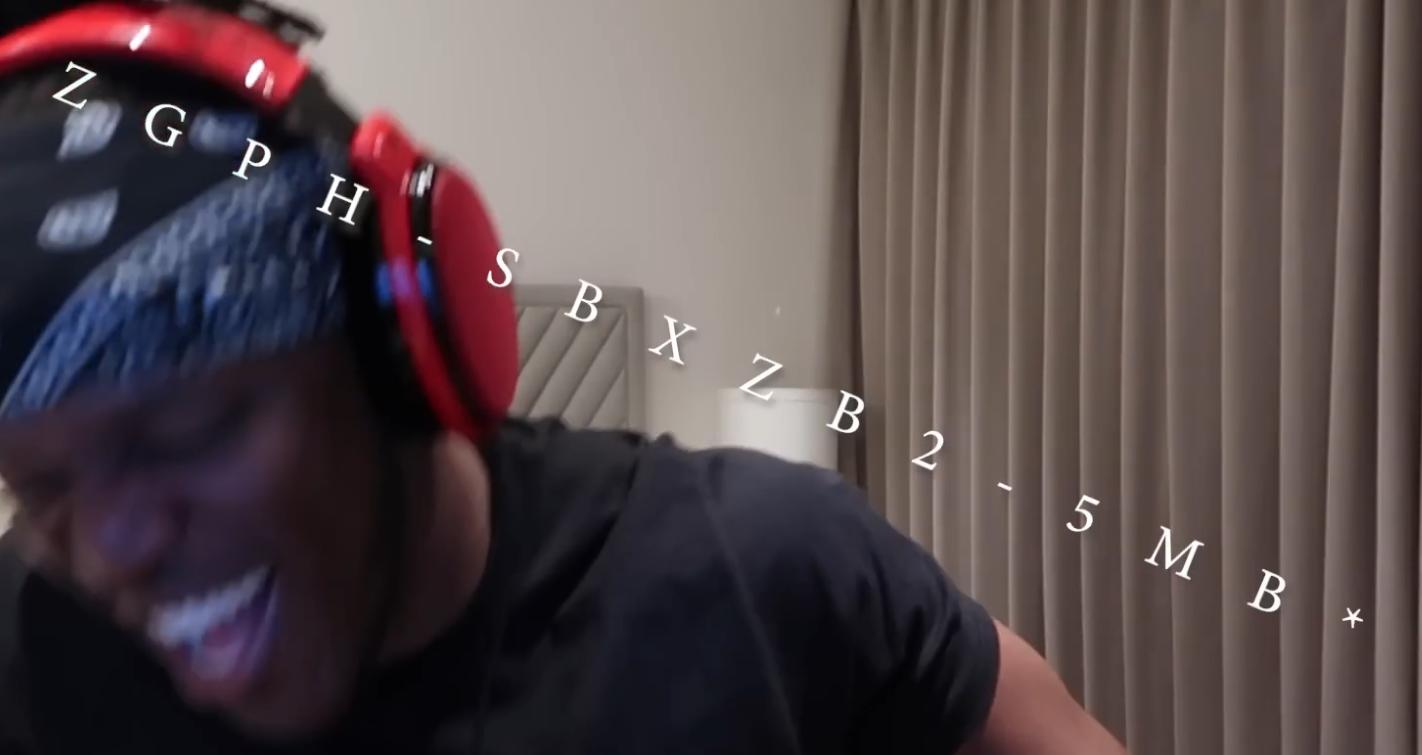

Here are two examples that I've been dealing with. Thank you to anyone who tries to help me out!

CodePudding user response:

I hope this helps in your case. I tried this exact scenario with EasyOCR.

    import cv2
    import easyocr
    import numpy as np
    from scipy import ndimage


    def image_sharpen(image, kernel_size=(5, 5), sigma=1.0, amount=1.0, threshold=0):
        """Unsharp mask: add back the difference between the image and its blur."""
        blurred = cv2.GaussianBlur(image, kernel_size, sigma)
        # The float factors promote the arrays, so the subtraction cannot wrap in uint8.
        sharpened = float(amount + 1) * image - float(amount) * blurred
        sharpened = np.clip(sharpened, 0, 255).round().astype(np.uint8)
        if threshold > 0:
            # Keep the original pixels wherever local contrast is low.
            low_contrast_mask = cv2.absdiff(image, blurred) < threshold
            np.copyto(sharpened, image, where=low_contrast_mask)
        return sharpened


    def reduce_brightness(image, gamma=1.0):
        """Gamma-correct through a lookup table; gamma < 1 darkens the image."""
        inv_gamma = 1.0 / gamma
        table = np.array(
            [((i / 255.0) ** inv_gamma) * 255 for i in range(256)]
        ).astype("uint8")
        return cv2.LUT(image, table)


    gamma = 0.35
    rotation_angle = 155  # 25 for yCVet.jpg
    image = cv2.imread('pze5c.png')  # or 'yCVet.jpg'

    rotate_image = ndimage.rotate(image, rotation_angle)
    sharpened = image_sharpen(rotate_image)
    adjusted = reduce_brightness(sharpened, gamma=gamma)
    cv2.imwrite('resize.png', adjusted)
    # cv2.imshow('', adjusted)
    # cv2.waitKey(0)

    reader = easyocr.Reader(['en'], gpu=False)
    result = reader.readtext('resize.png')
    for detection in result:
        print(detection)

The output I got is:

([[37, 394], [994, 394], [994, 505], [37, 505]], '9 Q 9 E - C R H L G F D ] B', 0.13722358295856807)

It recognized ] instead of J.
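A misread like this can sometimes be repaired after the fact. The following is only a rough sketch: `CONFUSABLE`, `normalize_code` and `is_plausible_code` are hypothetical names, and it assumes the codes contain nothing but uppercase letters, digits and hyphens, which I have not verified for your images.

```python
import re

# Hypothetical lookup of OCR confusions. Only "]" -> "J" and "$" -> "S" were
# actually observed in this answer's outputs; extend it with misreads you see.
CONFUSABLE = {"]": "J", "$": "S"}

# Assumption: a valid code contains only A-Z, 0-9 and hyphens.
VALID_CODE = re.compile(r"[A-Z0-9-]+")


def normalize_code(raw_text):
    """Drop the spaces between glyphs and replace known misreads."""
    compact = raw_text.replace(" ", "")
    return "".join(CONFUSABLE.get(ch, ch) for ch in compact)


def is_plausible_code(code):
    """True only if every character belongs to the assumed alphabet."""
    return VALID_CODE.fullmatch(code) is not None


raw = "9 Q 9 E - C R H L G F D ] B"
code = normalize_code(raw)
print(code, is_plausible_code(code))
```

Anything that still fails `is_plausible_code` is better flagged for a manual look than silently "fixed". EasyOCR's `readtext` also accepts an `allowlist` argument, which may get a similar effect at recognition time.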

For the other image I changed the rotation angle to 25. The output is:

([[78, 565], [1515, 565], [1515, 678], [78, 678]], "Z G P H ' $ B X Z B 2 - 5 M B *", 0.3908300967267578)

It picked $ instead of S. Feel free to experiment with gamma, the rotation angle and the other parameters. For better results you could use Google Vision, but it is not open source.
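Since I picked the rotation angle by hand for each image, one way to automate it might be to try a range of angles and keep the detection with the highest confidence. This is a minimal sketch, not something I ran on your images: `best_reading` and `fake_read` are hypothetical helpers, and the canned values in `fake_read` are made up so the example runs without an OCR library.

```python
def best_reading(read_at_angle, angles):
    """Return (angle, text, confidence) for the most confident detection.

    read_at_angle(angle) must return a list of (bbox, text, confidence)
    tuples, i.e. the same shape as the results printed above.
    Returns None if no angle produced any detection.
    """
    best = None
    for angle in angles:
        for _bbox, text, confidence in read_at_angle(angle):
            if best is None or confidence > best[2]:
                best = (angle, text, confidence)
    return best


# Stand-in reader with invented values, used only to exercise the function.
# A real version would rotate the image and run the OCR reader on it.
def fake_read(angle):
    canned = {
        25: [(None, "Z G P H", 0.39)],
        155: [(None, "9 Q 9 E", 0.14)],
    }
    return canned.get(angle, [])


print(best_reading(fake_read, range(0, 360, 5)))
```

With the code above, `read_at_angle` could rotate the image with `ndimage.rotate`, apply `image_sharpen` and `reduce_brightness`, and pass the resulting array to `reader.readtext`. Treat the confidence as a heuristic only: a confidently wrong reading at a bad angle is still possible.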