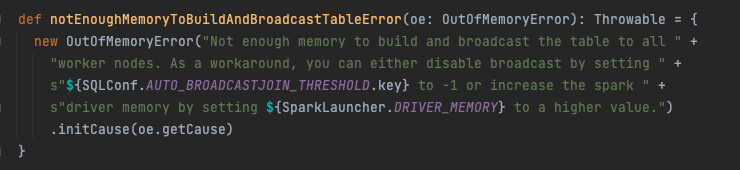

When broadcasting, Spark can fail with the error org.apache.spark.sql.errors.QueryExecutionErrors#notEnoughMemoryToBuildAndBroadcastTableError (Spark 3.2.1):

Why does BroadcastExchange need more driver memory? Isn't a broadcast just sending data to all executors? Why is driver memory the bottleneck?

Thanks.

CodePudding user response:

Unfortunately, executor-side broadcast joins are not yet supported in Spark (see SPARK-17556). Currently, all data of the broadcast dataset is first collected on the driver, which builds an in-memory hash table and then distributes it to the executors. This can put high memory pressure on the driver.
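
Since the driver is the bottleneck, the usual workarounds are to give the driver more memory or to stop Spark from choosing a broadcast join automatically. Below is a minimal PySpark sketch of the second option. The helper names `broadcast_workaround_conf` and `build_session` and the app name are hypothetical, made up for illustration; `spark.sql.autoBroadcastJoinThreshold` is a real Spark SQL setting, and `-1` disables automatic broadcasting.

```python
def broadcast_workaround_conf(disable_auto_broadcast=True,
                              threshold_bytes=10 * 1024 * 1024):
    """Return Spark SQL settings that control automatic broadcast joins.

    Hypothetical helper: -1 disables automatic broadcast joins, otherwise
    the threshold (in bytes) is passed through. 10 MB is Spark's default.
    """
    value = -1 if disable_auto_broadcast else threshold_bytes
    return {"spark.sql.autoBroadcastJoinThreshold": str(value)}


def build_session(conf, app_name="broadcast-memory-sketch"):
    """Create (or reuse) a SparkSession with the given settings applied."""
    # Imported lazily so the helper above works without pyspark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


# Example (requires a Spark installation):
# spark = build_session(broadcast_workaround_conf())
```

Note that this threshold only affects joins Spark decides to broadcast on its own; an explicit broadcast hint in the query still forces a broadcast. If you want to keep the broadcast join, raise the driver memory instead, for example with `spark-submit --driver-memory 8g` (the value is only an example). In client mode this has to be set at launch rather than from inside the application, because the driver JVM is already running by then.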