I have the coordinates of the four corners of a table in my image frame as well as the actual dimensions of that table. What I want to do is find the transformation matrix from the table in the frame (first image) to the actual table (second image). The goal is to be able to map points in the raw frame (location of a ball when it bounces) to where it bounced in the second image rectangle.

I have tried using OpenCV's findHomography, but I am getting inaccurate results. I am trying to find the matrix T that maps A to B, where:

A: the coordinates of table corners in the raw image (see first image):

[[512.10633894 269.22351997] # Bottom corner

[325.78198672 236.36953072] # Left Corner

[536.67952727 199.18259532] # Top Corner

[715.21023044 214.80199122]] # Right Corner

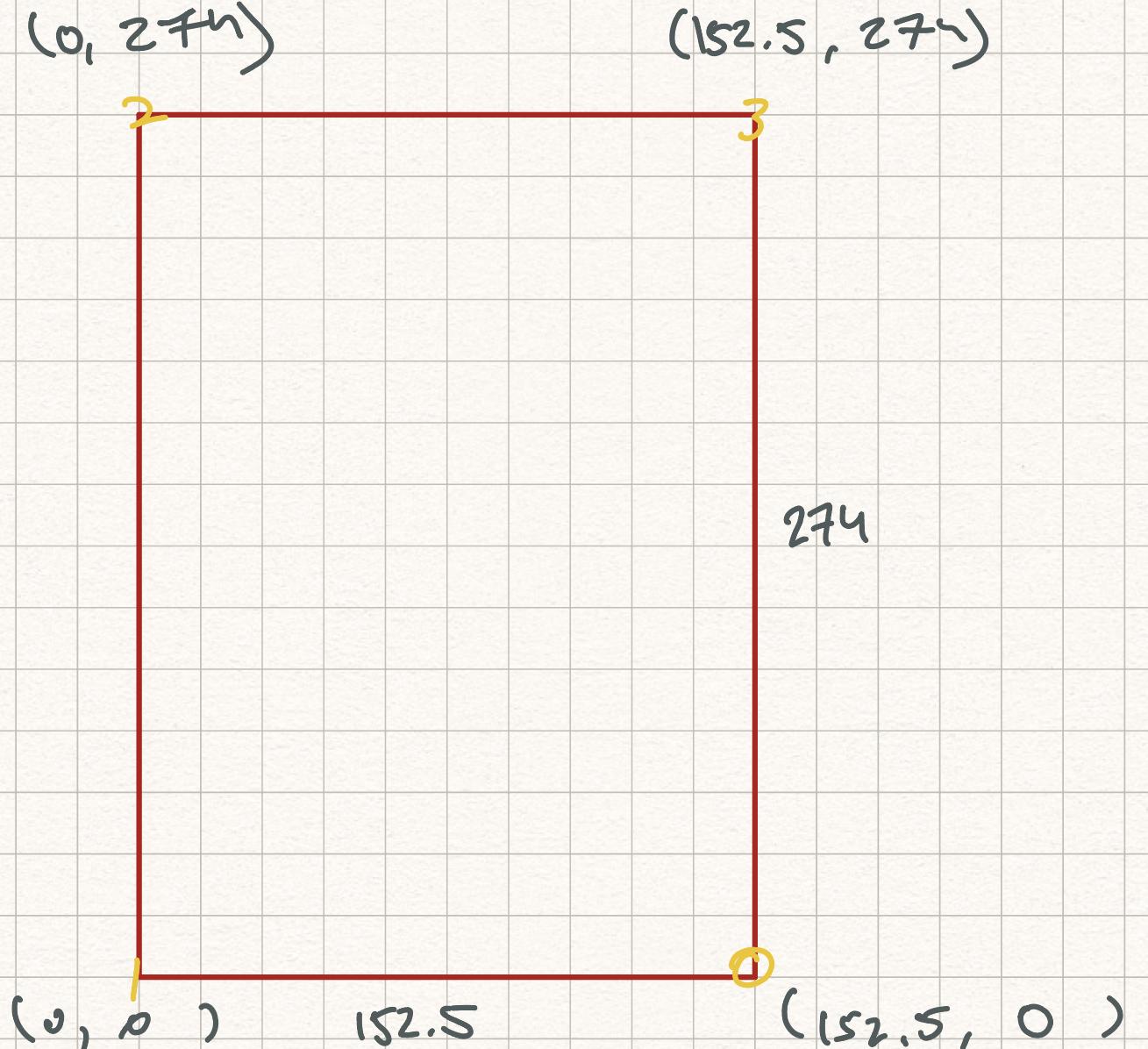

B: Actual coordinates to map to (see second image):

[[152.5 0. ]

[ 0. 0. ]

[ 0. 274. ]

[152.5 274. ]]

T: Transformation matrix

[[-5.96154850e-01 -3.38096031e+00 9.92931129e+02]

[-5.59829402e-01 3.17495425e+00 -5.68494643e+02]

[-2.76038296e-04 -8.61104226e-03 1.00000000e+00]]

findHomography gives me the transformation matrix T, but when I apply it to the original coordinates A, I expect to get B and instead end up with B':

[[[-221 0 -1]]

[[ 0 0 -1]]

[[ 0 -235 0]]

[[-159 -285 -1]]]

The magnitudes are roughly right, so it seems to be doing something correct, but the result is nowhere near the accuracy I want, and I don't understand why the nonzero values are all negative. Why is B' not equal to B?

Here is the relevant code:

T, status = cv.findHomography(img_corners, TABLE_COORDS, cv.RANSAC, 5.)

T_img = cv.transform(img_corners.reshape((-1, 1, 2)), T)

I have also tried changing the 5.0 parameter to something lower but without any luck...

CodePudding user response:

EDIT: It turns out your output was rounded too soon, and you forgot to "normalize" it by dividing by the last coordinate.

T_img /= T_img[:, 0, 2].reshape([4, 1, 1])

print(T_img)

The result looks fine once you read past the scientific notation:

[[[ 1.5249998e+02 -1.8539389e-05 1.0000000e+00]]

[[-3.9001084e-06 -3.6695651e-06 1.0000000e+00]]

[[-1.1705048e-05 2.7400000e+02 1.0000000e+00]]

[[ 1.5249997e+02 2.7399997e+02 1.0000000e+00]]]

Alternative way: use getPerspectiveTransform instead, which should essentially do the same thing for four point pairs.

import cv2

import numpy as np

input_pts = np.float32([ [512.10633894, 269.22351997], # Bottom corner

[325.78198672, 236.36953072], # Left Corner

[536.67952727, 199.18259532], # Top Corner

[715.21023044, 214.80199122]])

output_pts = np.float32([ [152.5, 0.0],

[ 0.0, 0.0],

[ 0.0, 274.0],

[152.5, 274.0]])

matrix = cv2.getPerspectiveTransform(input_pts, output_pts)

print(matrix)

Result:

[[-5.95356887e-01 -3.37643559e+00 9.92043065e+02]

[-5.58341286e-01 3.16651417e+00 -5.66569957e+02]

[-2.74644869e-04 -8.59569964e-03 1.00000000e+00]]
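To see where such a matrix comes from: with exactly four point pairs, the eight unknown entries (the bottom-right one fixed to 1) can be found by solving an 8x8 linear system. The following is a NumPy-only sketch of that idea, not OpenCV's actual implementation; solve_homography_4pt is a hypothetical helper name. Its result should be close to the matrix printed above, up to the float32 rounding of the inputs.

```python
import numpy as np

def solve_homography_4pt(src, dst):
    """Solve for H (with H[2, 2] = 1) mapping four src points to four dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11*x + h12*y + h13) / (h31*x + h32*y + 1), rearranged to be linear in h
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        # v = (h21*x + h22*y + h23) / (h31*x + h32*y + 1), rearranged likewise
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.extend([u, v])
    h = np.linalg.solve(np.array(A, dtype=np.float64), np.array(b, dtype=np.float64))
    return np.append(h, 1.0).reshape(3, 3)

src = np.array([[512.10633894, 269.22351997],
                [325.78198672, 236.36953072],
                [536.67952727, 199.18259532],
                [715.21023044, 214.80199122]])
dst = np.array([[152.5, 0.0], [0.0, 0.0], [0.0, 274.0], [152.5, 274.0]])

H = solve_homography_4pt(src, dst)

# Map the source points back through H, dividing by the last coordinate.
homogeneous = np.hstack([src, np.ones((4, 1))]) @ H.T
mapped = homogeneous[:, :2] / homogeneous[:, 2:3]
print(H)
print(mapped)
```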

Let's check now:

x = np.float32([512.10633894, 269.22351997, 1.0])

res = np.dot(matrix, x)

print(res)

print(res / res[2])

Result (remember that it must be normalized by the last coordinate):

[-221.85880447 0. -1.45481181]

[152.50000261 -0. 1. ]

Seems to be working.
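The single-point check above extends to all four corners at once. A small NumPy sketch, using the matrix exactly as printed by getPerspectiveTransform in this answer; apply_homography is a hypothetical helper name:

```python
import numpy as np

# Matrix as printed by getPerspectiveTransform above.
matrix = np.array([
    [-5.95356887e-01, -3.37643559e+00,  9.92043065e+02],
    [-5.58341286e-01,  3.16651417e+00, -5.66569957e+02],
    [-2.74644869e-04, -8.59569964e-03,  1.00000000e+00],
])

input_pts = np.array([[512.10633894, 269.22351997],   # Bottom corner
                      [325.78198672, 236.36953072],   # Left corner
                      [536.67952727, 199.18259532],   # Top corner
                      [715.21023044, 214.80199122]])  # Right corner
expected = np.array([[152.5, 0.0], [0.0, 0.0], [0.0, 274.0], [152.5, 274.0]])

def apply_homography(H, pts):
    """Map (N, 2) points through a 3x3 homography, including the division by w."""
    pts = np.asarray(pts, dtype=np.float64)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])  # append w = 1
    projected = homogeneous @ H.T                           # rows are (x', y', w')
    return projected[:, :2] / projected[:, 2:3]             # normalize by w'

mapped = apply_homography(matrix, input_pts)
print(np.round(mapped, 3))
```

Without the final division, the rows are the raw (x', y', w') values, which is where numbers like -221 come from.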

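I suspect your output was cast to int somewhere and lost its precision. Your question about the negative values also suggests that you forgot to "unscale" them: the last (homogeneous) coordinate is negative here, so dividing by it turns the negative values back into positive ones.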