In my Azure Data Factory I need to copy data from an SFTP source whose data is organized into date-based directories with the hierarchy year -> month -> date -> file.

I have created a linked service and a binary dataset, where the dataset's "filesystem" points to the host and "Directory" points to the folder that contains the year directories. Ex: host/exampledir/yeardir/, with yeardir containing the year directories.

When I manually write into the dataset that I want the folder "2015", it copies the entire 2015 folder. However, if I add a parameter for the directory and then pass in the same folder path from a copy activity, it creates a file called "2015" in my blob storage that contains no data.

My current workaround is a nested sequence of Get Metadata activities and ForEach loops that drill into each folder and subfolder and copy the individual files at the end. However, the desired result is to have the single binary dataset copy each folder without the need for Get Metadata.

Is this possible within the scope of the data factory?

edit:

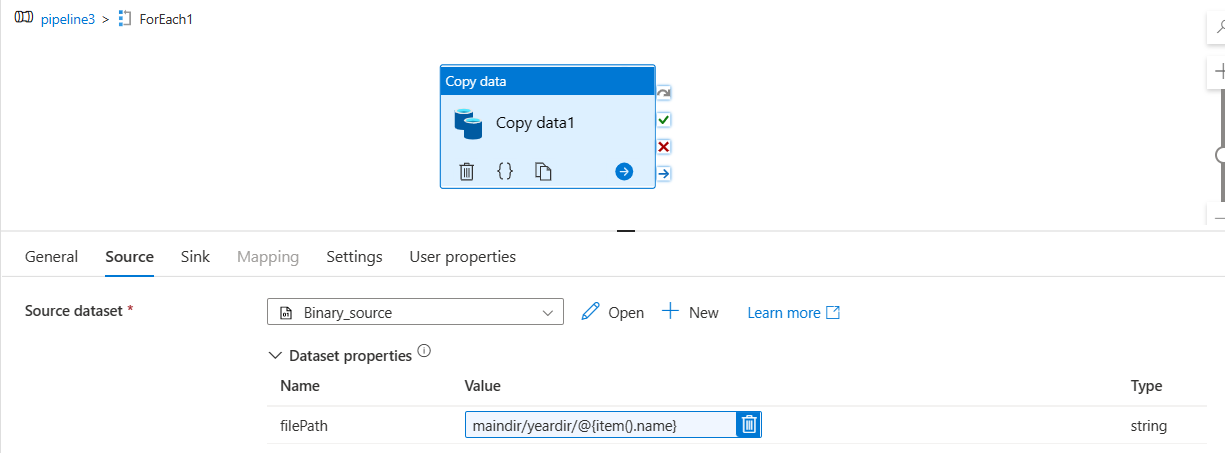

Properties used in the copy activity.

To add further context: I have tried manually writing the file path into the copy activity, as shown in the photo. I have also tried using variables, using dynamic content for the parameter (a base file path combined with concat), and putting the base file path into the dataset alongside @dataset().filePath. None of these have worked so far; they either copy nothing or create the empty file I mentioned earlier.

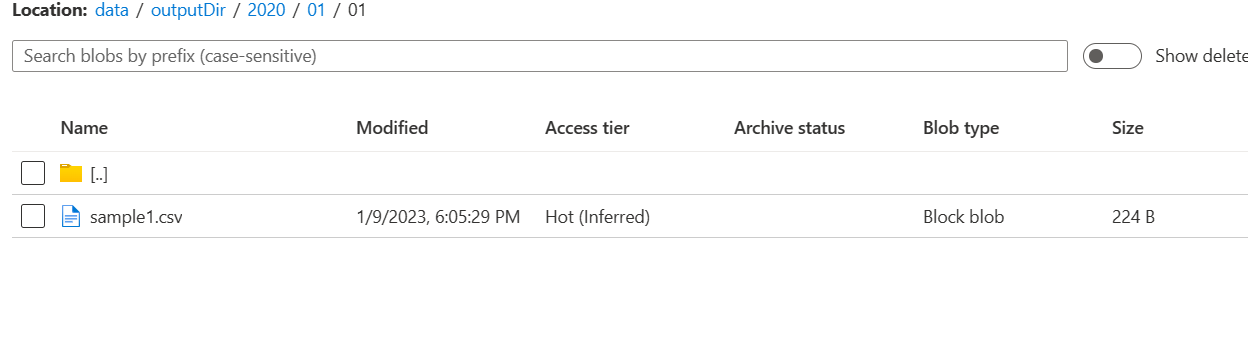

The sink is a binary dataset linked to Azure Data Lake Storage Gen2.

CodePudding user response:

Since giving exampledir/yeardir/2015 worked perfectly for you and you want to copy all the folders present in exampledir/yeardir, you can follow the procedure below:

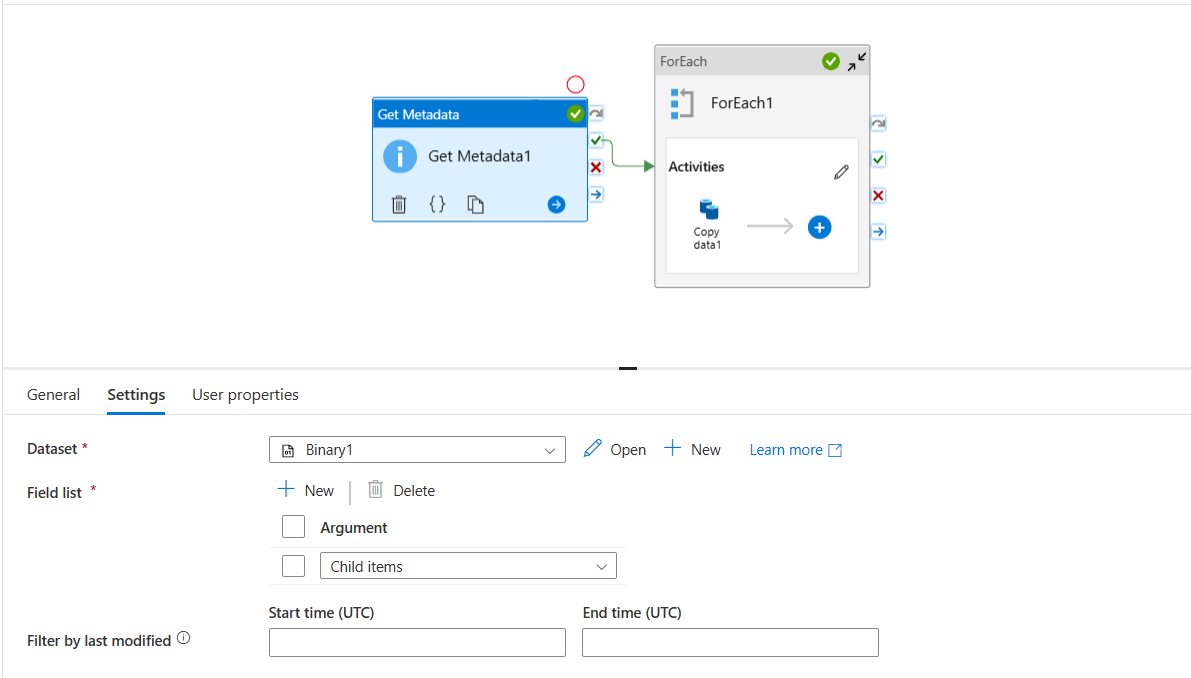

- I have taken a

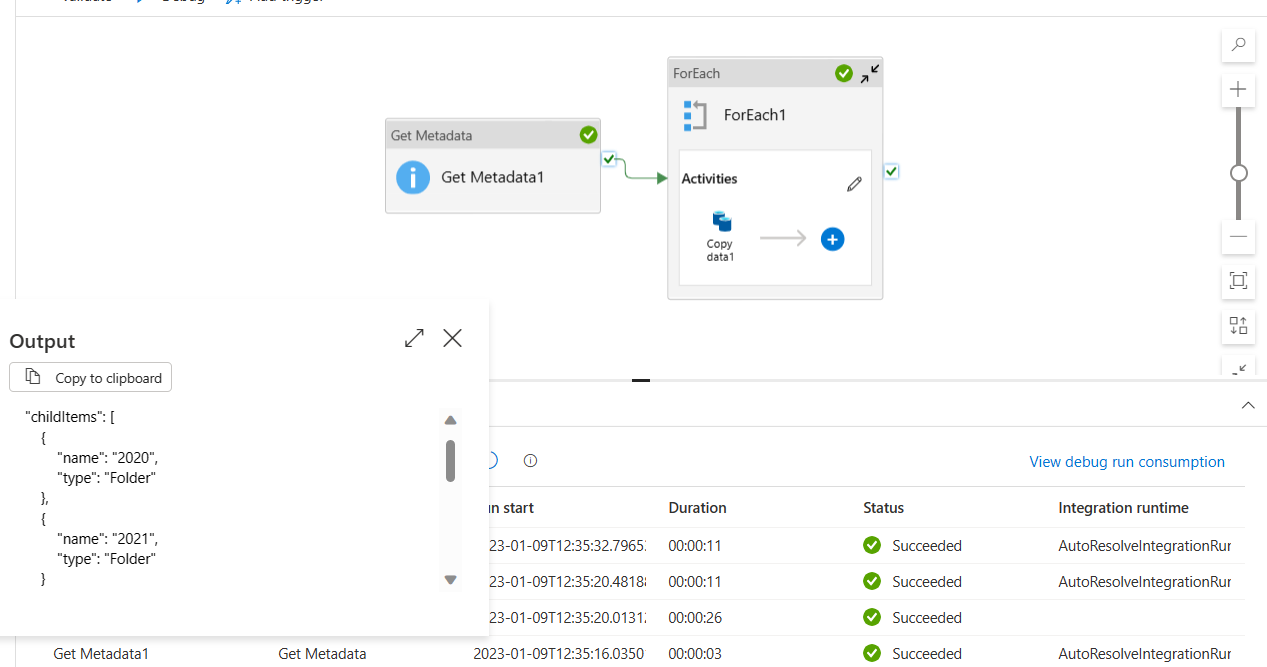

get metadataactivity to get the child items of the folderexampledir/yeardir/(In my demonstration, I have taken path as 'maindir/yeardir'.).

- This will give you all the year folders present. I have taken only 2020 and 2021 as an example.

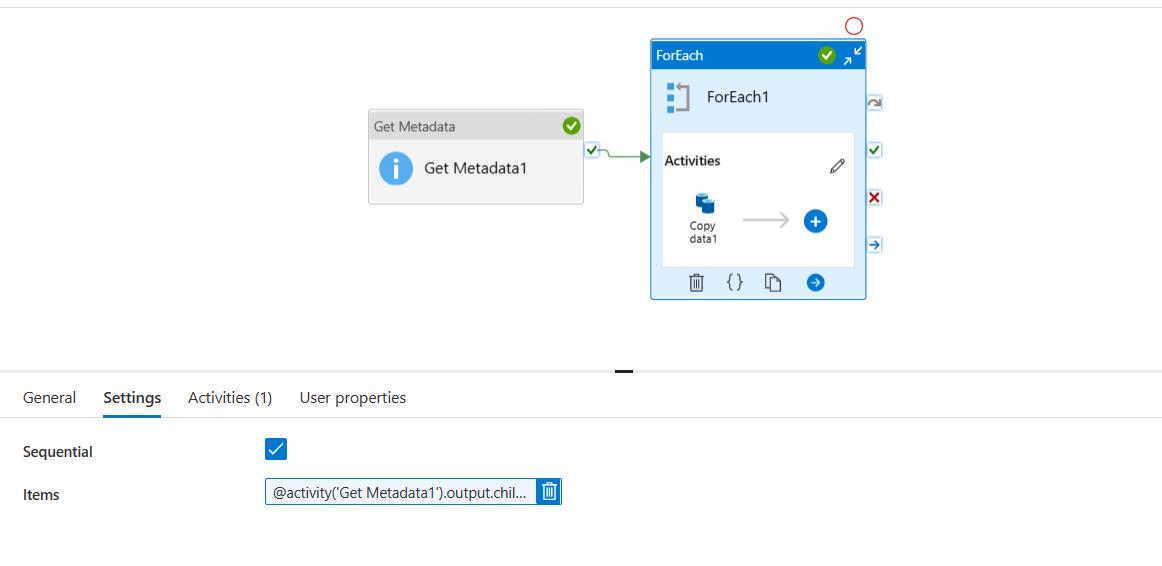

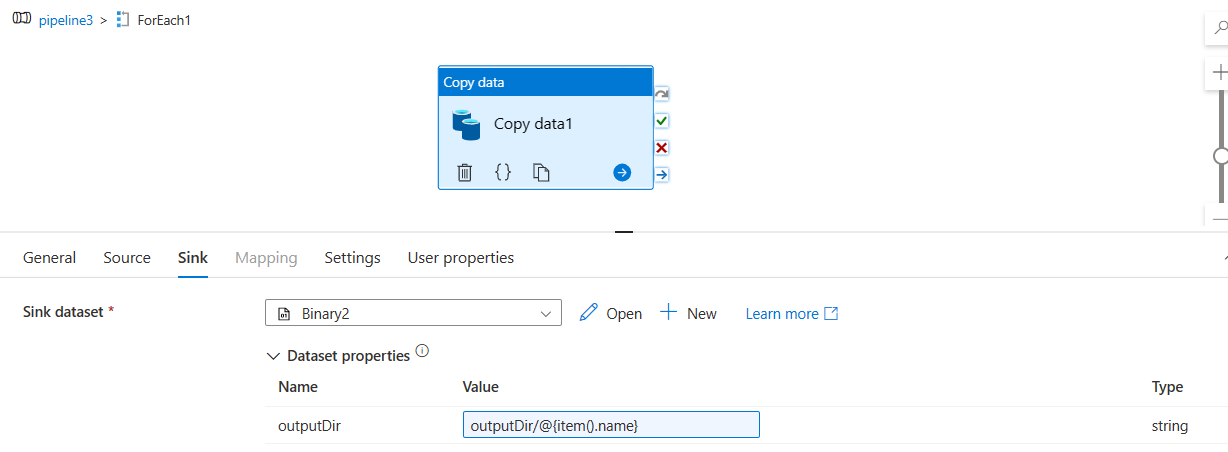

- Now, a single ForEach activity is enough. I have set its items value to the child items output of the Get Metadata activity and placed the copy activity directly inside it.

@activity('Get Metadata1').output.childItems

- Inside the ForEach, I have my copy data activity. For both source and sink, I have created a dataset parameter for the path. I have given the following dynamic content for the source path:

maindir/yeardir/@{item().name}

- For sink, I have given the output directory as follows:

outputDir/@{item().name}
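Put together, the steps above could look roughly like the following pipeline definition. This is only a sketch under assumptions: the pipeline name CopyYearFolders, the dataset names SftpBinary and AdlsBinary, and the dataset parameter name folderPath are hypothetical and not taken from the question, and the datasets are assumed to bind that parameter to the folder path of their location. Because JSON does not allow comments, the assumptions are repeated in the description fields.

```json
{
  "name": "CopyYearFolders",
  "properties": {
    "description": "Hypothetical sketch. The pipeline name, dataset names (SftpBinary, AdlsBinary) and the folderPath parameter are assumptions.",
    "activities": [
      {
        "name": "Get Metadata1",
        "description": "Lists the year folders under maindir/yeardir as childItems.",
        "type": "GetMetadata",
        "typeProperties": {
          "dataset": {
            "referenceName": "SftpBinary",
            "type": "DatasetReference",
            "parameters": { "folderPath": "maindir/yeardir" }
          },
          "fieldList": ["childItems"]
        }
      },
      {
        "name": "ForEach1",
        "description": "Runs one copy per year folder returned by Get Metadata1.",
        "type": "ForEach",
        "dependsOn": [
          { "activity": "Get Metadata1", "dependencyConditions": ["Succeeded"] }
        ],
        "typeProperties": {
          "items": {
            "value": "@activity('Get Metadata1').output.childItems",
            "type": "Expression"
          },
          "activities": [
            {
              "name": "Copy data1",
              "description": "Copies the current year folder recursively. Assumes both datasets expose a folderPath parameter.",
              "type": "Copy",
              "inputs": [
                {
                  "referenceName": "SftpBinary",
                  "type": "DatasetReference",
                  "parameters": {
                    "folderPath": {
                      "value": "maindir/yeardir/@{item().name}",
                      "type": "Expression"
                    }
                  }
                }
              ],
              "outputs": [
                {
                  "referenceName": "AdlsBinary",
                  "type": "DatasetReference",
                  "parameters": {
                    "folderPath": {
                      "value": "outputDir/@{item().name}",
                      "type": "Expression"
                    }
                  }
                }
              ],
              "typeProperties": {
                "source": {
                  "type": "BinarySource",
                  "storeSettings": { "type": "SftpReadSettings", "recursive": true }
                },
                "sink": {
                  "type": "BinarySink",
                  "storeSettings": { "type": "AzureBlobFSWriteSettings" }
                }
              }
            }
          ]
        }
      }
    ]
  }
}
```

One thing that may be worth checking, although the question does not confirm it: if a dataset parameter is bound to the file name of the dataset location rather than to its folder path, a value such as 2015 would be treated as a file name, which could explain the empty file called "2015".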

Since giving the path manually as exampledir/yeardir/2015 worked, we get the list of year folders using the Get Metadata activity. We then loop through each of them and copy each folder with the source path exampledir/yeardir/<current_iteration_year_folder>. Based on how I have given my sink path, the data will be copied along with its contents. The following is a reference image.